Tweetable Sentence:

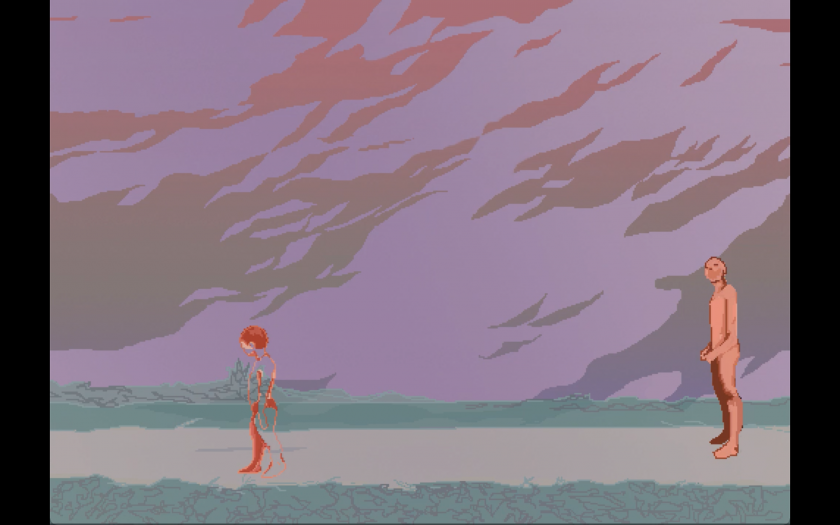

“Butter Please: A gaze-based interactive compilation of nightmares which aims to mimic the sensation of dreaming through playing with your perception of control.”

Overview

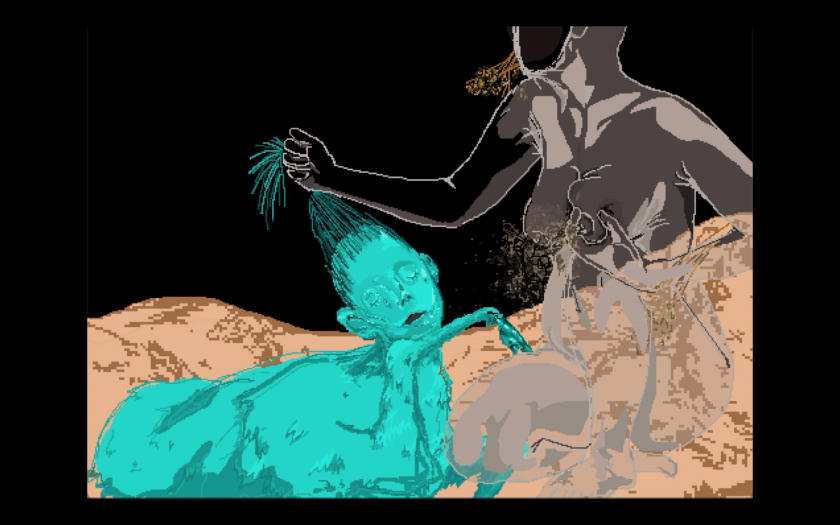

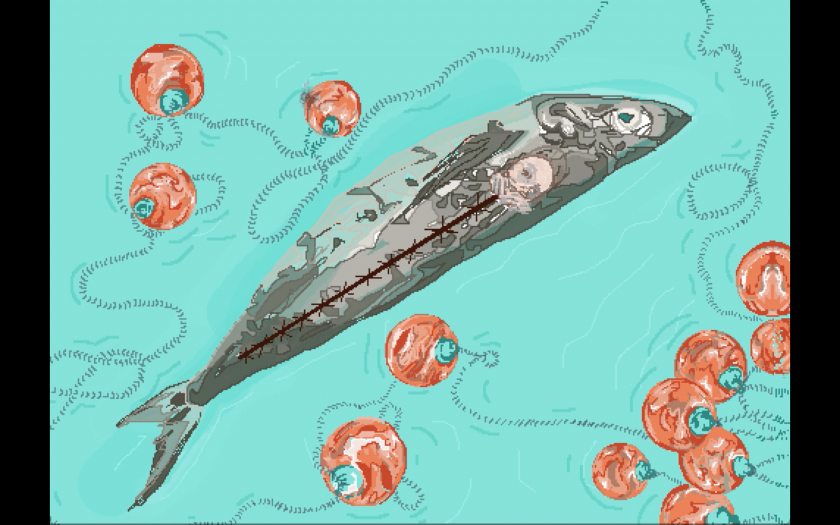

Butter Please is an interactive sequence of nightmares transcribed during a 3-month period of insomnia, and the result of an exacerbated depression. The work is an exploration of the phenomenological properties of dreaming and their evolutionary physiology, in addition to being a direct attempt to marry my own practices in fine art and human-computer interaction. The work finds parallels with mythology and folklore: the way people seem to ascribe a sense of sentimentality to such fantastical narratives.

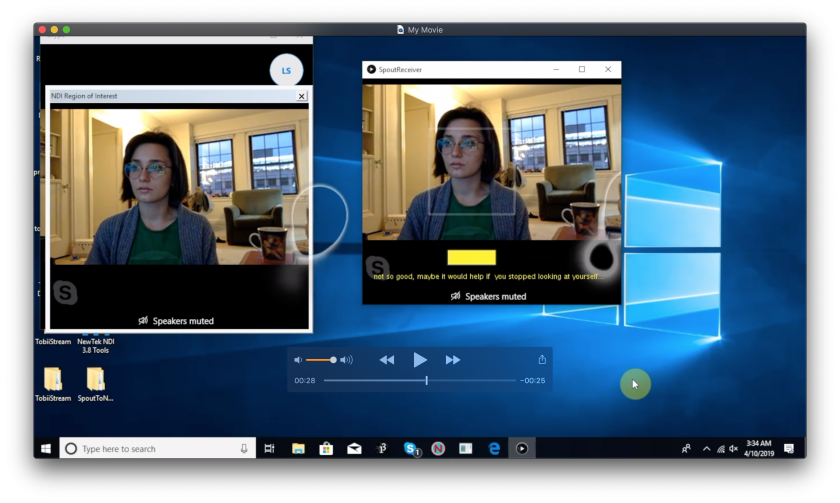

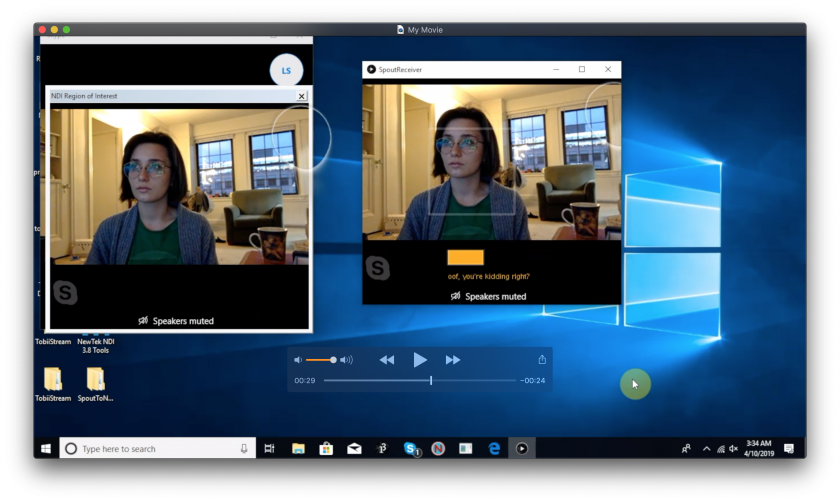

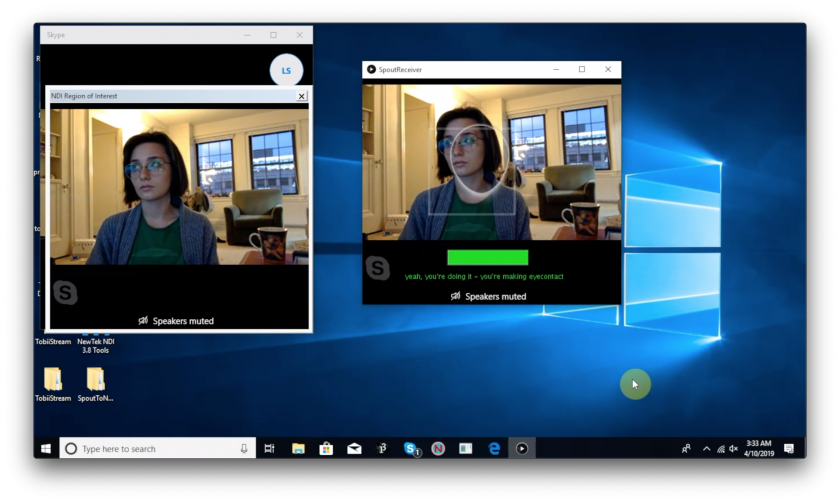

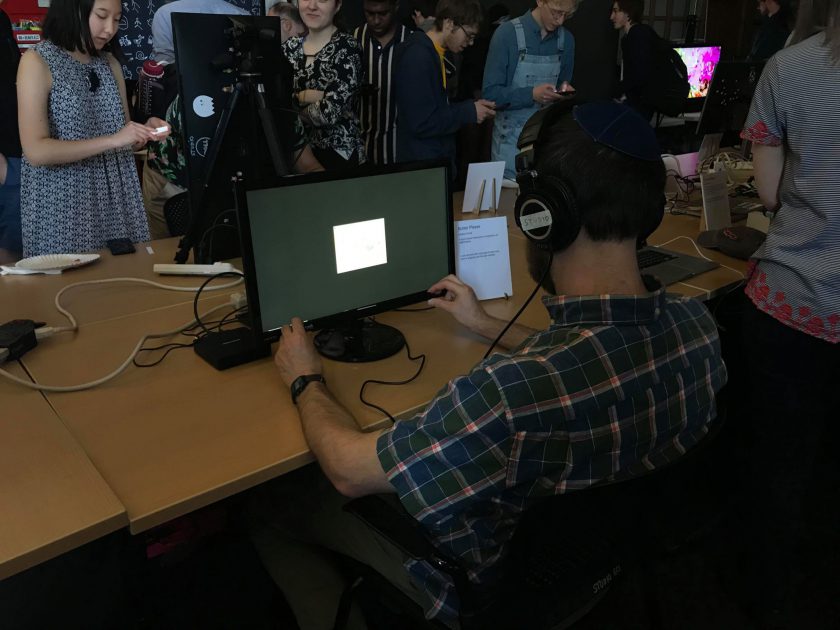

Butter Please mimics the sensation of dreaming by playing with your perception of control. Your gaze (picked up via the Tobii Eye Tracker 4C) controls how you move through the piece; it is how the work engages with, and responds to, you.
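For readers curious how gaze alone can drive a transition, here is a minimal sketch in Java (the language Processing is built on). It is not the code from the piece. It assumes a dwell-based trigger, where a scene change fires once the gaze has rested inside a screen region for a set time. The class name `GazeDwell`, the rectangular region, and the dwell threshold are all hypothetical and chosen only for illustration.

```java
// Hypothetical dwell-based gaze trigger; an illustration, not the code used in Butter Please.
class GazeDwell {
    private final double x, y, w, h;  // target region in screen pixels (assumed rectangular)
    private final long dwellMillis;   // how long the gaze must stay inside the region
    private long enteredAt = -1;      // time the gaze entered the region, -1 while outside

    GazeDwell(double x, double y, double w, double h, long dwellMillis) {
        this.x = x;
        this.y = y;
        this.w = w;
        this.h = h;
        this.dwellMillis = dwellMillis;
    }

    // Feed one gaze sample; returns true once the gaze has stayed inside long enough.
    boolean update(double gazeX, double gazeY, long nowMillis) {
        boolean inside = gazeX >= x && gazeX < x + w
                      && gazeY >= y && gazeY < y + h;
        if (!inside) {
            enteredAt = -1;           // looking away resets the timer
            return false;
        }
        if (enteredAt < 0) {
            enteredAt = nowMillis;    // first sample inside the region
        }
        return nowMillis - enteredAt >= dwellMillis;
    }
}
```

In a Processing sketch, something like this could be called once per frame with the latest gaze coordinates and a clock such as `millis()`, advancing to the next scene when `update` returns true. Resetting the timer whenever the gaze leaves the region keeps a passing glance from triggering a transition.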

Narrative

Butter Please, as mentioned above, was inspired by a 3-month period of insomnia I experienced in the midst of a period of emotional turmoil. The nightmares resulted from an overwhelming external anxiety brought on by a series of unfortunate events, and served only to exacerbate the difficulty of the time. The dreams themselves became so bad for me that I would do everything in my power to avoid falling asleep, which in turn birthed a vicious cycle. My inspiration for pursuing this was to try to dissect the experience a bit by replicating the dreams themselves (all of which I vividly transcribed during the time that this happened, as I thought it would be useful to me later on).

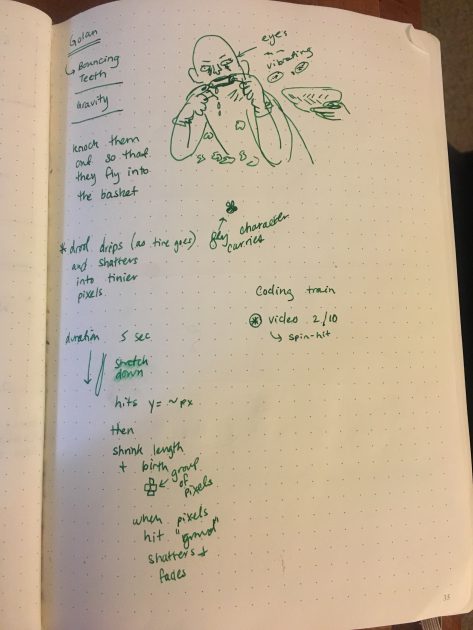

This project was something I felt strongly enough about to want to pursue to the end, so I decided to work on it with the hopes of displaying it in the senior exhibition (where it presently resides). In addition to being a way for me to process such an odd time in my life, it also became a way to experiment with combining my practice in Human-Computer Interaction and Fine Art: creating a piece of new media art that people could engage and interact with in a meaningful way.

It was a long process, from drawing each animation and aspect of the piece on my trackpad with a one-pixel brush in Photoshop (because I am a bit of a control freak), to actually deciding on the interactive portion of the piece (a.k.a. using the eye tracker, and specifically gaze, as the sole mode for transition through images), and beyond that, even deciding on how to present it in a show. At first it was a bit difficult to get the eye-tracker data out of the Tobii 4C, but as soon as I managed to do that the process of coding became much smoother. I think that I had the most difficulty with getting things to run quickly, simply because there was so much pixel data being drawn and re-drawn from scene to scene. In this way the project did not meet my original expectation for fluidity and smoothness…

On the other hand, it exceeded my original expectations on so many levels: I never would have expected to have coded a program which utilized an eye tracker even just 4 months ago, when I was searching for the best mode of interaction with this piece. I think that being in this class really developed my ability to source information for learning how to operate and control unusual technology, and for that I am actually pretty proud of myself (especially knowing how unconfident I was, and how little I felt I knew, at the beginning of the semester…).

In all honesty, I’m elated to have gotten the project to a finished-looking state; there were a few points where I wasn’t sure that I would be able to create it in time for the senior show.

That being said, I am extremely indebted to Ari (acdaly) for all of the help she provided in working out the kinks of the code (not to mention her tremendous moral support). I really can’t thank her enough for her kindness and her patience in working through things with me; I couldn’t have finished it on time, at its current level of polish, without her help.

Beyond that, I really owe it to all of my peers for the amazing feedback that they provided throughout the year (both my fellow art majors in the senior critique seminar in the Fall and Spring semesters and the folks from this Interactive Art course). It’s because of them that I was able to refine things to the point they are at.

Golan is also to thank (that goes without saying) for being such an invaluable resource and allowing me to borrow the tech I needed in order to make this project come to fruition.

Aaaaaaand finally I just want to give a shoutout to Augusto Esteves for creating an application to transmit data from the Tobii into Processing (that saved me a vast amount of time in the end).

________________________

Extra Documentation

Butter Please in action!

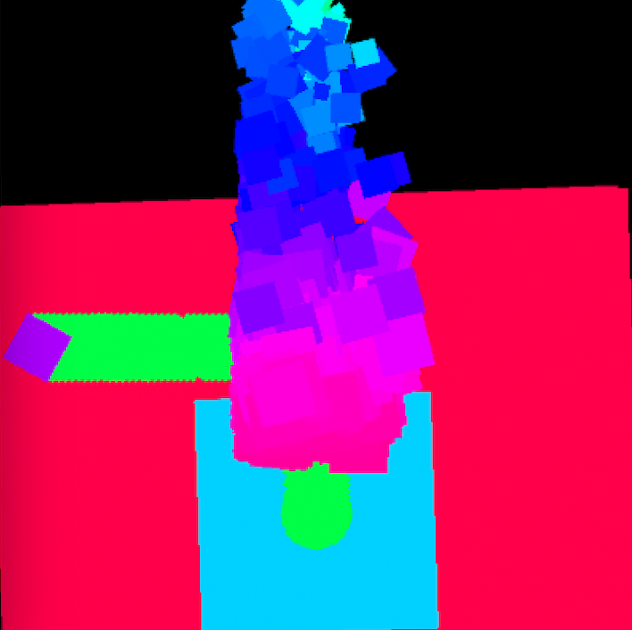

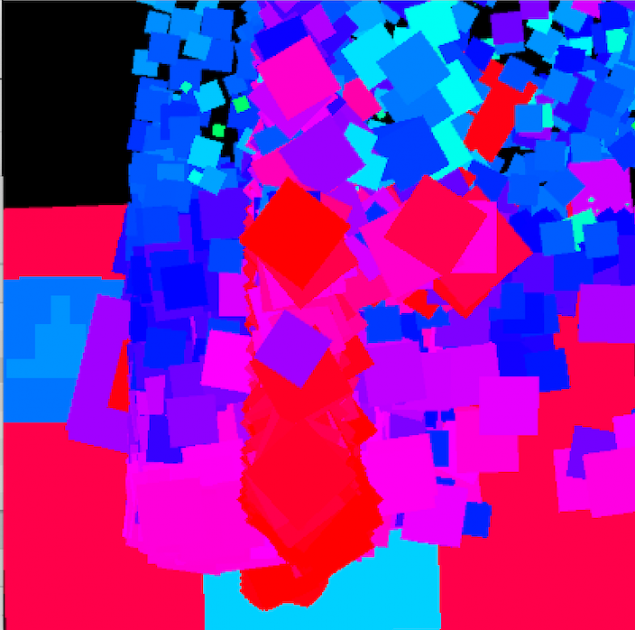

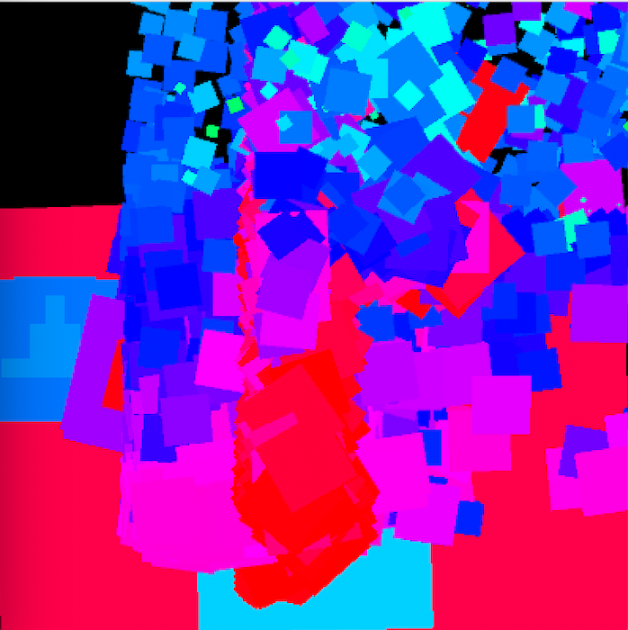

Shots from the piece:

________________________