My project idea is “Sketch a Level” (name pending): a rig where you take a piece of paper, sketch a drawing on it (say, a maze), and then have the computer read the drawing and project characters onto the paper. You would control your character with the movement of your finger on the paper, or the movement of the pencil; I haven’t decided yet.

The first item on my list is the game rig. Once I get that done, the rest is programming. Based on my last project, I think it would be a lot easier on me if I could write my program using the actual setup. Last time I wrote my program using a mock-up at CMU, which wasn’t the same as my setup at home… which ultimately just made things annoying and time-consuming.

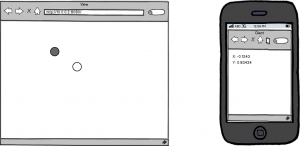

Sketch for the game rig. I’m probably going to use our old glass office table.

Another sketch, with some ideas on what I need to do.

Shape recognition. Portals, death traps, and other special symbols.

I’m thinking of using one of our office tables at home, with a handy old projector to project the characters from the bottom up (onto the glass and paper). Then I’d use a simple rig on the table to hold the camera, kind of like a lamp, but not.

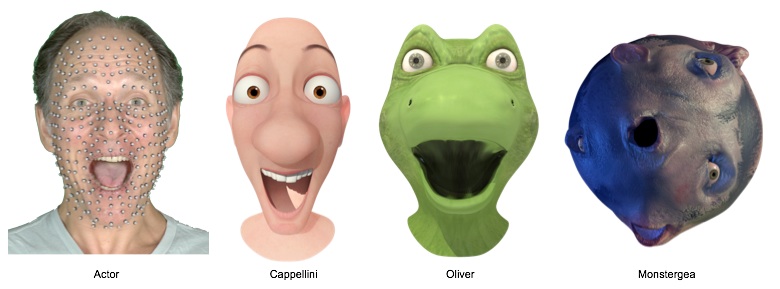

Inspiration… Mm.

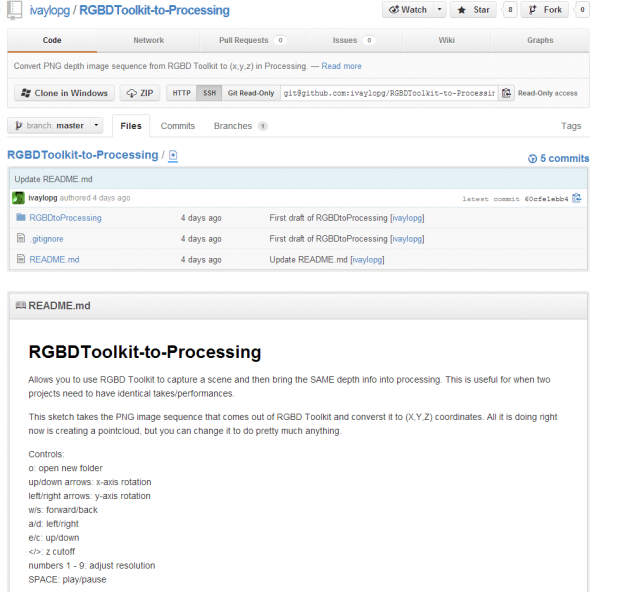

SketchSynth is a project done last year in IACD. My project will probably be along the same lines as this one. In fact, they’re practically identical excluding the content: its author did it to create a GUI, and I’m doing it for a game.

Notes to self:

- Set up the table rig (glass top, camera holder, and projection holder). Camera looks down, projector projects up through the glass. Line up the camera image with the projection. The frosted film on the glass should work.

- Mark off the area to sketch. It needs to be consistent; otherwise I will have to calibrate for every session. The sketch needs to have a consistent black border due to the way the collision maps are generated. A piece of black acrylic, laser-cut and fixed to the table, should do.

- I need to re-program the AI to be more intelligent. The best suggestion I got was to implement individual personalities, similar to how the Pac-Man game does it.

- Re-configure preexisting game setup. Basically, fix the GUI for this application.

- Work on shape recognition (hard) or color recognition (easy). Shape recognition could turn out to be a pain for me, especially since my programming experience is… well… 2 semesters, not even. I’ve done some reading and it’s not promising. Color recognition is easy; I have dabbled with it before. I could have it so certain colors mean different things: a portal, a death trap, a power pill, etc.

- Methods of control will vary as time goes on. I will start with a keyboard, the easiest means of interaction. Eventually I hope to do one of the following. The first is finger recognition, where the character traces the path of your finger. This has been done with a webcam and can also be done with a Kinect. I haven’t done it personally, though I have done hand tracking on the Kinect before. Another, easier route is color tracking: I could have a pencil in a particular color not present on the paper or setup, and the character could follow the pencil.
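
A minimal sketch of the color idea from the notes above, in plain Python so it runs without a camera. Everything named here is an assumption for illustration: the hue values in `SYMBOL_HUES`, the thresholds, and the image format (a list of rows of `(r, g, b)` tuples) are placeholders, not measured values from the actual rig. It classifies a pixel by hue, ignoring the white paper, gray pencil lines, and black border, and finds the center of all pixels of one color, which could serve both for locating a drawn symbol and for following a colored pencil.

```python
import colorsys

# Hypothetical marker colors: hue in degrees -> what the color means in the game.
SYMBOL_HUES = {"death_trap": 0.0, "power_pill": 120.0, "portal": 240.0}


def classify_pixel(r, g, b, min_saturation=0.4, min_value=0.3, hue_tolerance=30.0):
    """Return the symbol name for an RGB pixel (0-255 channels), or None."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    # Washed-out or dark pixels are paper, pencil graphite, or the black border.
    if s < min_saturation or v < min_value:
        return None
    hue = h * 360.0
    best, best_dist = None, hue_tolerance
    for name, target in SYMBOL_HUES.items():
        dist = abs(hue - target)
        dist = min(dist, 360.0 - dist)  # hue wraps around (350 is close to 0)
        if dist <= best_dist:
            best, best_dist = name, dist
    return best


def locate(image, symbol):
    """Return the (x, y) centroid of all pixels classified as `symbol`, or None."""
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            if classify_pixel(r, g, b) == symbol:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (sum(xs) / len(xs), sum(ys) / len(ys))
```

With a real camera the thresholds would likely need tuning under the projector's light, since the projection itself adds color to the paper. A centroid also lumps every blob of the same color together, so telling apart two portals of the same color would need a separate grouping step.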

Questions answered:

- Is there an unlimited number of portals, death traps, and other special symbols? No, I will probably set it up so the computer recognizes a maximum of say… 3.

- If you lift your finger off the paper and then place it on another portion of the paper, will the character teleport? No, I will probably have it set up so the character moves through the maze to the position of your finger. There will be no instant teleportation, except through the drawn portals.

- If the character enters one portal, and there are say 5, which one will the character pop out of? Are the portals linked? It will be random: the character will enter one portal and randomly pop out of another. It’s a game of chance.

- Any size paper? No, probably not. I’m thinking standard letter size or 11×17.

- Scale of symbols and maze, how does that affect the characters? I’m not sure. It would be difficult for me to program something with variable size… At least, well… I don’t know. I guess I could try to measure the smallest gap and then base the character size off the measured gap. Then the characters would re-size according to the map. I’ve never programmed something like that before, so I’m not sure if what I am thinking would work.
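
Two of the answers above can be tried out on a toy collision map before the rig exists. This is only a sketch under assumptions: the map is a hypothetical grid of `0` (open) and `1` (wall) cells with a solid border, and `MAZE`, `shortest_path`, and `smallest_gap` are made-up names. `shortest_path` uses a breadth-first search so the character walks through the maze to the finger position instead of teleporting. `smallest_gap` measures the shortest run of open cells along any row or column, which is one possible way to estimate the narrowest corridor and size the characters from it.

```python
from collections import deque

# Hypothetical collision map: 1 = wall (black), 0 = open paper.
MAZE = [
    [1, 1, 1, 1, 1, 1, 1],
    [1, 0, 0, 0, 0, 0, 1],
    [1, 0, 0, 0, 0, 0, 1],
    [1, 1, 1, 1, 0, 0, 1],
    [1, 1, 1, 1, 0, 0, 1],
    [1, 0, 0, 0, 0, 0, 1],
    [1, 0, 0, 0, 0, 0, 1],
    [1, 1, 1, 1, 1, 1, 1],
]


def shortest_path(grid, start, goal):
    """Breadth-first search over open cells; cells are (row, col) tuples.

    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


def smallest_gap(grid):
    """Length of the shortest run of open cells in any row or column (0 if none)."""
    def runs(line):
        count = 0
        for cell in line:
            if cell == 0:
                count += 1
            elif count:
                yield count
                count = 0
        if count:
            yield count

    lengths = []
    for row in grid:
        lengths.extend(runs(row))
    for col in zip(*grid):  # transpose to scan columns
        lengths.extend(runs(col))
    return min(lengths) if lengths else 0
```

For example, `shortest_path(MAZE, (1, 1), (6, 1))` gives the cells to step through one frame at a time, and `smallest_gap(MAZE)` could be multiplied by some factor below 1 to get a character size that fits. On a real hand-drawn map, stray specks would produce tiny runs, so the measurement would probably need some noise cleanup first, and diagonal corridors would be measured only roughly.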