Facetime Comics

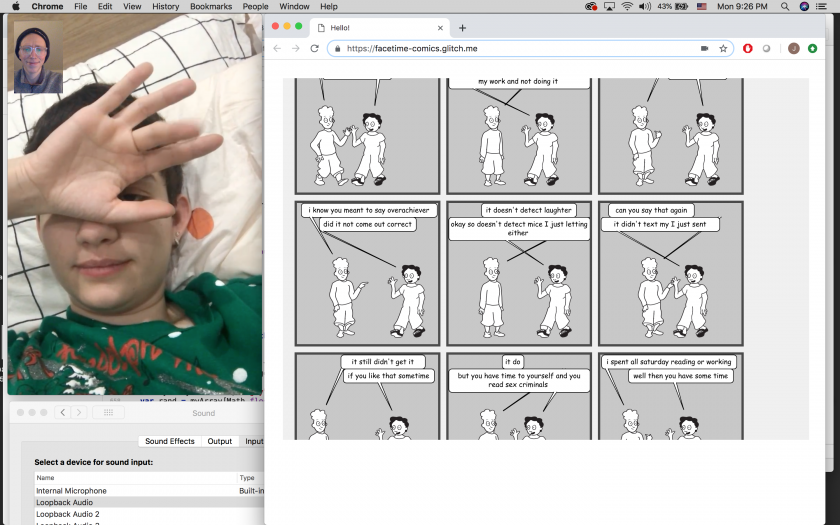

This project seeks to update Microsoft’s Comic Chat for transcribing video chat (it isn’t specific to FaceTime).

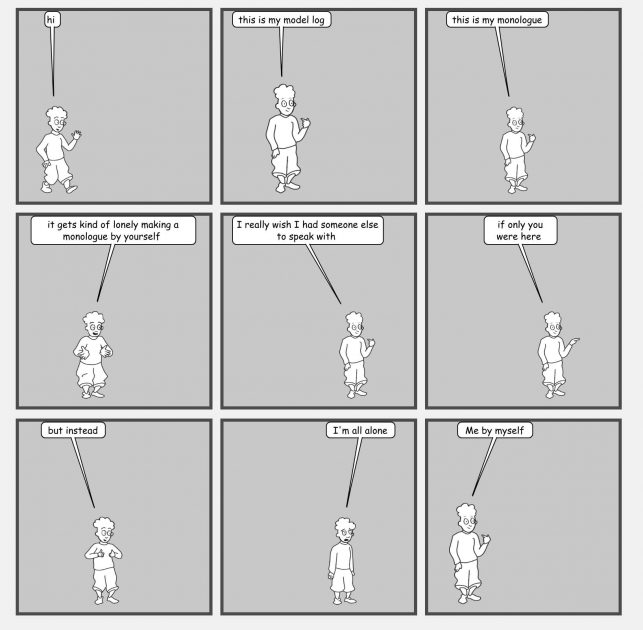

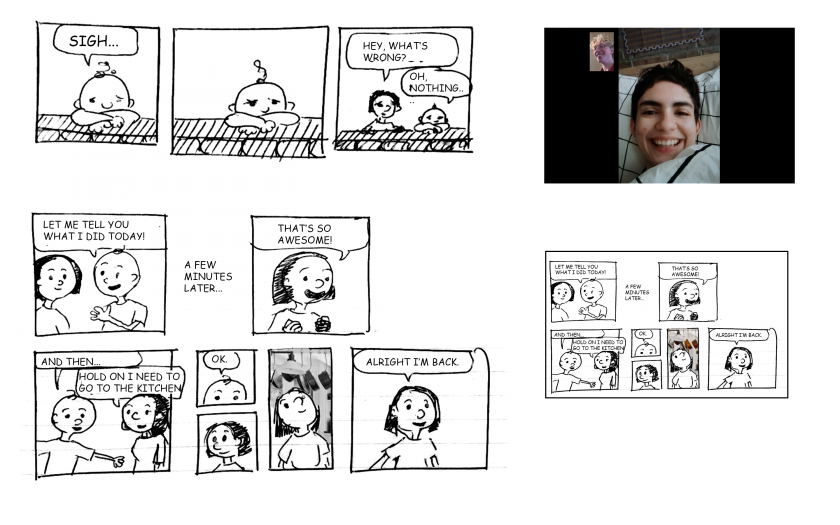

I’ve developed it to generate a comic book that features cartoons based on myself and my girlfriend. (Although, as in the above example, it can just be me in the comic.)

The software lays out characters, sizes them, lays out speech bubbles, and poses the characters dynamically.

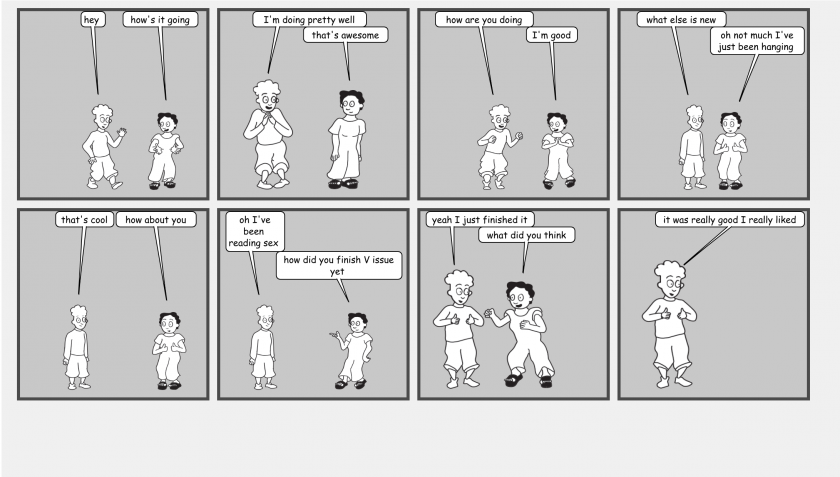

The basis for this project looks like this:

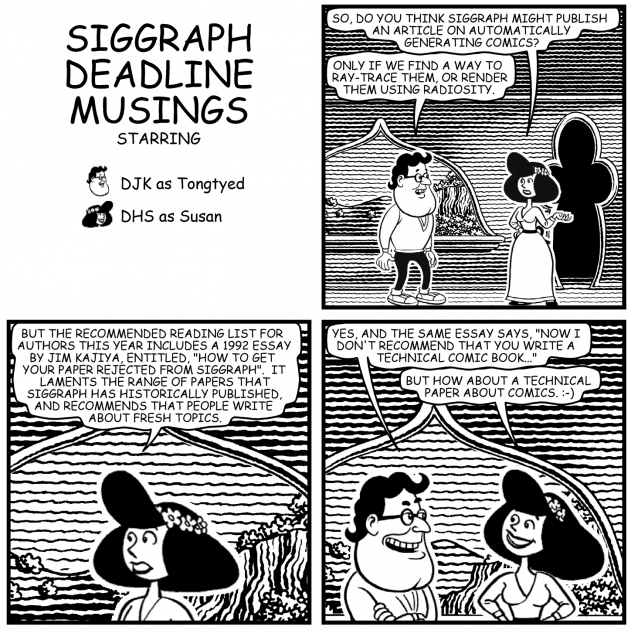

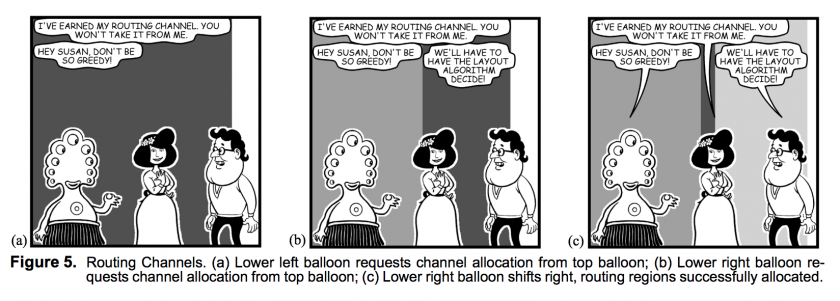

Microsoft deployed this feature for typed web chats in 1996. They also produced a paper documenting that project and how they accomplished some of its technical features, like using routing channels to lay out speech bubbles:

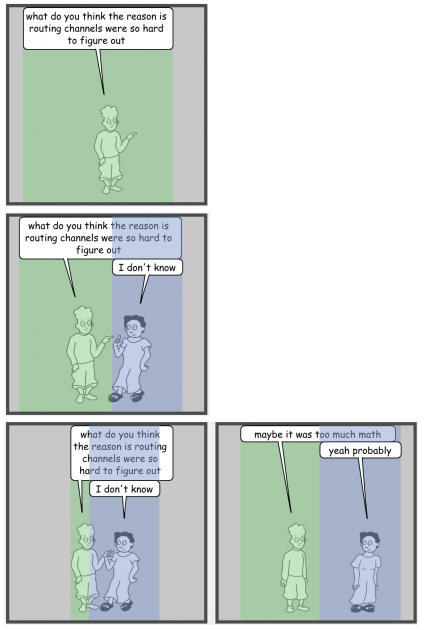

I’ve been able to more or less implement this myself:
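For readers who want a feel for the idea, here is a minimal sketch of one simplified reading of routing channels. It is not Microsoft's algorithm and not the code used in this project. It assumes balloons are stacked top to bottom in speaking order, that each balloon's tail drops straight down to its speaker inside a narrow vertical strip (the "channel"), and that a later balloon must stay out of the channels already reserved for other speakers. All names and parameters (`Balloon`, `layout_balloons`, `channel_width`, `step`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Balloon:
    speaker: str
    left: float
    right: float
    row: int  # 0 is the top row; rows follow speaking order


def overlaps(left, right, channel):
    """True if the horizontal span [left, right] intersects the channel."""
    c_left, c_right = channel
    return left < c_right and right > c_left


def layout_balloons(utterances, speaker_x, panel_width, balloon_width,
                    channel_width=10.0, step=1.0):
    """Place one balloon per utterance (hypothetical, simplified model).

    utterances: speaker names in speaking order.
    speaker_x:  horizontal position of each speaker's head in the panel.
    """
    channels = {}  # speaker -> (left, right) strip reserved for a tail
    placed = []
    half = channel_width / 2
    for row, speaker in enumerate(utterances):
        x = speaker_x[speaker]
        # The balloon must sit inside the panel and cover its own channel,
        # so the tail can drop straight down to the speaker.
        lo = max(0.0, x + half - balloon_width)
        hi = min(panel_width - balloon_width, x - half)
        if hi < lo:
            raise ValueError(f"balloon for {speaker!r} cannot cover its channel")
        # Tails of other speakers' earlier balloons pass through this row.
        blocked = [c for s, c in channels.items() if s != speaker]
        centered = x - balloon_width / 2
        candidates = [lo + i * step for i in range(int((hi - lo) / step) + 1)]
        # Prefer positions closest to being centered over the speaker.
        candidates.sort(key=lambda left: abs(left - centered))
        for left in candidates:
            right = left + balloon_width
            if not any(overlaps(left, right, c) for c in blocked):
                placed.append(Balloon(speaker, left, right, row))
                break
        else:
            raise ValueError(f"no free position for {speaker!r} in row {row}")
        channels[speaker] = (x - half, x + half)
    return placed
```

Calling `layout_balloons(["me", "her"], {"me": 50, "her": 80}, panel_width=200, balloon_width=80)` shows the effect: the second balloon cannot be centered over its speaker without covering the first speaker's tail, so it is pushed right until it clears that channel.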

The Microsoft project also tried to attain a semantic understanding of its conversations, and respond accordingly. While it and my program respond to speaker input based on a limited library of words (waving, for example, when someone says “Hi”), a deeper understanding of conversational meaning was something the Microsoft team could not accomplish and that I failed to realize as well. I do think it’s possible today, however, given the availability of wider libraries for programmatically generated language, to respond much more deeply to the spoken words in a conversation.

My character, responding to the spoken word “love.”
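The keyword-driven behavior described above can be sketched as a simple lookup from spoken words to poses. This is an illustrative assumption about how such a library might look, not the project's actual code. Only the "Hi" to waving pairing comes from the text; every other word, every pose name, and the `pose_for` function are hypothetical.

```python
import re

# Hypothetical trigger words and pose names; only "hi" -> waving is
# taken from the description above.
GESTURES = {
    "hi": "wave",
    "hello": "wave",
    "bye": "wave",
    "love": "swoon",
}


def pose_for(utterance, default="neutral"):
    """Return the pose for the first trigger word found in the utterance."""
    # Match whole words so that "this" does not trigger the "hi" gesture.
    for word in re.findall(r"[a-z']+", utterance.lower()):
        if word in GESTURES:
            return GESTURES[word]
    return default
```

A lookup like this only reacts to individual words, which is exactly the limitation discussed above: it has no sense of what the sentence as a whole means.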

My other inspiration for this work was Scott McCloud’s Understanding Comics, particularly his chapter on “Closure.”

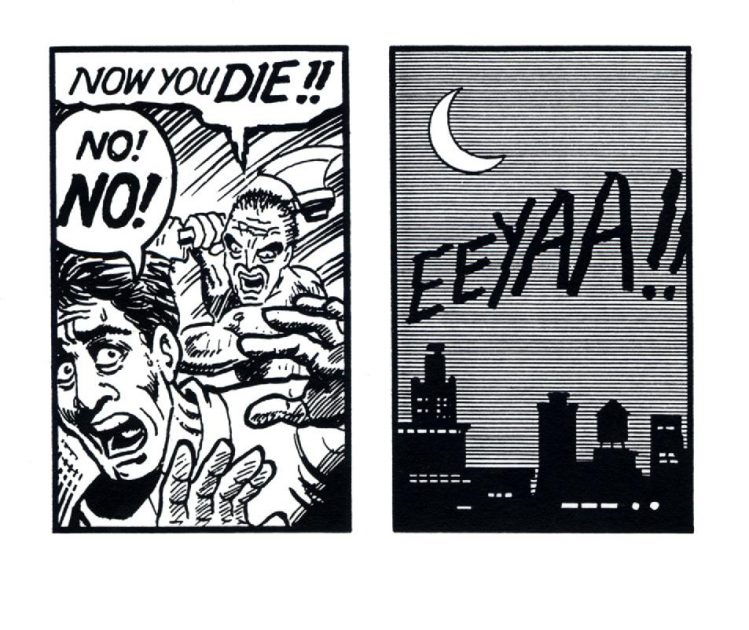

We infer that the attack happened in the space in between the panels.

McCloud considers the space in between panels, and how we read that space and infer what’s happening, as a unique quality of comics. He calls this “closure.” McCloud says that it’s present in video too, but at 24fps, the space in between “panels” is so little that the inferences we make between them are completely unconscious. Because of this, I think comics are a fitting transcription for video chat, as opposed to straight recording, because by limiting the frames shown, they open up the memory of the conversation to new interpretations.

Evaluating my project, I wish I had implemented a lot more. I wanted, for example, to base the emotions expressed by my characters on real-time face analysis or a deeper understanding of the meaning of the text. I also didn’t get to variable panel dimensions, and this is a small sign, for me, that I didn’t get past just recreating Microsoft’s project. It assumes an Internet-comic aesthetic right now, and I wish it had more refinement, and maybe more specificity to my style; there’s a little Bitmoji in the feeling of the character sheet above, and I don’t know how I feel about that.

From my sketchbook.