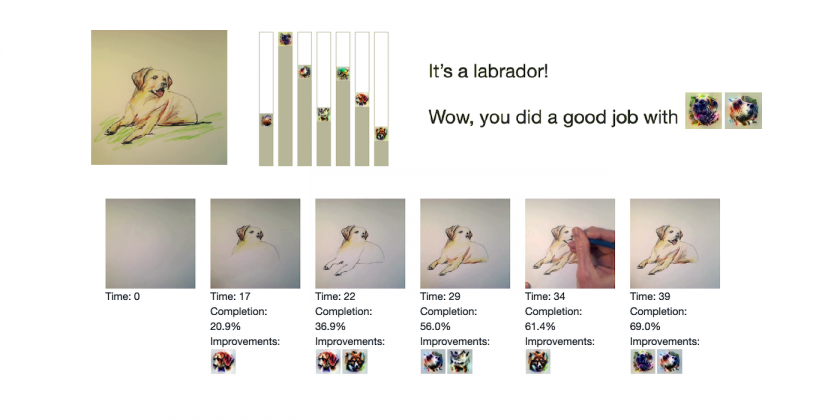

A conversational interaction with the hidden layers of a deep learning model about a drawing.

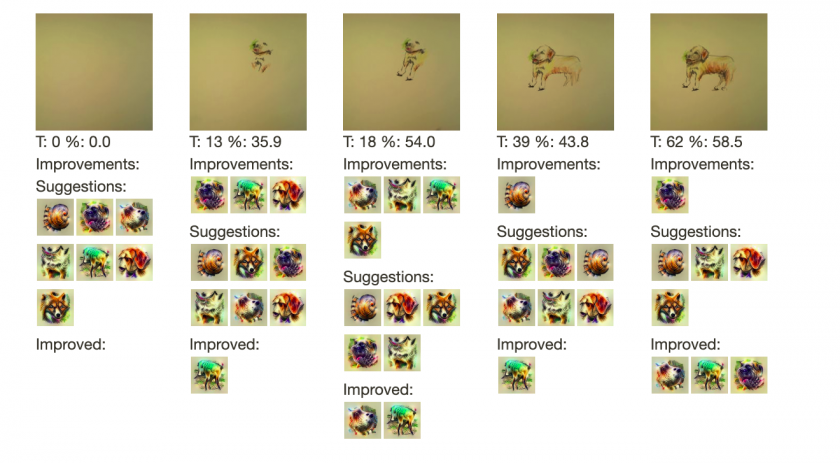

As an artist draws a dog, a machine offers friendly suggestions and acknowledgement about which parts are missing, have recently been improved, or seem fully drawn. It provides text mixed with visualization icons, as well as continuous feedback in the form of a colorful bar graph.

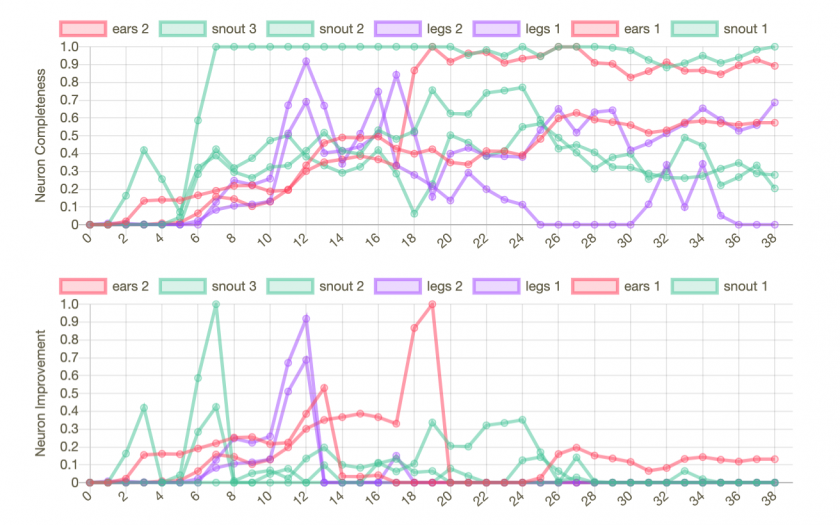

The machine’s understanding of the world is based on the hidden layers of a deep learning model. The model was originally trained to classify whole entities in photos, such as “Labrador retriever” or “tennis ball.” However, during this process, the hidden layers end up representing abstract concepts. The model creates its own understanding of “ears,” “snouts,” “legs,” etc. This allows us to co-opt the model to communicate about dog body parts in half-finished sketches.

I was inspired to create this project based on ideas and code from “The Building Blocks of Interpretability.” I became interested in the idea that the hidden layers of machine learning contain abstractions that are not programmed by humans.

This project investigates the question, “Can the hidden layers help us to develop a deeper understanding of the world?” We can often say what something is or whether we like it or not. However, it is more difficult to explain why. We can say, “This is a Labrador retriever.” But how did we come to that conclusion? If we were forced to articulate it (for example, in a drawing), we would have trouble.

Much of machine learning focuses on this exact problem. It takes known inputs and outputs, and approximates a function to fit them. In this case we have the idea of a dog (input) that we could identify if we saw it (output), but we do not know precisely how to articulate what makes it a dog (function) in a drawing.

Can the hidden layers in machine learning assist us in articulating this function that may or may not exist in our subconscious? I believe that their statistical perspective can help us to see connections that we are not consciously aware of.

This project consists of a Python server (based on code from distill.pub) that uses a pre-trained version of Google’s InceptionV1 (trained on ImageNet) to calculate how much each channel of neurons in the mixed4d layer contributes to an image being labeled as a Labrador retriever. This attribution is compared across frames and against complete drawings of dogs. Using this information, the machine talks about which dog parts seem to be missing, to be recently improved, or to be fully fleshed out.
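The comparison step can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it assumes the per-channel attribution scores have already been computed by the model and arrive as plain dictionaries. The channel indices in `PART_CHANNELS`, the function name `describe_parts`, and both thresholds are hypothetical placeholders chosen for the example.

```python
from typing import Dict

# Hypothetical mapping from dog parts to channel indices.
# These numbers are placeholders, not the channels hand-picked in the project.
PART_CHANNELS: Dict[str, int] = {"ears": 3, "snout": 7, "legs": 11}


def describe_parts(
    prev_attr: Dict[int, float],
    curr_attr: Dict[int, float],
    reference_attr: Dict[int, float],
    complete_ratio: float = 0.8,   # assumed threshold, not from the project
    improve_delta: float = 0.1,    # assumed threshold, not from the project
) -> Dict[str, str]:
    """Label each part as 'complete', 'improved', or 'missing'.

    prev_attr / curr_attr: channel attribution for the previous and current frame.
    reference_attr: channel attribution measured on complete dog drawings.
    """
    status = {}
    for part, channel in PART_CHANNELS.items():
        ref = reference_attr[channel]
        # Express each frame's attribution as a fraction of the reference value.
        ratio = curr_attr[channel] / ref if ref > 0 else 0.0
        prev_ratio = prev_attr[channel] / ref if ref > 0 else 0.0

        if ratio >= complete_ratio:
            status[part] = "complete"
        elif ratio - prev_ratio >= improve_delta:
            status[part] = "improved"
        else:
            status[part] = "missing"
    return status


if __name__ == "__main__":
    previous = {3: 0.1, 7: 0.2, 11: 0.0}
    current = {3: 0.9, 7: 0.5, 11: 0.05}
    reference = {3: 1.0, 7: 1.0, 11: 1.0}
    print(describe_parts(previous, current, reference))
```

Normalizing by the reference drawing keeps the thresholds comparable across channels whose raw attribution values differ in scale. A real system would likely also smooth the scores over several frames to avoid flickering feedback.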

This project succeeds in demonstrating that real-time comparison of hidden layers can tell us something about a drawing. On the other hand, it is extremely limited:

- The neuron channels are hand-picked, creating an element of human intervention in what might be able to arise purely from the machine.

- The neuron channel visualizations are static, so they give little information about what the channels actually represent.

- It only tells us very basic things about a dog. People already know dogs have snouts, ears, and legs. I want to try to dig deeper to see if there are interesting pieces of information that can emerge.

- It is also not really a “conversation,” because the human has no ability to talk back to the machine to create a feedback loop.

In the future, I would like to create a feedback loop with the machine where the human specifies their deepest understanding as the labels for the machine. After the machine is trained, the human somehow communicates with the hidden layers of the machine (through a bubbling up of flexible visualizations) to find deeper or higher levels of abstraction and decomposition. Then, the human uses those as new labels and retrains the machine learning model with the updated outputs, and the cycle repeats. Through this process, the human can learn about ideas trapped in their subconscious or totally outside of their original frame of thinking.

Thanks to code examples and libraries from:

https://colab.research.google.com/

https://github.com/jhuckaby/webcamjs