Important Contribution: ofxTimeline by James George

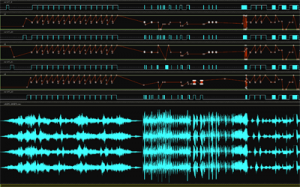

This is an add-on that lets you modify code parameters with a timeline and keyframe-based system. It takes inspiration from big, expensive packages like After Effects, Premiere, and Final Cut, but it is reusable and lightweight.

I have a feeling that there is much more to this add-on than what is demoed in the video. If I am right in thinking that you can code it into whatever project you are working on, it could save a lot of time and possibly give Adobe a run for their money. I would really like to see an altered timeline that allows you to stack layers and access them through an input variable.

Quick Sketch: Screen Capture to Sound by Satoru Higa

This app captures the appearance of the window behind it and turns it into a texture. The color of the texture then determines the pitch and volume of the sound.

http://www.creativeapplications.net/sound/capture-to-sound-by-satoru-higa/

This is a bit of a non sequitur project. What is the relationship between the appearance of the desktop and the action of making sound? I think this is an example of a project that makes correlations for no conceptual reason. This project might be interesting if the window functioned as a lens that somehow revealed or transformed the space beneath it.

Artistic Prowess: Forth by Karolina Sobecka

Forth is a public installation that simulates an endless ocean and groups of people traversing that ocean in lifeboats. The weather, sound, and amplitude of the waves are influenced by the current weather at its place of installation.

This piece was commissioned for an academic space where they used simulation. I think Sobecka succeeded in creating that allegorical aesthetic in this piece. I think it is particularly successful as a commissioned piece where the patrons had very specific criteria. I found the process of modifying a game engine interesting. This piece relates to Brody Condon’s Elvis piece.

The system can provide an alternate reality space that generates playful and natural interaction in an everyday setup for multiple users. The goal is to create a second surface on top of reality, invisible to the naked eye, that could generate a real-time spatial canvas on which everyone could express themselves. The system can create an interesting and new collaborative user experience, encourages playful content generation, and can provide new ways of communicating within everyday environments such as cities, schools, and households.
