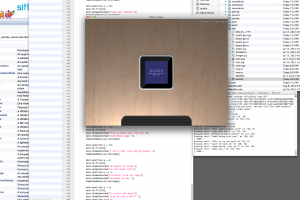

I recreated “Text Rain”, originally by Camille Utterback and Romy Achituv, in Processing. It was my first time back in Processing in a while, but after some help and practice I figured it out. I simply indexed the camera’s pixels and went about it the brightness() way: letters fall while the pixel under them is bright and climb back up when it is dark. It's fairly reliable, and getting it going was a lot of fun. I think a performance of me lip-syncing my favorite song is in order for a finished piece.
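For anyone new to the brightness() approach, here is a minimal plain-Java sketch of its two ingredients, written without the Processing library so it runs on its own. It assumes, as I understand Processing's implementation, that a frame is a flat `pixels[]` array of packed ARGB ints stored row by row, and that `brightness()` in the default RGB color mode returns the HSB brightness, which is the largest of the red, green and blue channels on a 0-255 scale. The `PixelBrightness` class and its method names are hypothetical.

```java
// Plain-Java sketch (no Processing library) of the two steps named above:
// finding a pixel in a flat, row-major pixels[] array, and measuring its brightness.
public class PixelBrightness {
    // Pixel (x, y) sits y full rows into the array, then x columns along.
    public static int pixelIndex(int x, int y, int width, int height) {
        int index = x + y * width;
        // Clamp to the array bounds, as the sketch does with constrain().
        return Math.max(0, Math.min(index, width * height - 1));
    }

    // Brightness of a packed ARGB pixel on a 0-255 scale, taken as the HSB
    // "value": the largest of its red, green and blue channels (assumption
    // about what Processing's brightness() does in the default color mode).
    public static float brightness(int argb) {
        int r = (argb >> 16) & 0xFF;
        int g = (argb >> 8) & 0xFF;
        int b = argb & 0xFF;
        return Math.max(r, Math.max(g, b));
    }

    public static void main(String[] args) {
        int width = 4, height = 3;
        int[] pixels = new int[width * height];
        int i = pixelIndex(2, 1, width, height);   // column 2, row 1
        pixels[i] = 0xFF336699;                    // R=0x33, G=0x66, B=0x99
        System.out.println(i);                     // 6
        System.out.println(brightness(pixels[i])); // 153.0
    }
}
```

Leaving out the `x` term in the index means every letter samples the first pixel of its row instead of the pixel it is actually sitting on.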

Text Rain – Re Do from Nathan Trevino on Vimeo.

My code is below.

```processing
//Nathan Trevino 2013
//Text Rain re-do. Original by Camille Utterback and Romy Achituv 1999
//Processing 2.0b7 by Nathan Trevino
//Special thanks to the Processing example code (website) as well as Golan Levin
//=============================================

import processing.video.*;

Capture camera;

float fallGravity = 1;   // pixels a letter moves per update
float fallStart = 0;     // y position where letters start (and restart)
int threshold = 100;     // brightness cutoff between background and obstacles

Rain WordLetters[];
int myLetters;

//==============================================

void setup() {
  // Going with a larger size, but giving up some speed.
  size(640, 480);
  camera = new Capture(this, width, height);
  camera.start();

  String wordString = "For all the things he could lose he lost them all";
  myLetters = wordString.length();
  WordLetters = new Rain[myLetters];

  for (int i = 0; i < myLetters; i++) {
    char a = wordString.charAt(i);
    // spread the letters evenly across the window
    float x = width * ((float)(i+1)/(myLetters+1));
    float y = fallStart;
    WordLetters[i] = new Rain(a, x, y);
  }
}
```
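The x position in setup() splits the window into `myLetters + 1` equal gaps, so the letters are evenly spaced with the same margin at each edge. Here is a small plain-Java sketch of just that arithmetic; the `LetterSpacing` class and `letterXs` method are made-up names, not part of the sketch.

```java
import java.util.Arrays;

// Plain-Java version of the x-position arithmetic in setup(): n letters are
// placed at width * (i + 1) / (n + 1), giving n evenly spaced slots.
public class LetterSpacing {
    public static float[] letterXs(String words, int width) {
        int n = words.length();
        float[] xs = new float[n];
        for (int i = 0; i < n; i++) {
            // cast to float first so (i + 1) / (n + 1) is not integer division
            xs[i] = width * ((float) (i + 1) / (n + 1));
        }
        return xs;
    }

    public static void main(String[] args) {
        // "For" has 3 letters, so a 640 px window gets 4 gaps of 160 px.
        System.out.println(Arrays.toString(letterXs("For", 640)));
        // [160.0, 320.0, 480.0]
    }
}
```

The `(float)` cast matters: without it, `(i+1)/(myLetters+1)` is integer division and every letter would land at x = 0.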

//==============================================

void draw() {

if (camera.available() == true) {

camera.read();

camera.loadPixels();

//Puts the video where it should be... top left corner beginning.

image(camera, 0, 0);

for (int i = 0; i < myLetters; i++) {

WordLetters[i].update();

WordLetters[i].draw();

}

}

}

//===================================

//simple key pressed fuction to start over the Rain

void keyPressed()

{

if (key == CODED) {

if (keyCode == ' ') {

for (int i=0; i < myLetters; i++) {

WordLetters[i].reset();

}

}

}

}

//=============================================

class Rain {

// This conains a single letter of the words of the entire string poem

// They fall as "individuals" and have their own position (x,y) and character (char)

char a;

float x;

float y;

Rain (char aa, float xx, float yy)

{

a = aa;

x = xx;

y = yy;

}

//=============================================

void update() {

//IMPORTANT NOTE!

// THE TEXT RAIN WORKS WITH A WHITE BACKGROUND AND THE DARK AREAS

// MOVE THE TEXT

// Updates the paramaters of Rain

// had some problems here for the pixel index, but a peek at Golan's code helped

int index = width*(int)y;

index = constrain (index, 0, width*height-1);

// Grayscale starts here. Range is defined here.

int thresholdGive = 4;

int thresholdUpper = threshold + thresholdGive;

int thresholdBottom = threshold - thresholdGive;

//find pixel colors and make it into brighness (much like alpha channeling video

// or images in Adobe photoshop or AE)

float pBright = brightness(camera.pixels[index]);

if (pBright > thresholdUpper) {

y += fallGravity;

}

else {

while ( (y > fallStart) && (pBright < thresholdBottom)) {

y -= fallGravity;

index = width*(int)y + (int)x;

index = constrain (index, 0, width*height-1);

pBright = brightness(camera.pixels[index]);

}

}

if ((y >= height) || (y < fallStart)) {

y = fallStart;

}

}

//============================

void reset() {

y = fallStart;

}

//=======================================

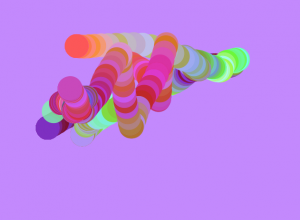

void draw() {

// Here I also couldn't really see my letters that well so I

// used Golan's "drop shadow" idea and some crazy random colors for funzies

fill (random(255), random(255), random(255));

text (""+a, x+1, y+1);

text (""+a, x-1, y+1);

text (""+a, x+1, y-1);

text (""+a, x-1, y-1);

fill(255, 255, 255);

text (""+a, x, y);

}

}
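The heart of the piece is `update()`. Stripped of the camera, the rule for one letter can be sketched in plain Java against a single column of brightness values. The column array, the `RainColumn` class and its constants are hypothetical stand-ins for `camera.pixels` at the letter's x, `fallGravity`, `fallStart`, `threshold` and `thresholdGive`. The rule: fall one step when the pixel is clearly brighter than the band, climb while the pixel stays clearly darker than the band, and otherwise hold still.

```java
import java.util.Arrays;

// Plain-Java sketch of the Rain.update() rule for one letter. Assumption: a
// single float[] column of brightness values (0-255, top row first) stands in
// for the camera pixels under the letter's fixed x position.
public class RainColumn {
    static final float FALL = 1;       // like fallGravity
    static final float START = 0;      // like fallStart
    static final int THRESHOLD = 100;  // like threshold
    static final int GIVE = 4;         // like thresholdGive

    // Clamp a row number into the column, like constrain() in the sketch.
    static int clamp(int row, int rows) {
        return Math.max(0, Math.min(row, rows - 1));
    }

    // One update step: returns the letter's new y.
    public static float step(float y, float[] column) {
        float b = column[clamp((int) y, column.length)];
        if (b > THRESHOLD + GIVE) {
            y += FALL;  // clearly bright: keep falling
        } else {
            // Not clearly bright: hold still, or, if clearly dark, climb
            // until the pixel under the letter is no longer darker than the band.
            while (y > START && b < THRESHOLD - GIVE) {
                y -= FALL;
                b = column[clamp((int) y, column.length)];
            }
        }
        if (y >= column.length || y < START) {
            y = START;  // fell off the bottom: start over at the top
        }
        return y;
    }

    public static void main(String[] args) {
        float[] column = new float[10];
        Arrays.fill(column, 255f);                    // white background...
        for (int i = 6; i < 10; i++) column[i] = 0f;  // ...dark shape from row 6 down
        System.out.println(step(2, column));  // 3.0: bright pixel, fall
        System.out.println(step(8, column));  // 5.0: inside the dark shape, climb out
        column[2] = THRESHOLD;                // brightness inside the +/- GIVE band
        System.out.println(step(2, column));  // 2.0: hold still
    }
}
```

The band means pixels within 4 of the threshold neither push a letter down nor lift it, which gives a little tolerance for mid-gray pixels near the cutoff.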