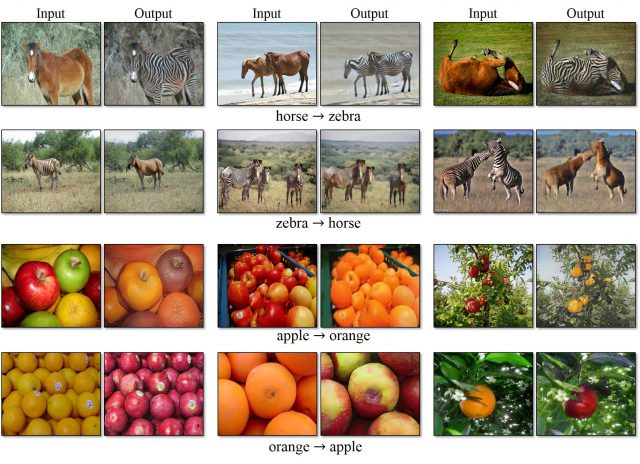

I recently became interested in machine learning (ML) algorithms that alter or augment existing images. I was intrigued by the ways these algorithms might expand our perception of the world.

Colorization of grayscale images

The first thing that came to mind was an ultrasound image of a fetus, which we can only ever see in black and white. How would it feel different if we could see it in color? Unfortunately, there is no ground truth for such an image yet (no color images of a fetus to learn from), so colorization doesn’t work on it. Still, the attempt revealed that there are things we wish we could see in color.

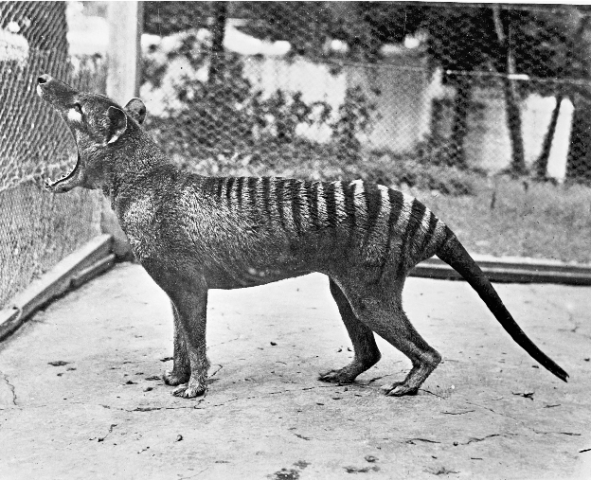

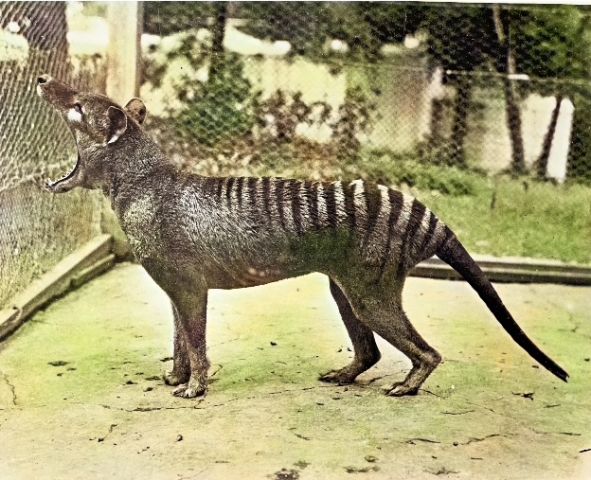

Another idea was to colorize images of subjects that no longer exist, such as extinct animals. Below is my first attempt at colorizing a photograph of a Tasmanian tiger, which went extinct around 1936:

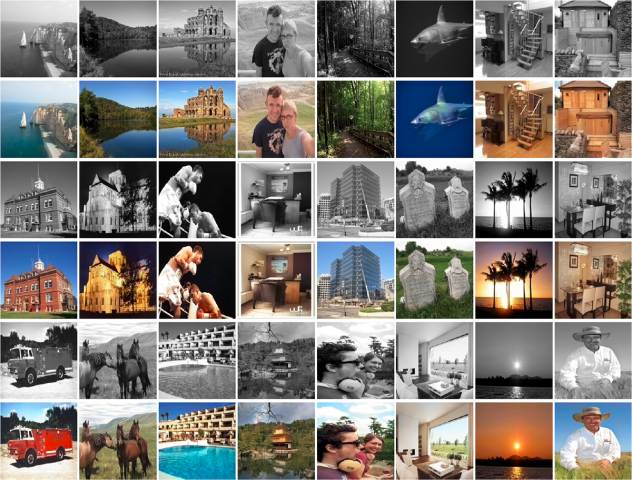

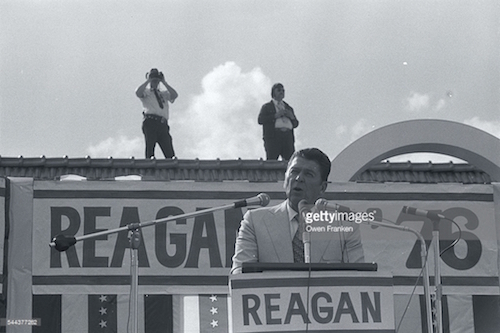

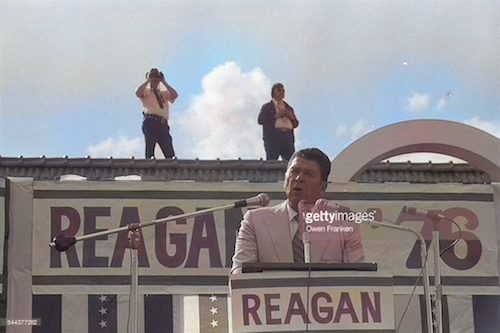

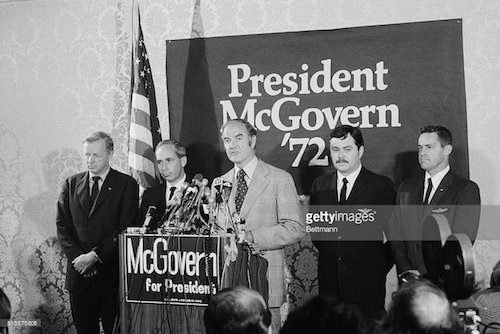

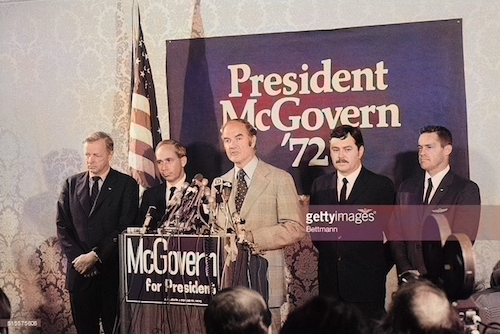

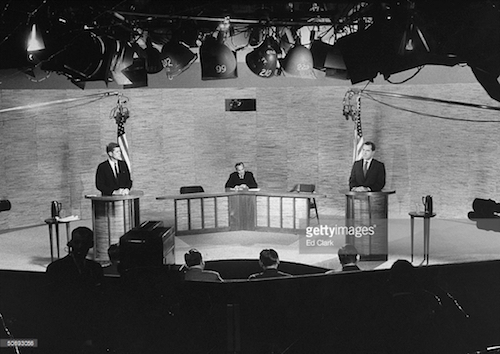

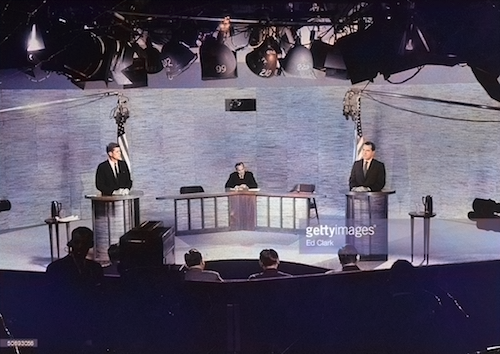

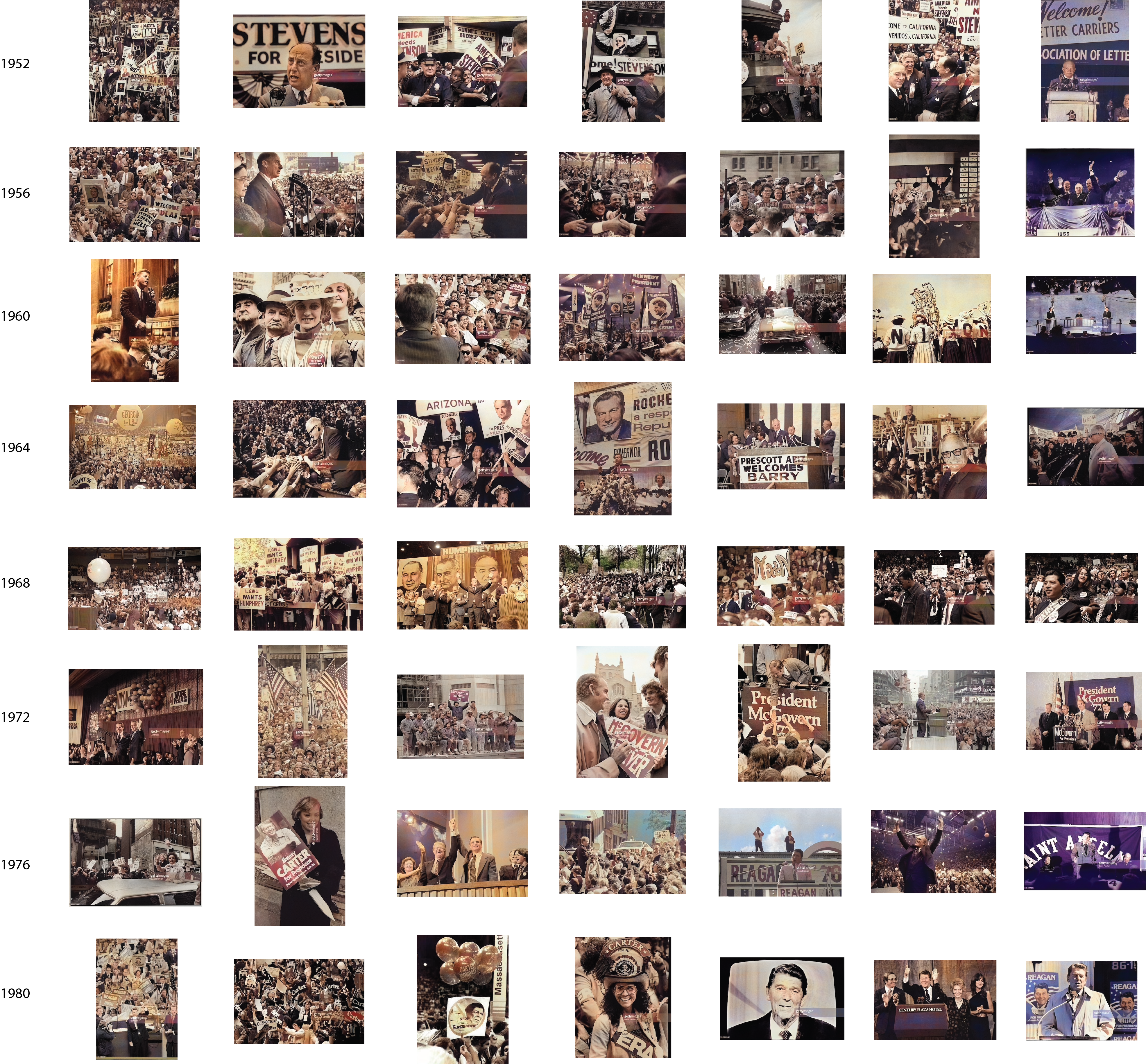

Next, I wondered how color influences our perceptions and decisions. Could colorizing existing images reveal insights that would otherwise go unnoticed? I realized that the colors used in political campaigns are hidden in black-and-white photographs, whereas today the use of red and blue in campaigns is very explicit. This made me curious about how those photographs would look in color. I scraped images of U.S. presidential election campaigns from 1952–1980 from the Getty Images collection and ran the colorization script on them.

While some images worked better than others, the effect of color on the portrayal of the election campaigns was stark. I made a chart to see whether any patterns or trends emerged.

It would also be interesting to arrange these charts by other variables, such as candidate or party. It would also have been better to have a larger collection of election campaign images, which I could have used as a training set to get better results.
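The post doesn’t say which colorization script was used, so what follows is only a sketch under an assumption. Many learned colorizers work in the CIE Lab color space. In that space, a grayscale photo supplies only the lightness channel L, and the model has to predict the two color channels a and b. The helper `rgb_to_lab` is hypothetical and is not part of any script mentioned here. It converts an 8-bit sRGB pixel to Lab, which lets you check that a gray pixel carries no color information at all.

```python
from typing import Tuple

# Assumption: the colorizer works in CIE Lab space. This is common for
# learned colorizers but is not confirmed for the script used here.

# sRGB (D65) -> CIE XYZ matrix; the rows give X, Y and Z.
M = ((0.4124, 0.3576, 0.1805),
     (0.2126, 0.7152, 0.0722),
     (0.0193, 0.1192, 0.9505))
# Reference white = XYZ of sRGB white under the same matrix, so gray
# pixels map to a = b = 0 up to floating-point rounding.
WHITE = tuple(sum(row) for row in M)


def _srgb_to_linear(c: float) -> float:
    """Undo the sRGB transfer curve for one channel in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t: float) -> float:
    """CIE Lab nonlinearity: cube root, with a linear segment near zero."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def rgb_to_lab(r: int, g: int, b: int) -> Tuple[float, float, float]:
    """Hypothetical helper: convert an 8-bit sRGB pixel to (L*, a*, b*)."""
    lin = [_srgb_to_linear(v / 255) for v in (r, g, b)]
    # Linear RGB -> XYZ, one matrix row per output component.
    x, y, z = (sum(m * c for m, c in zip(row, lin)) for row in M)
    fx, fy, fz = (_f(v / w) for v, w in zip((x, y, z), WHITE))
    l_star = 116 * fy - 16     # lightness: all a grayscale photo provides
    a_star = 500 * (fx - fy)   # green-red axis: must be predicted
    b_star = 200 * (fy - fz)   # blue-yellow axis: must be predicted
    return l_star, a_star, b_star


# A mid-gray pixel: a* and b* come out as (almost exactly) zero.
print(rgb_to_lab(128, 128, 128))
```

For any gray pixel (r = g = b), a* and b* are zero up to rounding, so a grayscale ultrasound gives a model nothing but lightness to work with. Any color the model adds has to come from what it saw during training. This is consistent with the critique’s explanation below of why the debate photos colorized well but the fetus images did not.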

Anonymous comments from the group critique, 4/13/2017:

The conceptual ideation & desires driving this work are interesting, and well explained in depth.

You really stepped up your game!

Very poetic

Great explanation, terrific research and presentation of references +

I feel like this piece needs a sharp edge of some sort — you have done the research really well, and know what you want, now you just need to turn it into a piece and direct your energy into one direction. +

^ feels like a strong preface to additional work +

Wonderful chart – colorization through the decades

Was the network trained on vintage images? They have color shifts that are similar to early color film stocks, not super true to life colors.

I love how fluid your process was. One thing led to another and it felt like you were exploring.

Do you know how this works? Why would it colorize the debate so well but not colorize the fetus/electron microscope stuff at all?

-> because it has seen people and stuff and knows how they are coloured, whereas it has never seen a fetus before (there are no colour fetus images to train the network on)

^ in that case, would be interesting to train it on hand-colorized SEM images and have it colorize them itself

-> that would be cool

Dan Goods – Art + Data exhibition at Pasadena Museum of Art – USC professor reference

Dan Goods: http://www.directedplay.com

http://fraserlab.usc.edu/mri/