One hundred balls – One trajectory

The laws of physics can often seem mysterious. Mechanics in particular, the way objects move, is not always intuitive. The human eye cannot look into the past, and it often needs the help of equations, sketches or videos to capture a movement it has just seen.

In this project I decided to document and capture the simple event of a ball thrown in the air. My goal here was to recreate the effect seen in the picture above: to get a sense of the ball’s trajectory. But I wanted to break free from the constraint of a still camera, so I decided to use a moving camera mounted on a robot arm.

Inspirations

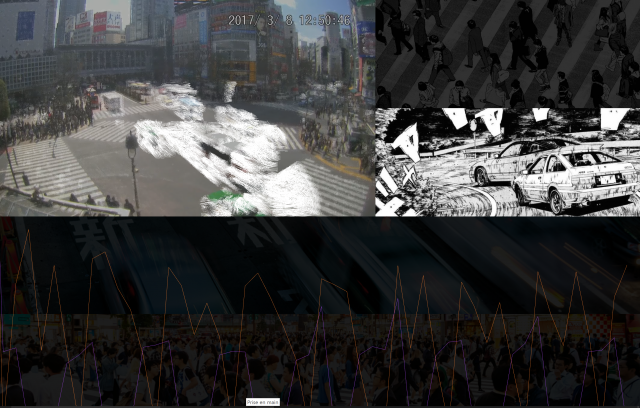

For me, this project started with the desire to work with the robot arm in the Studio. The work of Gondry then inspired me: if a camera is mounted on the robot and the robot moves in a loop, superimposing the different takes keeps the backgrounds identical while the foreground actions seem to happen simultaneously, even though they were shot at different times.

Gondry / Minogue – Come into my world

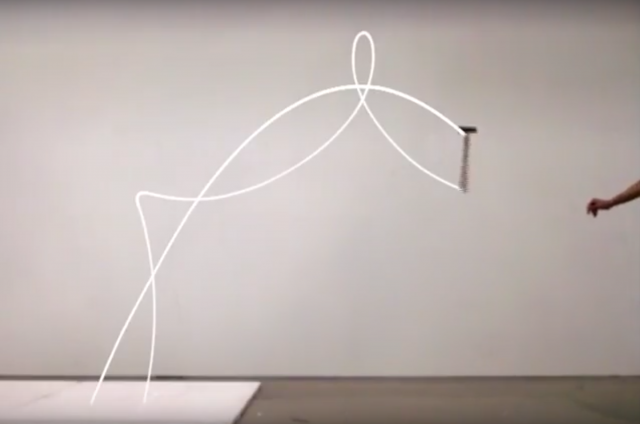

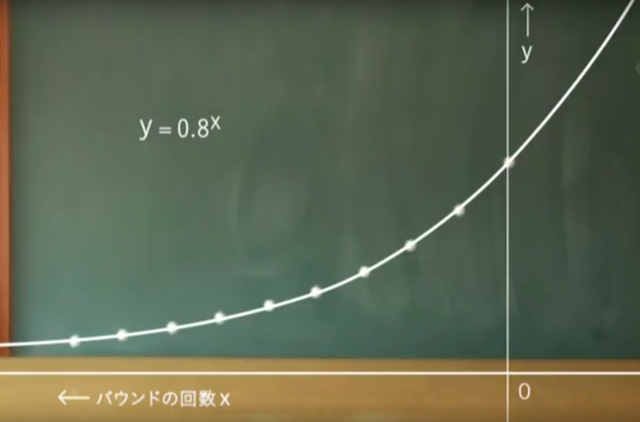

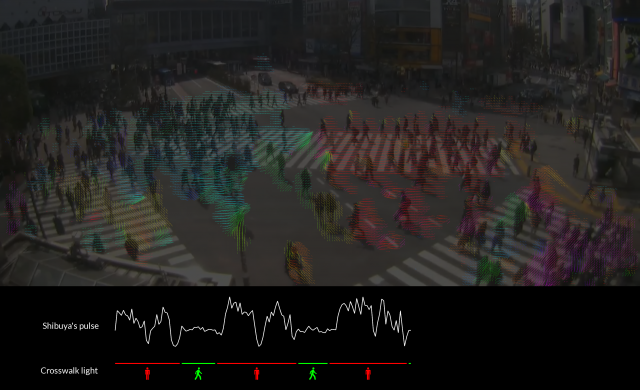

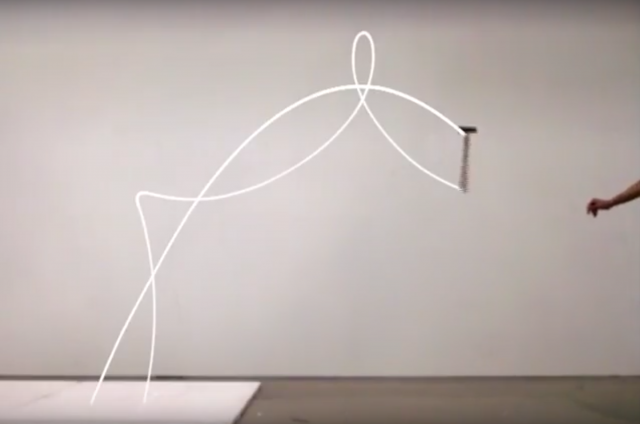

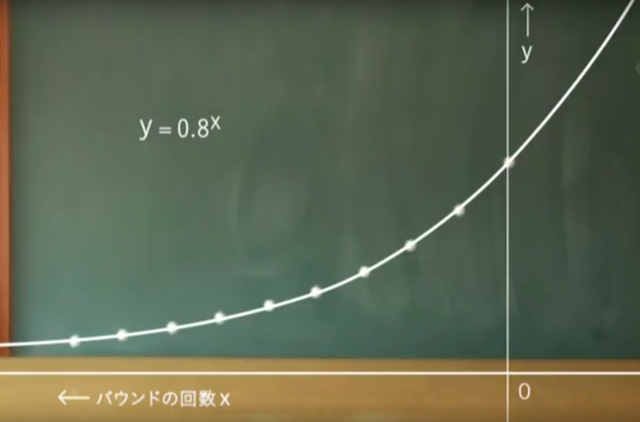

I then decided to apply this technique to show how mechanical trajectories actually unfold in the world, as Masahiko Sato had already done in his “Curves” video.

Masahiko Sato – Curves

The output would then be similar to the initial photo I showed, but with a moving camera that lets the viewer see the ball up close.

Process

Let me explain my process in more detail.

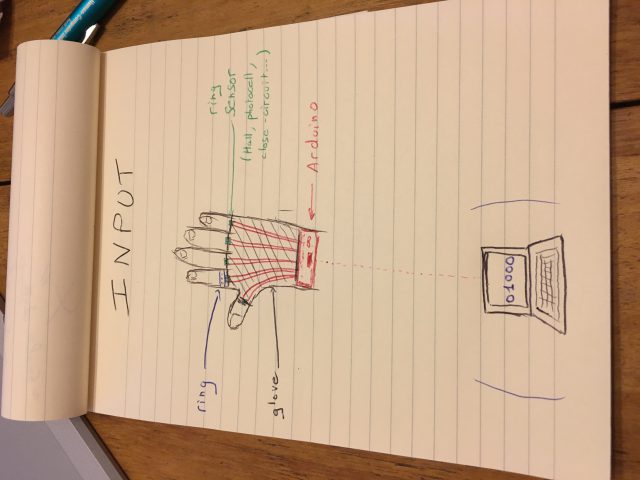

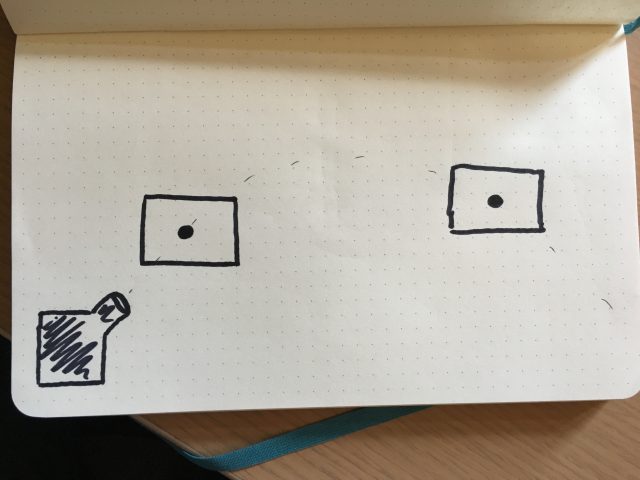

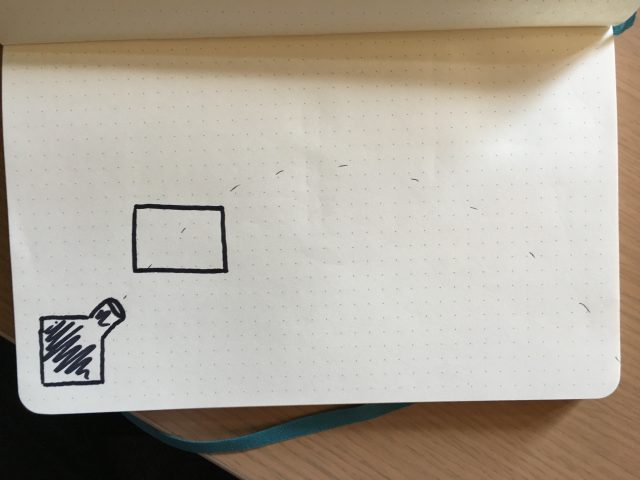

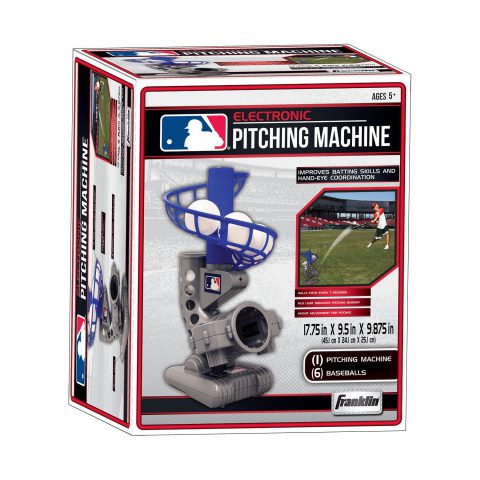

The first part of the setup would consist of a ball launcher throwing balls that follow a consistent trajectory.

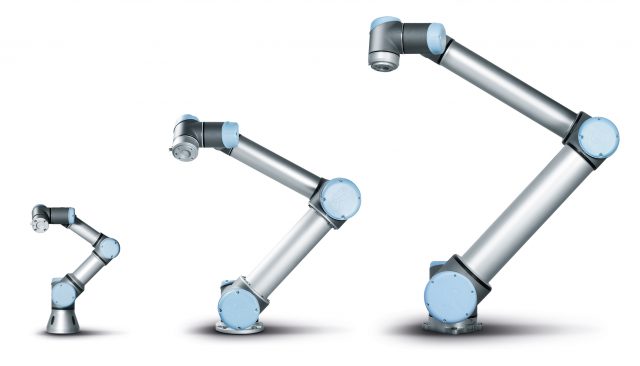

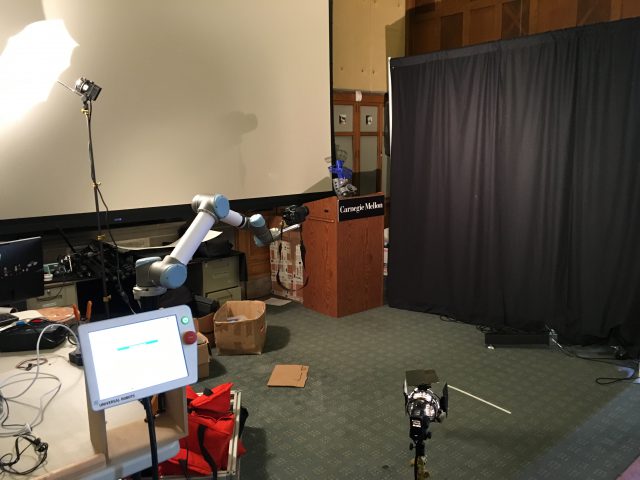

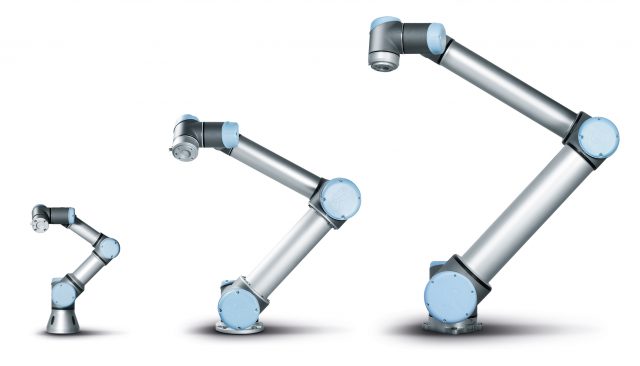

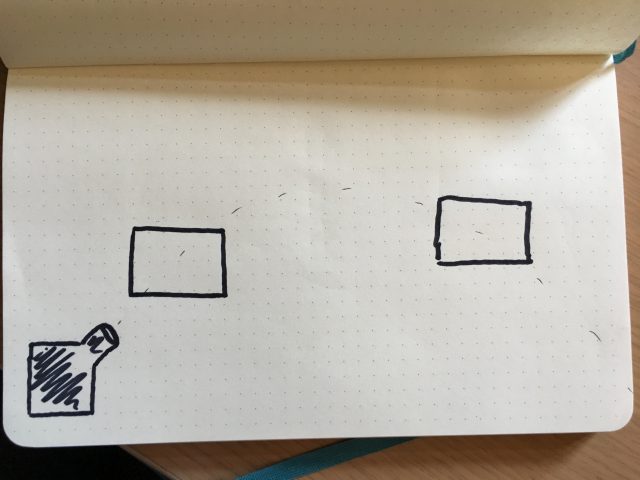

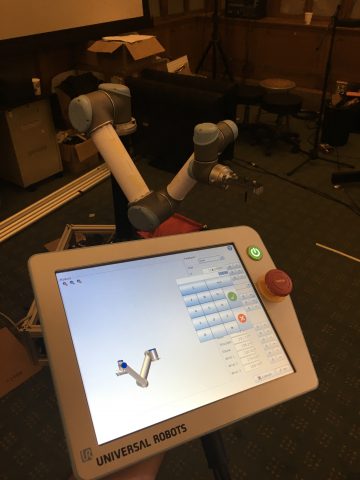

I would then put a camera on the robot arm.

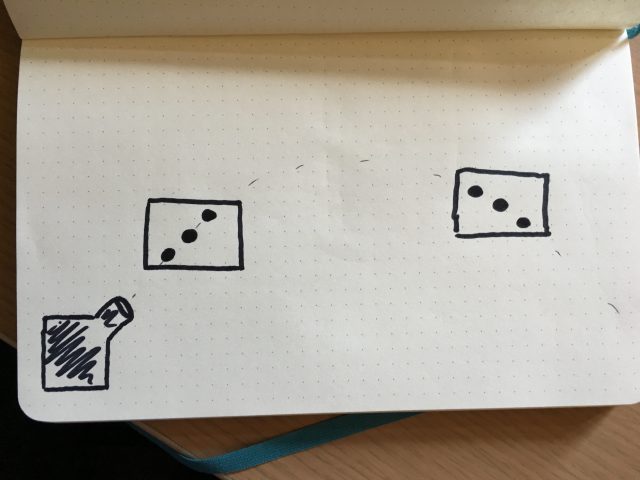

I would then have the robot arm move in a loop following the trajectory of the balls.

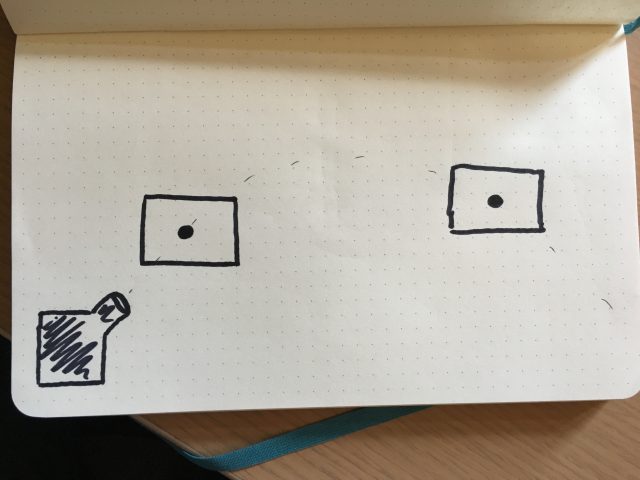

I would then throw a ball with the launcher. The robot (and camera) would follow the ball and keep it in the center of the frame.

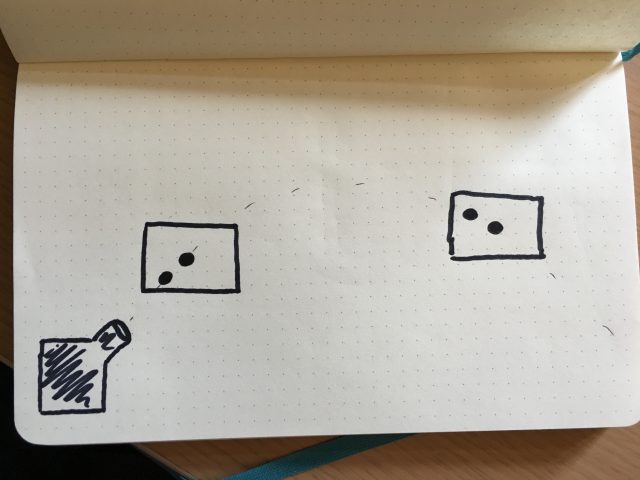

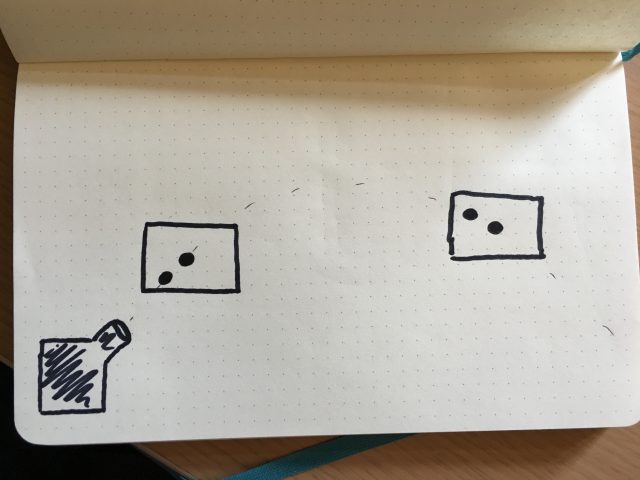

The robot would then start another loop and another ball would be thrown, but with a slight delay relative to the previous throw. The ball would then appear slightly off the center of the frame.

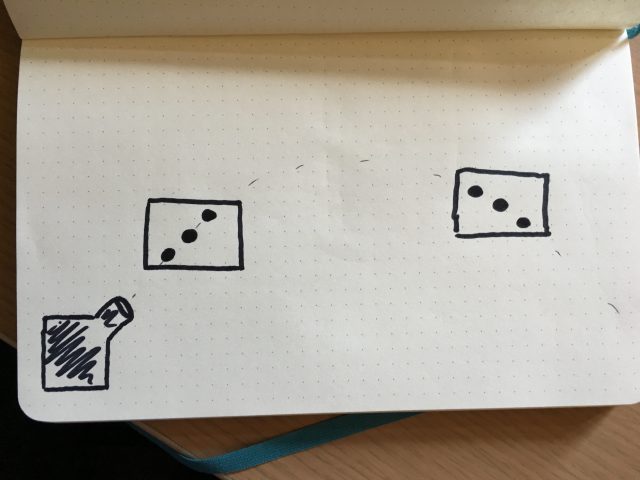

Repeating the process and blending the different takes together would do the trick: the whole trajectory would appear to move dynamically.

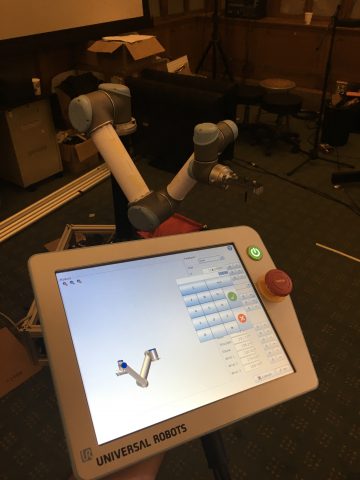
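The timing idea behind this process can be checked with a little arithmetic. The sketch below is idealized and hypothetical: it assumes a drag-free parabola, a camera whose aim point retraces the ball’s path perfectly on every loop (the plan described above, not what the robot finally managed), and made-up values for the launch speed, angle and delay step (`V0`, `ANGLE`, `STEP`), none of which come from the project. For a ball thrown `delay` seconds late, its offset from the frame center at loop time `t` is the difference between where that late ball is and where the camera points.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def ball_position(t, v0, angle_deg):
    """Drag-free projectile position (x, y) in meters, t seconds after launch."""
    a = math.radians(angle_deg)
    if t < 0:
        return (0.0, 0.0)  # not launched yet: the ball is still at the launcher
    return (v0 * math.cos(a) * t, v0 * math.sin(a) * t - 0.5 * G * t * t)


def offset_in_frame(t, delay, v0, angle_deg):
    """Ball position relative to the camera's aim point at loop time t.

    Hypothetical model: on every loop the camera aims at ball_position(t),
    while this loop's ball was launched `delay` seconds late, so it is at
    ball_position(t - delay).
    """
    cx, cy = ball_position(t, v0, angle_deg)
    bx, by = ball_position(t - delay, v0, angle_deg)
    return (bx - cx, by - cy)


# Hypothetical parameters: 5 m/s launch at 60 degrees, each loop thrown 0.05 s later.
V0, ANGLE, STEP = 5.0, 60.0, 0.05
for k in range(4):
    dx, dy = offset_in_frame(0.3, k * STEP, V0, ANGLE)
    print(f"loop {k}: offset from frame center = ({dx:+.3f} m, {dy:+.3f} m)")
```

The offset grows with each loop, so superimposing the aligned loops lays successive balls out along the path, with the later throws trailing behind the center of the frame.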

Robot Programming

My trouble began when I started programming the robot. Controlling such a machine means writing in a custom language and using inflexible functions, under mechanical constraints that prevent the robot from moving smoothly along a defined path. Moreover, the robot has a maximum speed, and the balls were moving faster than that limit.

As a result, I could not make the robot follow the exact trajectory I wanted; instead, it followed two straight lines that approximated the trajectory of the balls.
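The cost of the two-line approximation can be estimated under assumptions the project does not state: flat ground, no air drag, and lines running from the launch point to the apex and from the apex to the landing point. The function names and the 5 m/s, 60° launch below are hypothetical. The sketch samples the vertical gap between the parabola and the two straight lines; for a parabola with apex height `H`, that gap peaks at a quarter and three quarters of the range, where it equals `H / 4`.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def trajectory_params(v0, angle_deg):
    """Range R, apex height H and flight time T of a drag-free launch on flat ground."""
    a = math.radians(angle_deg)
    vx, vy = v0 * math.cos(a), v0 * math.sin(a)
    T = 2 * vy / G
    return vx * T, vy * vy / (2 * G), T


def parabola_y(x, R, H):
    """Height of the parabola through (0, 0), (R/2, H) and (R, 0)."""
    return 4 * H * x * (R - x) / (R * R)


def two_segment_y(x, R, H):
    """Height of the hypothetical polyline launch -> apex -> landing."""
    return 2 * H * x / R if x <= R / 2 else 2 * H * (R - x) / R


def max_deviation(R, H, samples=1000):
    """Largest vertical gap between parabola and polyline, found by sampling x."""
    return max(
        parabola_y(R * i / samples, R, H) - two_segment_y(R * i / samples, R, H)
        for i in range(samples + 1)
    )


R, H, T = trajectory_params(5.0, 60.0)  # hypothetical launch: 5 m/s at 60 degrees
print(f"range {R:.2f} m, apex {H:.2f} m, flight time {T:.2f} s")
print(f"max gap to two-line path: {max_deviation(R, H):.3f} m (H/4 = {H / 4:.3f} m)")
```

Under these assumptions the worst-case error depends only on the apex height, not on the range, so a flatter throw would keep the ball closer to where the two-line path points the camera.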

Launcher

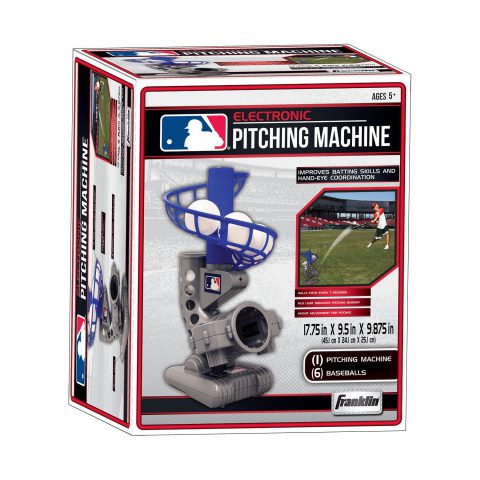

For the launcher, I opted for an automatic pitching machine for kids. It was cheap, but probably too cheap: both the interval between throws and the force of each throw were inconsistent. But now that I had it, I had to work with this machine.

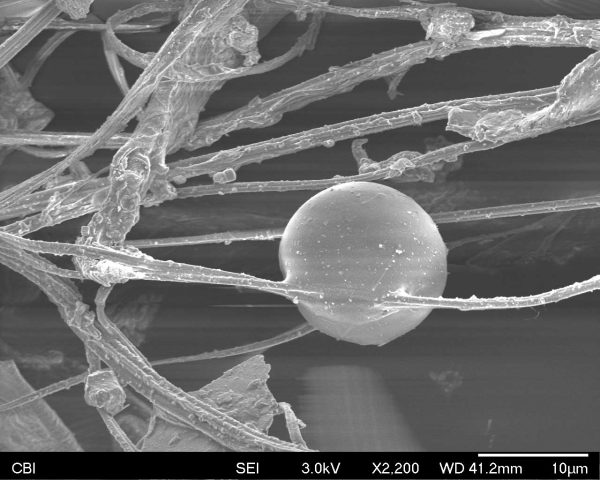

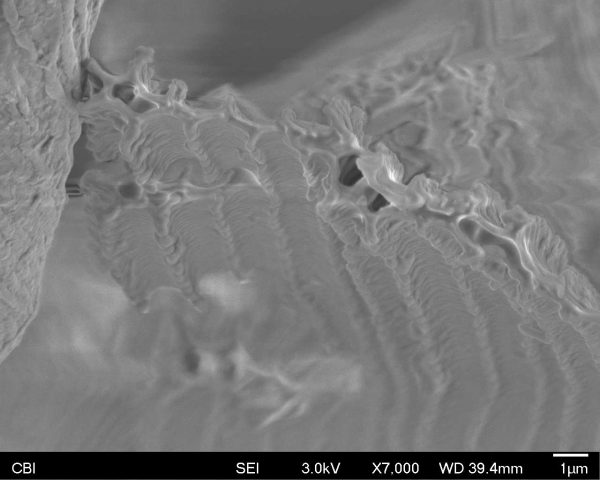

Choosing the balls

Choosing the right balls for the experiment was not easy. I tried using the balls that came with the pitching machine, but they were thrown much too far, and the robot could only move within a range of one meter.

I wanted to use other types of balls, but the machine only worked with balls of a non-standard diameter.

Tennis balls, for their part, landed too close to the launcher.

I then started trying to make the white balls heavier, but it did not really work.

I also tried increasing the diameter of the tennis balls, but the throws were again very inconsistent.

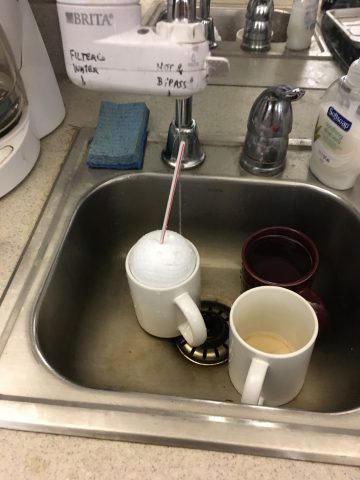

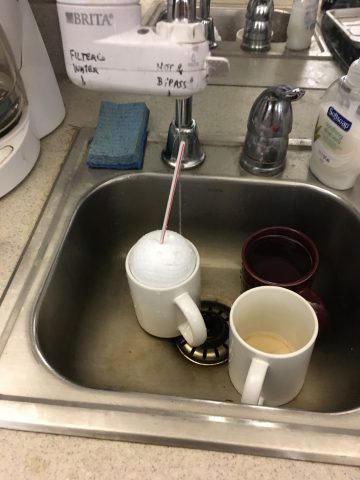

At that point I noticed a hole in the white balls and decided to fill them with water to make them heavier. The hole was too small to inject water with a straw.

I then decided to transfer the water into them myself…

… before realizing there was a much more efficient and hygienic way to do it.

Finally, I caulked the holes so that the balls wouldn’t drip.

In the end, the result was fairly satisfying in terms of distance. However, the throws were still somewhat inconsistent, and the varying amount of water in each ball probably added to the variation in trajectories.

Setting

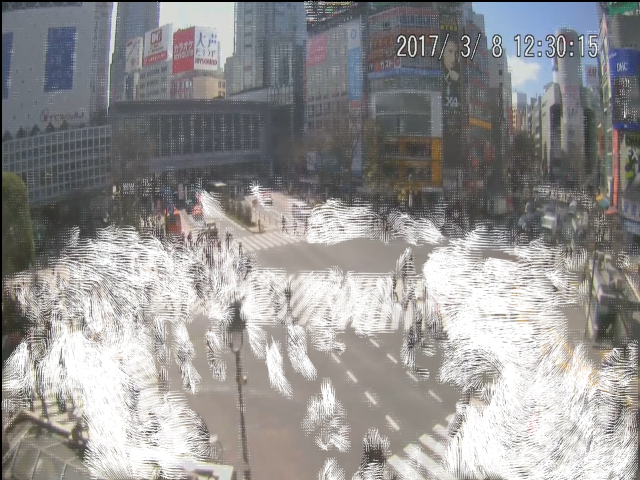

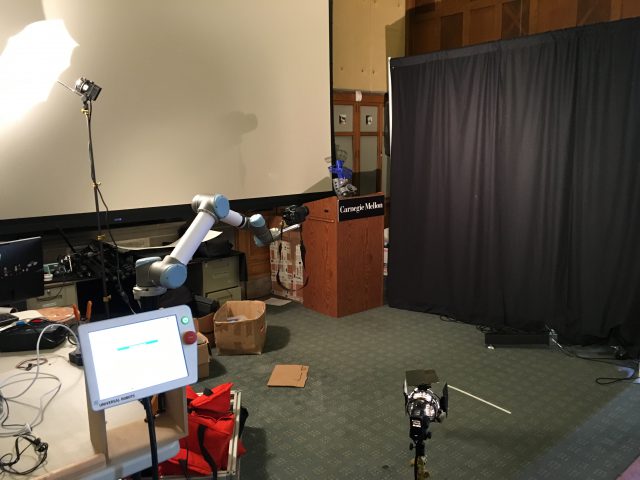

Here is the setup I used to shoot the videos for my project.

And here is what the scene looked like from a different point of view.

Results

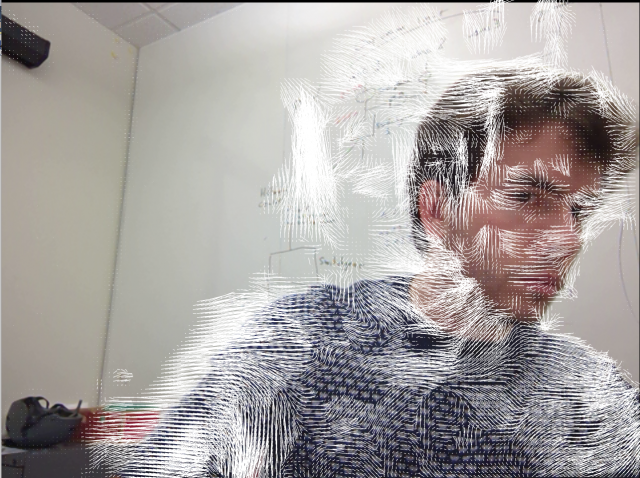

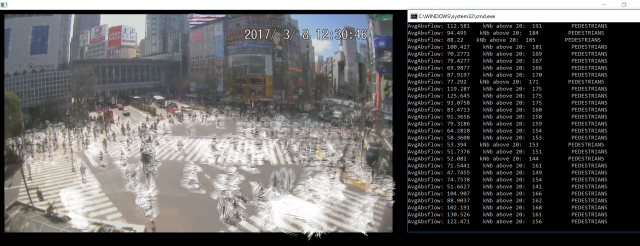

This first video shows some of the “loop footage” clips played one after the other.

The next video shows the result when the clips are superimposed at low opacity, once their backgrounds have been carefully aligned.

Then, using blending modes, I was able to superimpose the different clips so that the balls appear to be thrown at the same time. The video below was actually made with just one launcher, one type of ball and one human.

In this video, I removed the parts where someone was catching balls, to give a sense of an ever-increasing number of balls thrown by the launcher.
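The difference between superimposing at low opacity and using a blending mode can be shown on toy data. The project does not say which blending mode was used; this sketch assumes bright balls over a darker, already-aligned background and uses a “lighten” blend (per-pixel maximum) as one plausible choice. The frames are tiny hypothetical grayscale rows, not real footage.

```python
def average_blend(frames):
    """Superimpose N aligned frames at equal opacity (1/N each): per-pixel mean."""
    n = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(f[r][c] for f in frames) / n for c in range(cols)] for r in range(rows)]


def lighten_blend(frames):
    """'Lighten' blending mode: keep the brightest value at each pixel."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[max(f[r][c] for f in frames) for c in range(cols)] for r in range(rows)]


# Three hypothetical 1x5 grayscale frames (0-255): identical dark background (20)
# and a white ball (255) at a different position in each aligned loop.
frames = [
    [[255, 20, 20, 20, 20]],
    [[20, 20, 255, 20, 20]],
    [[20, 20, 20, 20, 255]],
]
print(average_blend(frames))  # each ball fades: (255 + 20 + 20) / 3 is about 98
print(lighten_blend(frames))  # every ball stays at 255
```

Averaging divides each ball’s contribution by the number of clips, so with many loops the balls fade toward the background. A lighten blend keeps each ball at full brightness, but it also keeps anything else brighter than the background, such as a person moving through the frame.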

Next Steps

- Tweak the blending and the lighting in the final video

- Try to make a video with “one trajectory”

- Shoot the video from a different angle

- Make the balls’ weights more consistent
