Pressure Classifier

Trained pressure classifier on hand and foot prints, captured through a pressure-sensitive, multi-touch input device.

Experimental Capture – Spring 2017

CMU School of Art, Spring 2017 • Prof. Golan Levin

Our event is stepping. We were inspired by the many tools shown during lecture, including the Sensel Morph and the openFrameworks eye and face trackers. We liked the idea of classifying feet, a part of the body that is often forgotten about yet is unique to every individual. We also wanted to capture the event of stepping itself, to see how individuals “step” and distribute pressure through their feet. As a result, we decided to make a foot classifier by training a convolutional neural network.

First, we used Aman’s openFrameworks addon ofxSenselMorph2 to get the Sensel Morph working and to display some footprints. Next, we adapted the example project from the addon so that it takes a picture of the window for every “step” on the Sensel.
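Taking one picture per “step” means deciding when a step begins. The sketch below shows one simple way to do that, assuming each sensor frame has already been reduced to a single total-force number: fire a capture when the force first crosses a threshold, then wait for the foot to lift before arming again. The function name, the threshold value, and the sample readings are all hypothetical, and this is not necessarily how our openFrameworks app decides.

```python
from typing import Iterable, List


def detect_steps(total_forces: Iterable[float], threshold: float = 50.0) -> List[int]:
    """Return the frame indices at which a new step begins.

    A step begins on the first frame whose total force reaches `threshold`
    after a frame that was below it (a rising edge).
    """
    step_frames = []
    pressed = False
    for index, force in enumerate(total_forces):
        if force >= threshold and not pressed:
            step_frames.append(index)  # rising edge: capture an image here
            pressed = True
        elif force < threshold:
            pressed = False  # foot lifted, so arm the detector again
    return step_frames


# Made-up force readings: two separate steps.
forces = [0, 3, 80, 120, 90, 10, 0, 0, 60, 75, 5]
print(detect_steps(forces))  # [2, 8]
```

Triggering only on the rising edge keeps a foot that rests on the sensor for many frames from producing many near-identical images.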

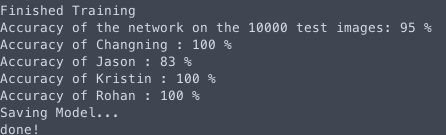

To train our neural network, we needed a lot of data. We recruited 4 volunteers (including ourselves) and collected around 200-250 training images for each person the network would learn to recognize.
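With a data set this small, it helps to hold some images back so the model can be checked on footprints it has never seen. Below is a minimal sketch of a per-person split. The 20% validation fraction, the folder layout, and the person names are assumptions for illustration, not a record of how we organized our files.

```python
import random
from typing import Dict, List, Tuple

Sample = Tuple[str, str]  # (image path, person label)


def split_dataset(
    images_by_person: Dict[str, List[str]],
    validation_fraction: float = 0.2,
    seed: int = 0,
) -> Tuple[List[Sample], List[Sample]]:
    """Shuffle each person's images and hold out a fraction for validation."""
    rng = random.Random(seed)  # fixed seed so the split is repeatable
    train, validation = [], []
    for person, paths in sorted(images_by_person.items()):
        shuffled = list(paths)
        rng.shuffle(shuffled)
        n_val = int(len(shuffled) * validation_fraction)
        validation += [(path, person) for path in shuffled[:n_val]]
        train += [(path, person) for path in shuffled[n_val:]]
    return train, validation


# Hypothetical layout: 4 people with 200 images each.
data = {
    f"person_{i}": [f"data/person_{i}/{n:04d}.png" for n in range(200)]
    for i in range(4)
}
train, validation = split_dataset(data)
print(len(train), len(validation))  # 640 160
```

Splitting per person rather than over the whole pool keeps every person represented in both sets.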

We used PyTorch, a machine learning library for Python. It took us a while to download and run the sample code, but with some help from Aman we managed to get it to train on some of our own data sets. We ran a small piece of code on the Sensel that captures each footprint through a simple gesture, which let us gather our data much faster. We used our friend’s GPU-enabled desktop to train the neural net, which greatly reduced the time we spent developing the model.

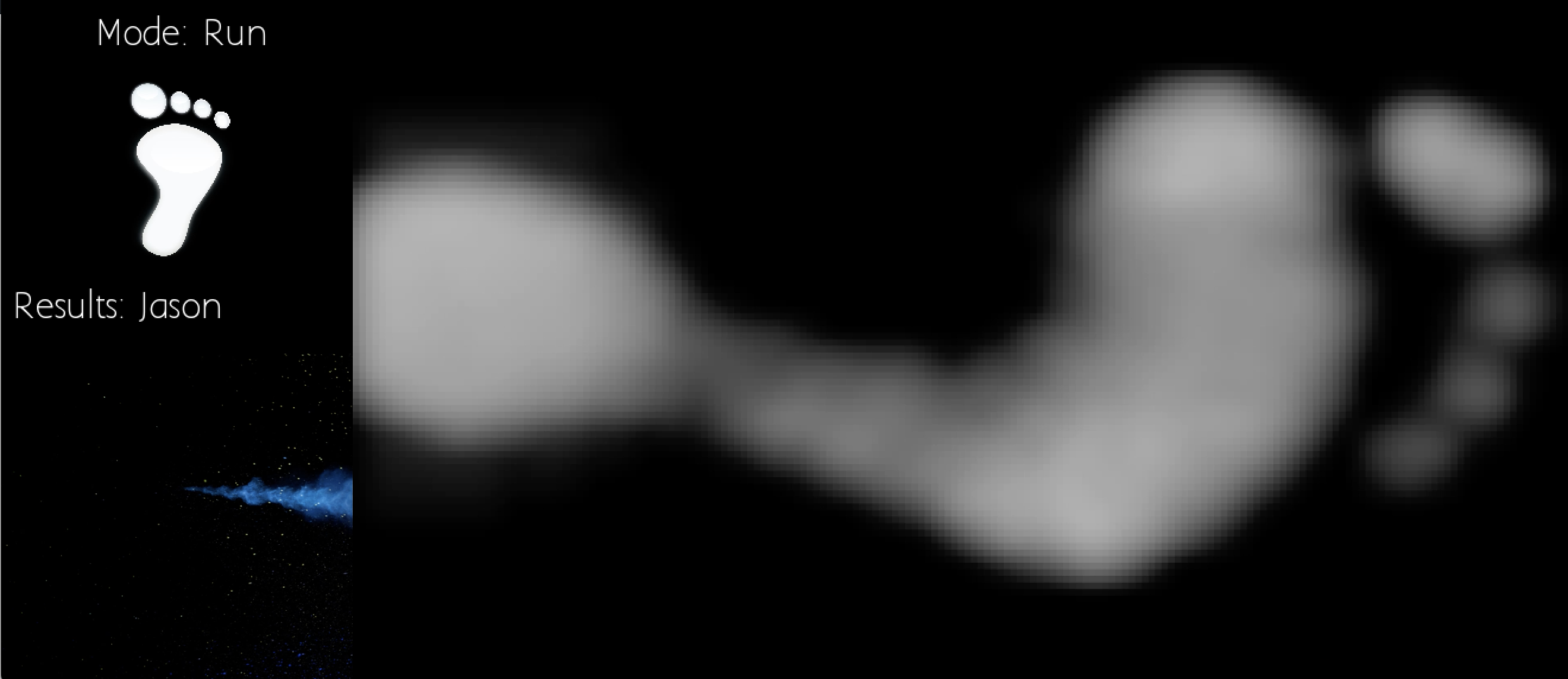

To put everything together, we combined our openFrameworks app with our Python script, which takes an image and detects whose foot it is. We created 2 modes in the app: train mode, for collecting data, and run mode, for classifying someone’s foot in real time using a saved trained model. In run mode, the app displays its prediction after every “step”. In train mode, the app saves a training image after every “step”.
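The two modes boil down to a small piece of routing logic, sketched here in Python for brevity. Our actual app is an openFrameworks program that hands images to a Python script, so the class name, the file naming scheme, and the `classify` callback below are all hypothetical stand-ins.

```python
from pathlib import Path
from typing import Callable, Optional


class StepHandler:
    """Routes each captured step image according to the active mode."""

    def __init__(self, mode: str, data_dir: Path,
                 classify: Optional[Callable[[bytes], str]] = None) -> None:
        if mode not in ("train", "run"):
            raise ValueError(f"unknown mode: {mode!r}")
        if mode == "run" and classify is None:
            raise ValueError("run mode needs a classify function")
        self.mode = mode
        self.data_dir = Path(data_dir)
        self.classify = classify
        self.saved = 0  # number of training images written so far

    def on_step(self, image: bytes, label: Optional[str] = None) -> Optional[str]:
        """Save the image (train mode) or return a prediction (run mode)."""
        if self.mode == "train":
            if label is None:
                raise ValueError("train mode needs a label for the image")
            folder = self.data_dir / label
            folder.mkdir(parents=True, exist_ok=True)
            (folder / f"{self.saved:04d}.png").write_bytes(image)
            self.saved += 1
            return None
        return self.classify(image)
```

In run mode, `classify` would wrap whatever loads the saved model and returns a name; in train mode, each step simply lands in a folder named after the person standing on the sensor.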

Overall, we were really happy with our results. Although the app did not predict every footprint correctly, it was right about 85-90% of the time when distinguishing between 4 people, and this is with only 200-250 training images per person, which is pretty darn good.
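For anyone who wants to measure accuracy like this more carefully, here is a small sketch that computes overall and per-person accuracy from a list of true and predicted labels. The labels in the example are invented purely to show the arithmetic; they are not measurements from our project.

```python
from collections import Counter
from typing import Dict, Sequence


def accuracy(true_labels: Sequence[str], predicted_labels: Sequence[str]) -> float:
    """Fraction of predictions that match the true label."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("need at least one label")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


def per_person_accuracy(true_labels: Sequence[str],
                        predicted_labels: Sequence[str]) -> Dict[str, float]:
    """Accuracy computed separately for each person's footprints."""
    totals = Counter(true_labels)
    correct = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    return {person: correct[person] / totals[person] for person in totals}


# Invented labels for four hypothetical people, two steps each.
true = ["p1", "p1", "p2", "p2", "p3", "p3", "p4", "p4"]
pred = ["p1", "p1", "p2", "p3", "p3", "p3", "p4", "p1"]
print(accuracy(true, pred))             # 0.75
print(per_person_accuracy(true, pred))  # {'p1': 1.0, 'p2': 0.5, 'p3': 1.0, 'p4': 0.5}
```

For context, guessing at random between 4 equally represented people would be right about 25% of the time.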

We want to use the Sensel touch sensor to capture footsteps and train a model that can detect a person’s unique footprint. Our event is “being stepped on”, or walking.

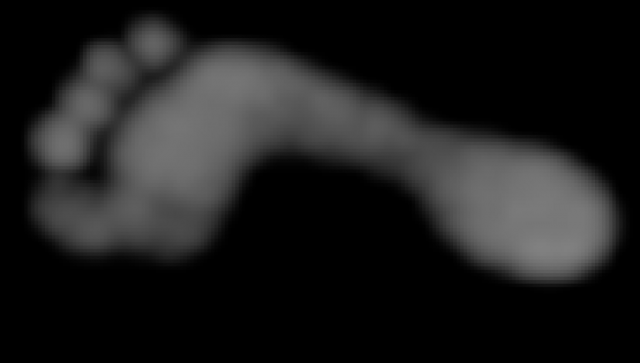

We are using image recognition/processing to train our neural net. To do that, we first need to get images of footprints from the Sensel. We used Aman’s ofxSenselMorph2 to display the information the Sensel is receiving, and adapted the script to take a screenshot of the window on a specific key press.

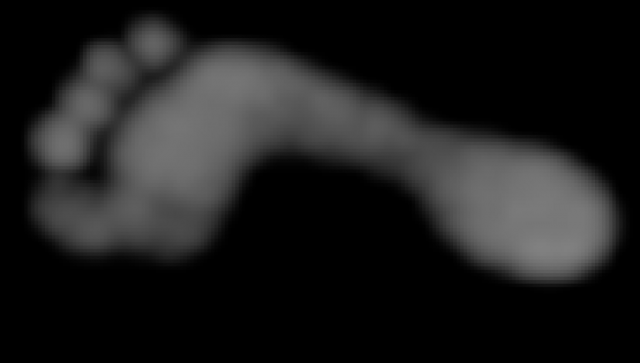

Kristin’s foot:

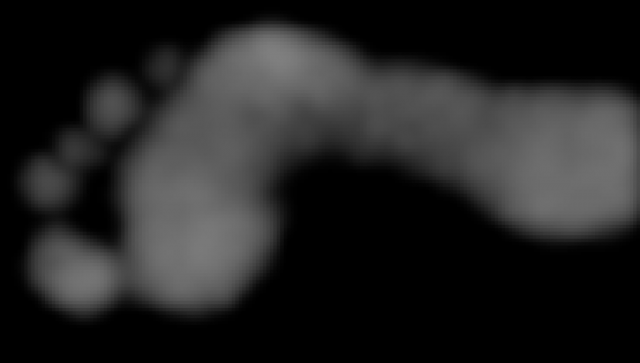

Jason’s foot:

We did some research on convolutional neural networks, which we plan to use in our foot classifier. We have looked into which library to use, and we’re currently looking at TensorFlow as our main candidate. (If anybody knows of a better or easier CNN library to use, we are completely open to suggestions.)

My event is the moment when things break. I want to track the patterns and paths of the pieces of broken objects. I am thinking of breaking many different objects and capturing how they break, perhaps with a 360 camera placed inside the object to begin with (but I need to make sure the camera doesn’t break, so this probably won’t work), or maybe by taking a long-exposure shot of the process. My media object would be a series of photos of different objects breaking.

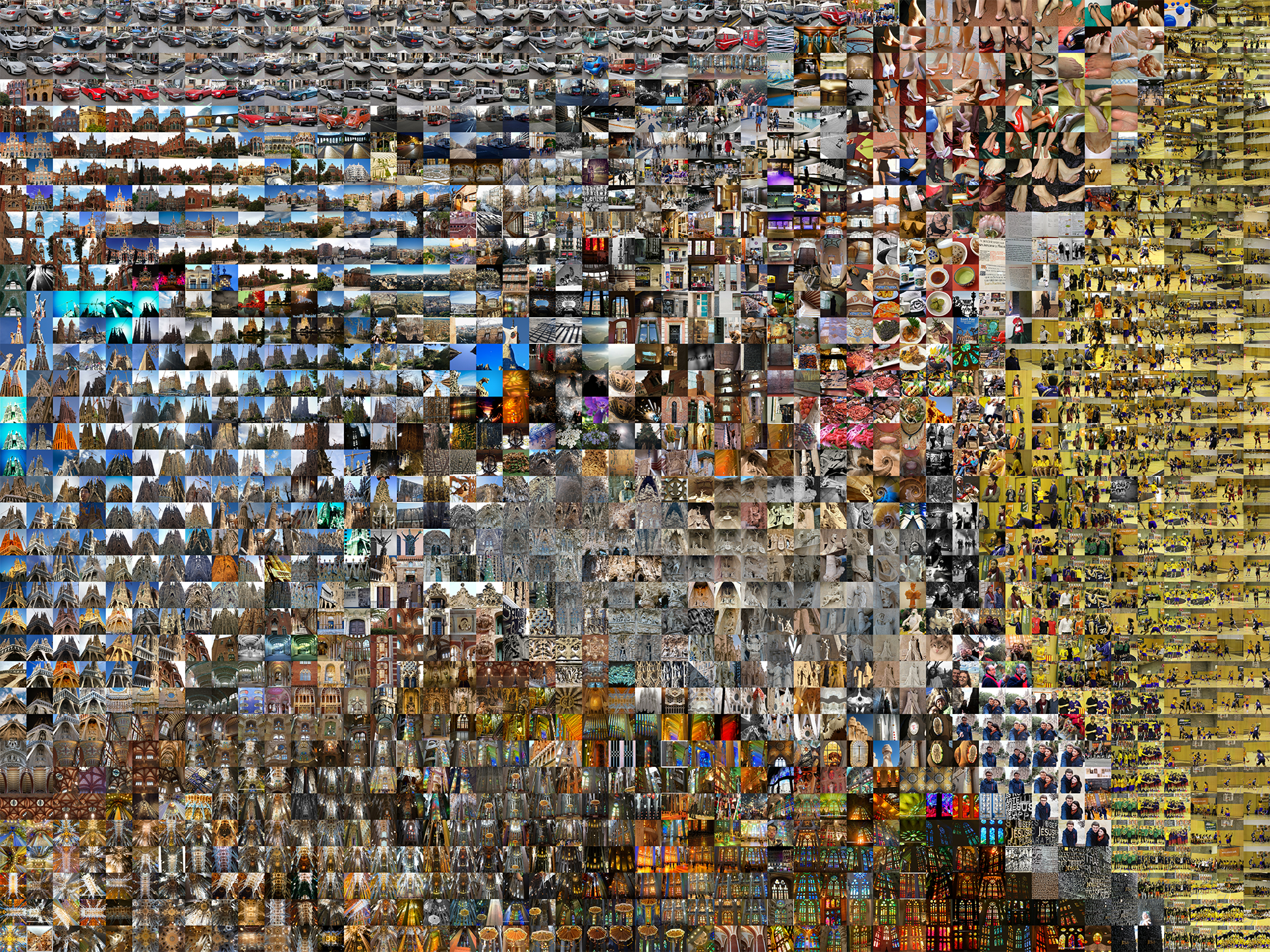

I was really inspired by Philipp Schmitt’s Camera Restricta and wanted to use a similar method of crowdsourcing data using geolocations. So I made a machine that, given a latitude/longitude coordinate and a picture taken at that location, crowdsources pictures that people have posted online and fuses them into a single picture of the location.

First, I wrote a Python script using the Temboo/Flickr API to extract all the picture information for a given latitude/longitude coordinate. I was able to get thousands of images for a single coordinate if the location is a popular spot, which was exactly what I wanted. Next, I wrote another Python script to download all these images and save them on my laptop. However, I realized that it wasn’t realistic to use all the images from one location, since people take such drastically different pictures. I had to figure out a way to pick out the pictures that looked closest to the “picture I am taking”. With Golan’s help, I was able to use the openFrameworks addon ofxTSNE to create a grid that sorts all the photos by similarity. Finally, I used a 7×7 grid to find the closest 49 pictures in the t-SNE grid to a given image (ideally the image that I take), and put this batch of pictures into Autopano.
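The last step, picking the 49 neighbors, can be sketched as cutting a 7×7 window out of the sorted grid around the query image. This is a minimal illustration that assumes the grid is already available as a list of rows of file names and that the query image's row and column are known. The function name and the choice to shift the window inward at the edges are my assumptions, not something ofxTSNE provides.

```python
from typing import List


def window_around(grid: List[List[str]], row: int, col: int, size: int = 7) -> List[str]:
    """Return the size x size block of grid cells centered on (row, col).

    Near an edge the window is shifted inward so that it always holds
    size * size images.
    """
    n_rows, n_cols = len(grid), len(grid[0])
    if size > n_rows or size > n_cols:
        raise ValueError("grid is smaller than the requested window")
    half = size // 2
    top = min(max(row - half, 0), n_rows - size)
    left = min(max(col - half, 0), n_cols - size)
    return [grid[r][c]
            for r in range(top, top + size)
            for c in range(left, left + size)]


# Hypothetical 10x10 grid of sorted photos, with the query image at (5, 5).
grid = [[f"img_{r}_{c}.jpg" for c in range(10)] for r in range(10)]
batch = window_around(grid, 5, 5)
print(len(batch))  # 49
```

Because the grid places similar photos next to each other, the cells surrounding the query image are a cheap stand-in for a true nearest-neighbor search.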

The results turned out better than I thought. Some were fairly normal, and just looked like a shitty panorama job, and others turned out super interesting. Below are some results with anti-ghost/simple settings.

Overall, I really enjoyed working on this project and was really impressed with the results. Since I had never worked with Autopano, I did not know what to expect, so it was very rewarding to see results that turned out better than I expected. To expand this project, I hope to create an actual machine that can do this in real time and to figure out a more efficient way to filter through the pictures.

I was inspired by Philipp Schmitt’s Camera Restricta, which uses geotagging to make a camera that only allows the user to take pictures of locations that have not already been photographed by other people. I liked the idea of crowdsourcing images from the internet while using geotags to create a machine or process that can track your location.

My project will use geotagging, and perhaps a GPS, to track your location and compile images that other people have taken at the specific location where you choose to take a picture. So instead of taking a picture through your own lens, you will take a picture through the lenses of all the people around the world who have visited the place you are at. I am still unsure how exactly I want to display the final image; maybe I will put the images through Autopano or some other image processor. I am also debating what the machine will look like physically, either using a Raspberry Pi or making it mobile.
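One possible source of geotagged photos is Flickr, whose public REST method `flickr.photos.search` accepts `lat`, `lon`, and `radius` parameters. The sketch below only builds the request URL and does not send it. The helper name, the 1 km radius, the placeholder API key, and the example coordinate (roughly the CMU campus) are assumptions for illustration.

```python
from urllib.parse import urlencode

FLICKR_REST_ENDPOINT = "https://api.flickr.com/services/rest/"


def build_search_url(api_key: str, lat: float, lon: float,
                     radius_km: float = 1.0, per_page: int = 100,
                     page: int = 1) -> str:
    """Build a flickr.photos.search URL for photos taken near a coordinate."""
    params = {
        "method": "flickr.photos.search",
        "api_key": api_key,
        "lat": f"{lat:.6f}",
        "lon": f"{lon:.6f}",
        "radius": radius_km,
        "radius_units": "km",
        "per_page": per_page,
        "page": page,
        "format": "json",
        "nojsoncallback": 1,  # ask for plain JSON instead of a JSONP wrapper
    }
    return f"{FLICKR_REST_ENDPOINT}?{urlencode(params)}"


# Placeholder key and an example coordinate; nothing is sent over the network.
print(build_search_url("YOUR_API_KEY", 40.4433, -79.9436))
```

Fetching the URL returns one page of results at a time, so collecting every photo for a popular spot would mean looping over the `page` parameter.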

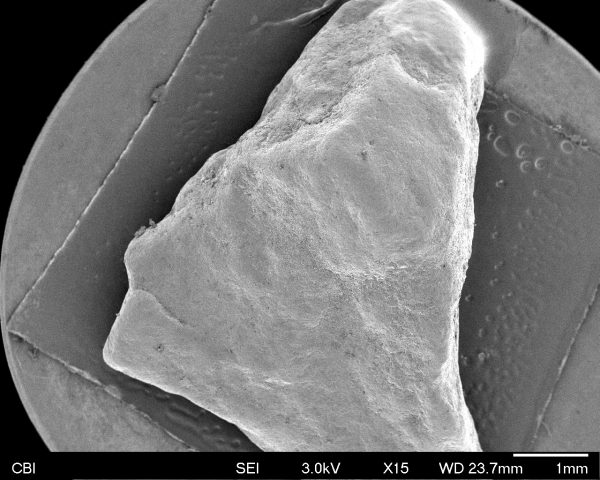

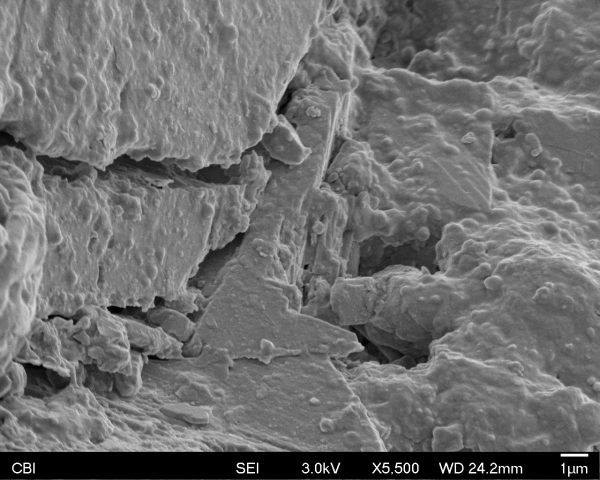

I really enjoyed getting to see things from a view the naked eye could not. I decided to choose something ordinary, because I was curious to see if we could find interesting figures behind very common objects. So I chose a piece of rock I picked up on Forbes near Craig Street. It was interesting to find that the rock had both very rectangular structures and very round structures like the 2 pictures shown below. I also found it very interesting to find different “landscapes” on the surface of the rock. Overall, I was very happy with my results. Hopefully, I will have an opportunity next time to bring in even more interesting objects to see!

Hi everyone! I am a BCSA sophomore studying visual arts and CS. Back in high school I was more of a watercolor/Prismacolor drawing kinda person, but now I am more interested in exploring new media, especially electronic media such as Unity, writing code, computer vision, AR/VR, etc. I am really excited to learn a lot in this class and use technology & code to make art =)