For this final project, Bernie and I decided to tackle cinematography with the robot arm, a step beyond attaching the light. In the end, we created two single-path, multiple-subject clips.

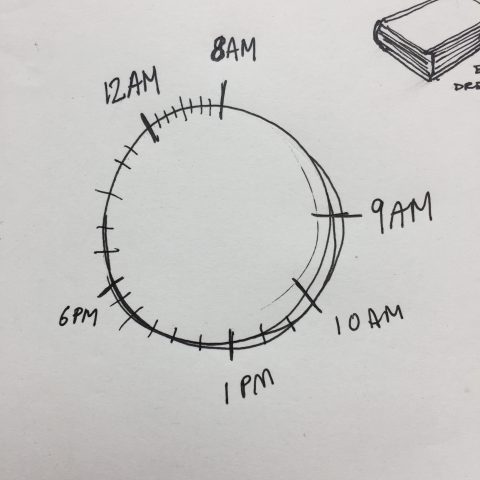

The robot arm runs on a system of manually set waypoints: the positions and orientations the camera passes through. Once the waypoints are in place, the operator sets the speed at which the arm moves and how smoothly it blends from one waypoint to the next.
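To make the idea concrete, here is a minimal Python sketch of how a waypoint path could be played back at a constant speed. It is not the arm's controller software. It assumes straight-line motion between positions and ignores camera direction and blending. The names (`position_at`, `Vec3`) are made up for illustration.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]  # hypothetical (x, y, z) camera position


def position_at(waypoints: List[Vec3], speed: float, t: float) -> Vec3:
    """Return the camera position t seconds into the move.

    Assumes straight-line segments between waypoints traversed at a
    constant speed; direction and corner blending are not modeled.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    remaining = max(0.0, speed * t)  # distance covered so far
    for a, b in zip(waypoints, waypoints[1:]):
        seg = math.dist(a, b)
        if seg > 0 and remaining <= seg:
            f = remaining / seg  # fraction of this segment completed
            return tuple(ai + f * (bi - ai) for ai, bi in zip(a, b))
        remaining -= seg
    return waypoints[-1]  # past the end of the path: hold the last waypoint


path = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
print(position_at(path, speed=0.5, t=3.0))  # (1.0, 0.5, 0.0)
```

Because the output depends only on the waypoints, the speed, and `t`, the same inputs always produce the same path. That determinism is what makes repeated takes line up.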

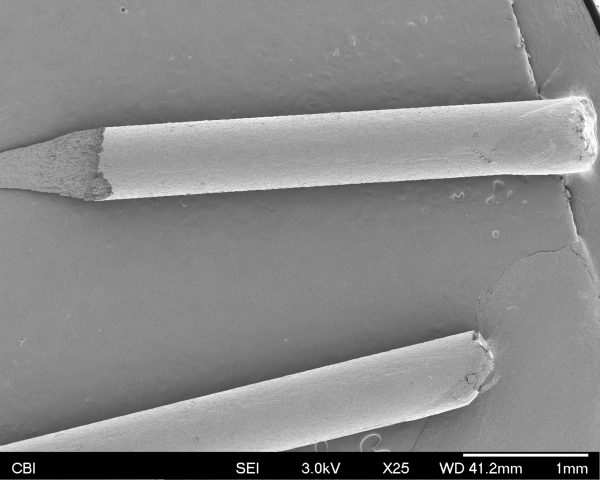

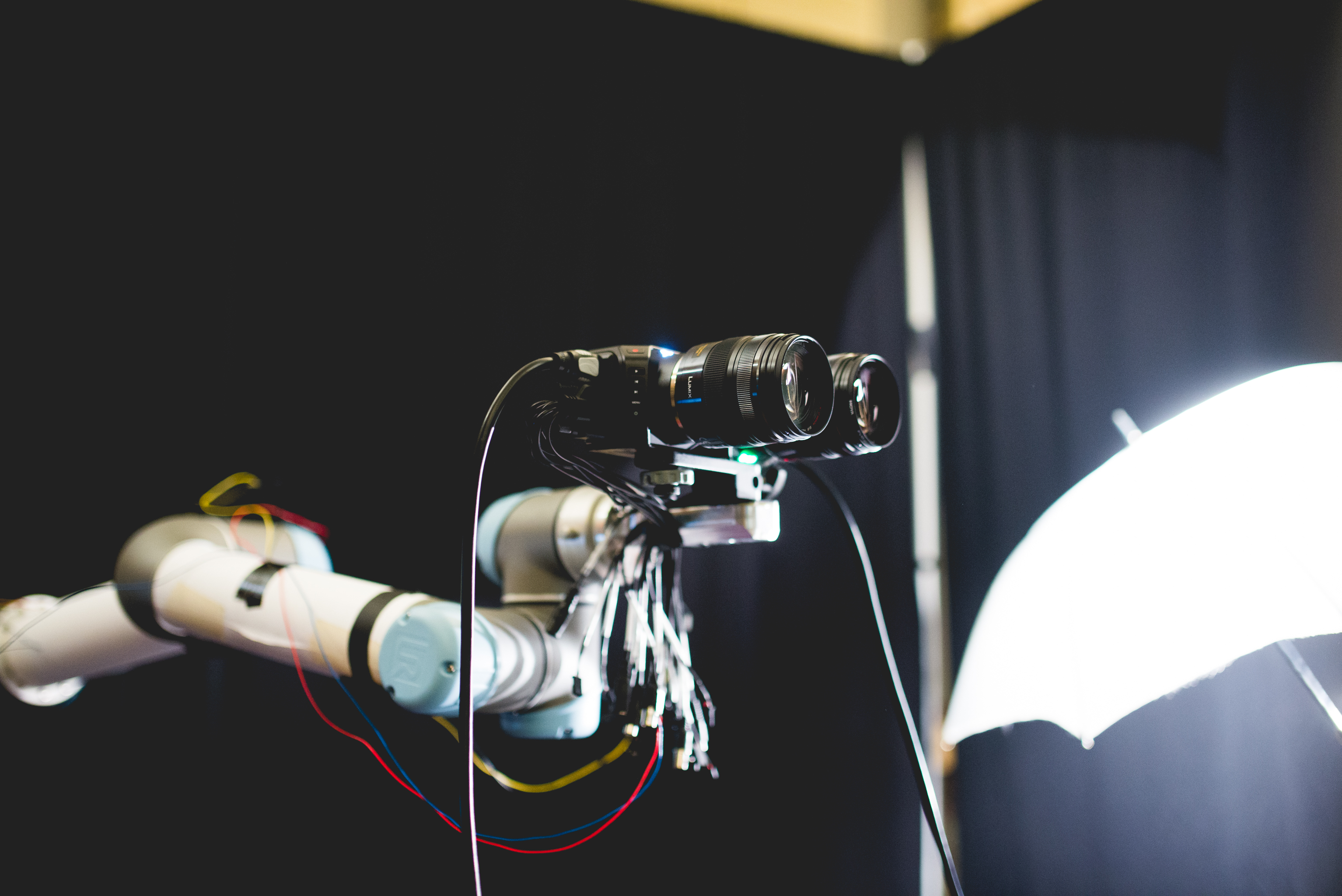

We initially had a stereoscopic setup on the arm to capture video with depth.

However, we soon realized that our setup allowed only a fixed convergence angle between the two cameras. This meant that either the subject always had to stay at a fixed distance from the cameras, or the video would be permanently cross-eyed. Because we were low on time and these constraints were too stifling, we ditched the second camera and moved on.

As you can see, the video doesn’t converge properly, because the cameras could not dynamically converge and diverge as the subject's distance changed.
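The underlying geometry is simple trigonometry. For two cameras toed in toward each other with baseline b (the spacing between the lenses), their optical axes cross at distance d when the convergence angle θ between them satisfies θ = 2·arctan(b / 2d). A fixed θ therefore fixes d, and anything nearer or farther shows up with horizontal disparity. This Python sketch just evaluates that relationship. The 65 mm baseline is an illustrative value, not a measurement of our rig.

```python
import math


def convergence_angle(baseline: float, distance: float) -> float:
    """Angle (radians) between two toed-in cameras whose axes meet at `distance`.

    `baseline` and `distance` must use the same unit (e.g. meters).
    """
    return 2 * math.atan(baseline / (2 * distance))


def convergence_distance(baseline: float, angle: float) -> float:
    """Inverse: the only distance at which a fixed angle converges."""
    return baseline / (2 * math.tan(angle / 2))


baseline = 0.065  # illustrative 65 mm spacing, not our rig's actual value
for d in (0.5, 1.0, 3.0):
    print(f"{d} m -> {math.degrees(convergence_angle(baseline, d)):.2f} deg")
```

The angle shrinks as the subject moves away, so a rig locked at one angle is only correct for one subject distance.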

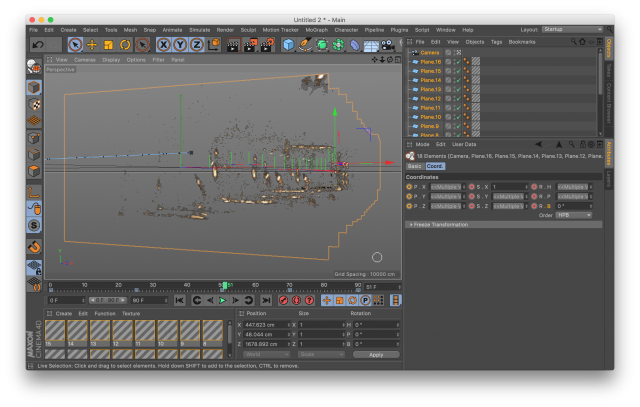

During this project, Bernie and I got hold of the Olympus 14-42mm lens, which has electronic focus and electronic zoom. Now we had computational control over every camera element at once: camera position, direction, aperture, shutter angle, ISO, focus, and zoom. We had created a functional filming machine.
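With every element under software control, the non-positional settings can be treated as one snapshot alongside each waypoint. Here is a hypothetical Python data structure for that. It assumes zoom and focus are what change during a move while exposure stays fixed, and none of the field names come from the camera's or the robot's actual software. It also includes the standard shutter-angle conversion, exposure time = (angle / 360) / frame rate, so a 180° shutter at 24 fps gives 1/48 s.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CameraSettings:
    """Hypothetical snapshot of the non-positional camera controls."""
    f_number: float       # aperture
    shutter_angle: float  # degrees
    iso: int
    focus_m: float        # focus distance in meters
    focal_mm: float       # zoom, within the 14-42 mm range


def exposure_time(shutter_angle: float, fps: float) -> float:
    """Seconds per frame the shutter is open: (angle / 360) / fps."""
    return (shutter_angle / 360.0) / fps


def interpolate_lens(a: CameraSettings, b: CameraSettings, f: float) -> CameraSettings:
    """Blend focus and zoom from a to b (f clamped to [0, 1]); exposure stays at a's values."""
    f = min(max(f, 0.0), 1.0)
    return replace(
        a,
        focus_m=a.focus_m + f * (b.focus_m - a.focus_m),
        focal_mm=a.focal_mm + f * (b.focal_mm - a.focal_mm),
    )


# Illustrative values only.
wide = CameraSettings(f_number=5.6, shutter_angle=180, iso=400, focus_m=2.0, focal_mm=14)
tight = replace(wide, focus_m=1.0, focal_mm=42)
print(interpolate_lens(wide, tight, 0.5).focal_mm)  # 28.0
print(exposure_time(180, 24))
```

Pose would come from the waypoint path itself; this snapshot only covers what the lens and sensor are doing at the same moment.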

A beautiful aspect of the robot arm is that it can replicate a path exactly. Once we set a series of waypoints, the arm can travel the identical route over and over, as many times as we want.

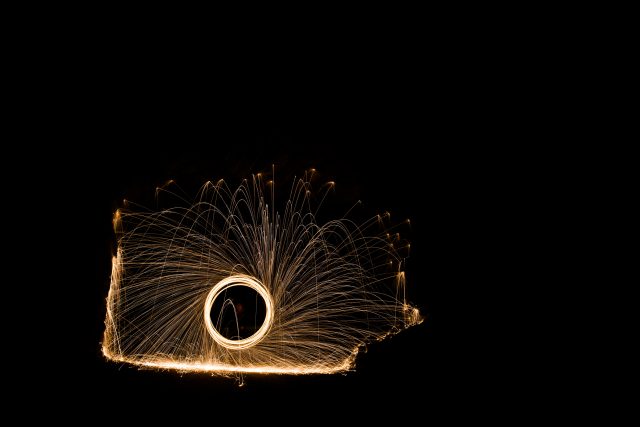

These are four subjects filmed in exactly the same way (path, light, zoom, position).

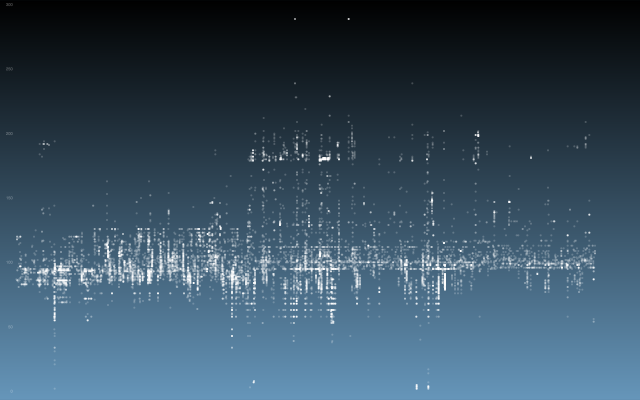

This repeatability lets us create interesting transitions and combinations between clips. We explored two methods: splicing and positional cuts.

This is an example of a spliced video.

Since all four subjects were filmed in the same way, they should be perfectly aligned the whole time, but they are not. This is due to human differences between the takes, and the misalignment gets worse as the video goes on.
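Assuming the splice is a side-by-side composite in which each subject fills one vertical strip of the same frame (other splice layouts are possible), the operation reduces to choosing columns. This plain-Python sketch treats a frame as a list of pixel rows and assumes every take has the same resolution and that frames with the same index come from the same point on the path. The function name is hypothetical.

```python
from typing import List, Sequence

Frame = List[List[int]]  # rows of pixel values; a stand-in for real image data


def splice_strips(frames: Sequence[Frame]) -> Frame:
    """Combine same-index frames from several takes into vertical strips.

    Column x is taken from take x * n // width, so each of the n takes
    owns one contiguous strip. Assumes all frames share one resolution.
    """
    n = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [frames[x * n // width][y][x] for x in range(width)]
        for y in range(height)
    ]


# Four 2x8 "frames", each filled with its take index.
takes = [[[k] * 8 for _ in range(2)] for k in range(4)]
print(splice_strips(takes)[0])  # [0, 0, 1, 1, 2, 2, 3, 3]
```

Any drift between takes appears directly at the seams where strips meet, which is why small differences between the subjects become so visible in a splice.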

This is an example of a positional cut.

As long as the paths are aligned, the cut should carry an interesting continuity of camera motion, even when the subject changes.
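A positional cut can be described as an edit list that switches takes at a frame index but never jumps in time, so the camera keeps moving smoothly while the subject changes. This is a minimal Python sketch assuming every take starts recording exactly when the arm starts moving, so frame i shows the same camera pose in every take. The take names and `cut_every` interval are hypothetical.

```python
from typing import List, Sequence, Tuple

Cut = Tuple[str, int, int]  # (take name, first frame, end frame exclusive)


def positional_cuts(num_frames: int, takes: Sequence[str], cut_every: int) -> List[Cut]:
    """Cycle through takes, cutting every `cut_every` frames at the same frame index.

    Assumes all takes are synchronized to the start of the arm's move,
    so the same frame index means the same point on the path.
    """
    if cut_every <= 0 or not takes:
        raise ValueError("need at least one take and a positive cut interval")
    cuts = []
    for i, start in enumerate(range(0, num_frames, cut_every)):
        end = min(start + cut_every, num_frames)
        cuts.append((takes[i % len(takes)], start, end))
    return cuts


print(positional_cuts(100, ["A", "B", "C", "D"], 30))
# [('A', 0, 30), ('B', 30, 60), ('C', 60, 90), ('D', 90, 100)]
```

Each segment starts where the previous one ended, which is what preserves the sense of one continuous camera move across different subjects.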

Here are our final videos.

BODIES from Soonho Kwon on Vimeo.

Temporary video (not final):

Faces from Soonho Kwon on Vimeo.