Premise/Summary

I started with the idea of going to a graveyard to get scans of graves to turn into music. This eventually evolved into scanning the Numbers Garden at CMU with Ground Penetrating Radar (GPR), thanks to Golan Levin, the Studio for Creative Inquiry, Jesse Stiles, and Geospatial Corporation. I then turned these scans into music, which I placed into a spatialized audio VR experience.

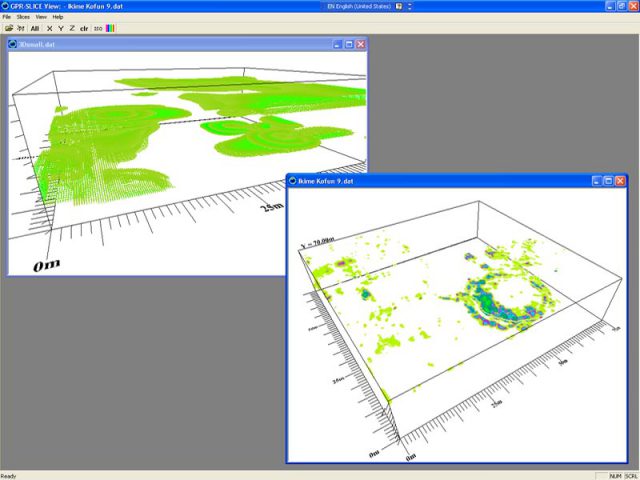

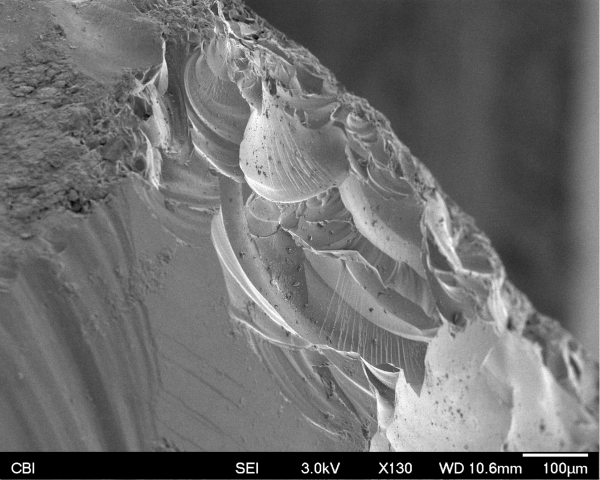

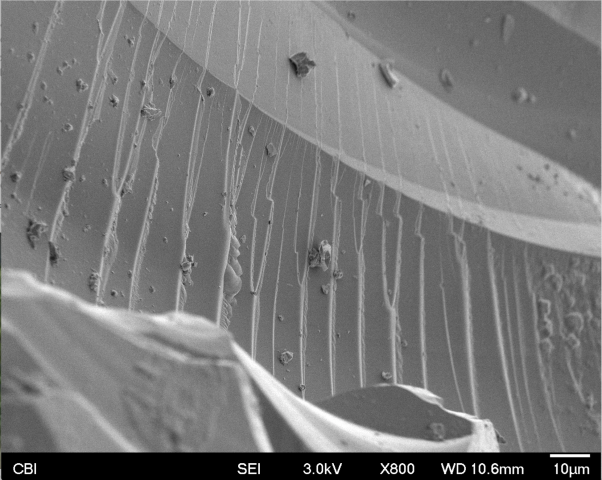

Ground Penetrating Radar Overview

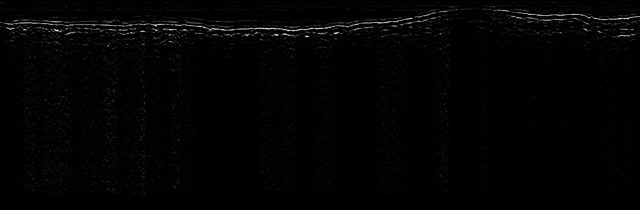

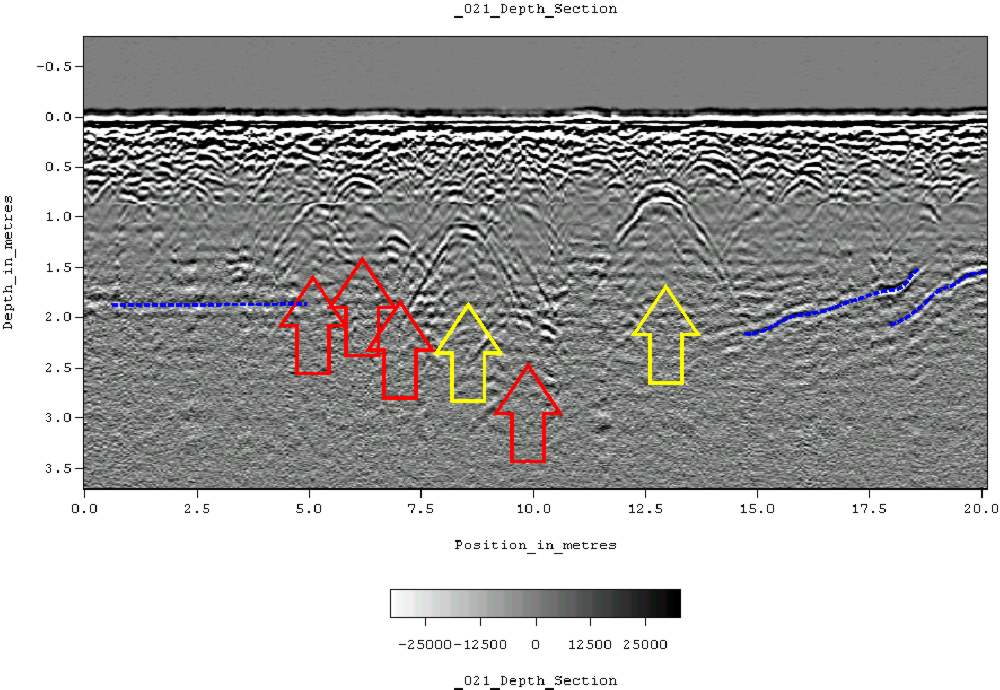

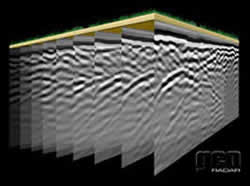

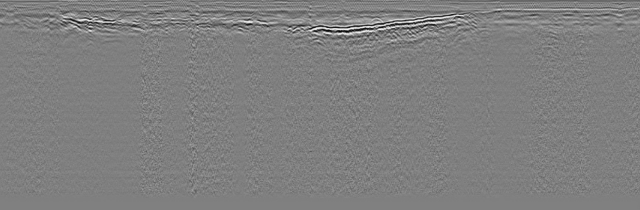

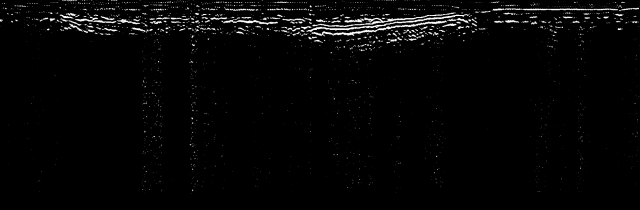

In short, GPR works by sending pulses of radar energy from a surface antenna. These pulses travel outward into the ground as waves. If there is an object below ground, the pulse reflects off that object rather than only off the surrounding soil, and travels back to the receiving antenna at a different time (measured in nanoseconds). The most important type of data that you receive from GPR is called a reflection profile and looks like this:

Essentially, by finding the aberrations in the scan, one can figure out where underground objects are.
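To make the timing idea concrete, here is a minimal sketch of how a reflection's two-way travel time can be converted into an approximate depth. It uses the standard low-loss approximation (wave speed equals the speed of light divided by the square root of the material's relative permittivity). The function names and the example numbers (20 ns, relative permittivity of 9) are assumptions for illustration and are not taken from the actual scans.

```python
import math

C_M_PER_NS = 0.299792458  # speed of light in vacuum, in metres per nanosecond


def wave_speed(rel_permittivity):
    """Approximate radar wave speed in a low-loss material, in m/ns."""
    return C_M_PER_NS / math.sqrt(rel_permittivity)


def depth_from_two_way_time(t_ns, rel_permittivity):
    """Approximate reflector depth in metres.

    t_ns is the two-way travel time (down and back), so it is halved.
    """
    return wave_speed(rel_permittivity) * t_ns / 2.0


# Hypothetical example: an echo arriving after 20 ns in soil with an
# assumed relative permittivity of 9.
depth = depth_from_two_way_time(20.0, 9.0)
print(f"approximate depth: {depth:.2f} m")
```

The permittivity of real ground varies with moisture and material, so any depth computed this way is only an estimate.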

History of CMU/Scanning With Geospatial

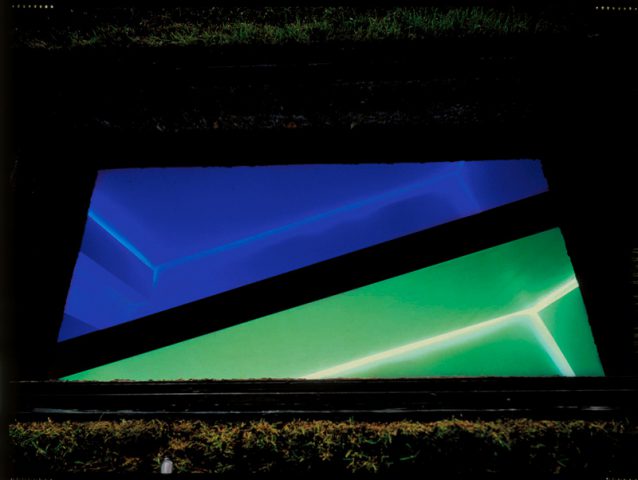

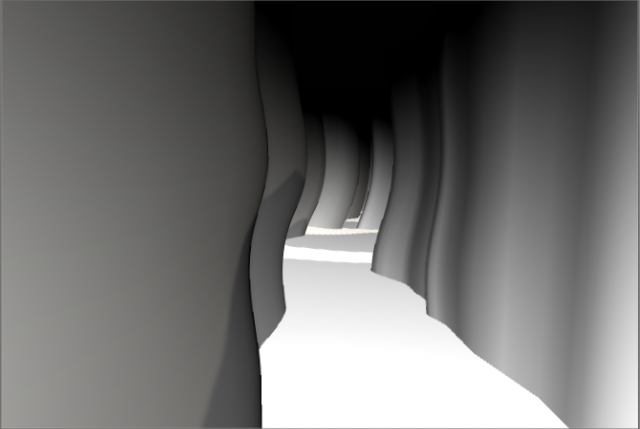

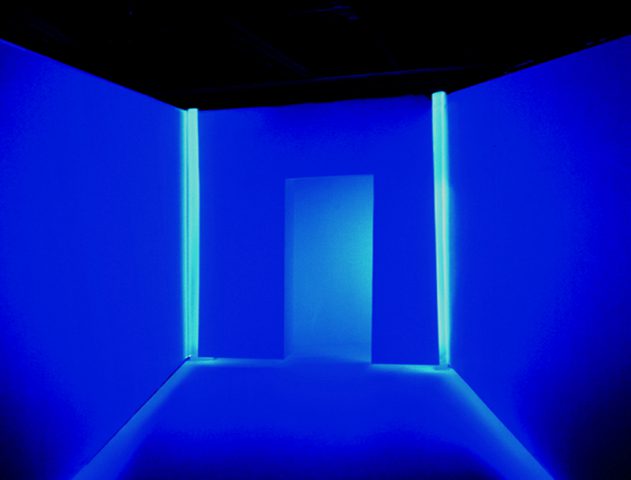

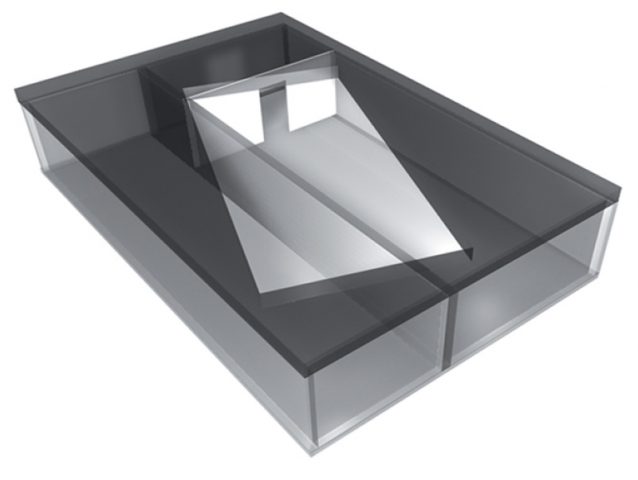

One of the things that we scanned was the buried artwork Translocation by Magdalena Jetelová. This was an underground room that was installed underneath the Cut in 1991. I talked with the lovely Martin Aurand (architectural archivist of CMU), who told me some of the stories about this piece. In the late 80s/early 90s, a CMU architecture professor who was beloved by many of the staff died in a plane crash on her way to Paris. To honor her, the artist Magdalena Jetelová created a room beneath the Cut in a shipping container, with lights and a partition. There was a large piece of acrylic on top of it so that you could actually walk around on top of it. The artwork was buried somewhere around 2004, however, as water had started to leak in, ruining the drywall and fogging the acrylic. Most people on campus don’t know that it exists.

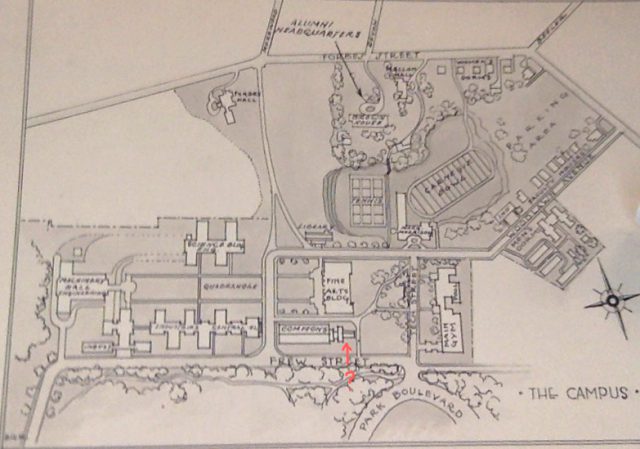

Another area that I explored was the area by Hunt Library now known as the peace garden. This used to be the site of a building called Langley Laboratory (although it was often labeled Commons on maps). I visited Julia Corrin, one of the other archivists on campus, to look through the archives for old pictures of CMU. One part of Langley Laboratory in particular caught my eye: a small portion that jutted off the end and appeared in no photographs except the aerial photos and plans. Julia did not actually know what that part of the building was for and asked me to explore it. After looking through the GPR data, I don’t believe any remnants of it remain. It is likely that the building’s foundation was either temporary or completely removed for the creation of Hunt Library.

The last big area I explored was the Numbers Garden behind CFA. This area was particularly interesting to scan because Purnell Center is immediately below it. We could see how the underground ceiling sloped beneath the ground we were walking on, as well as the various pipes and electrical lines between the sidewalk and the ceiling.

The people at Geospatial were amazing partners in this project and went above and beyond to help me and our class learn a lot about GPR and its uses.

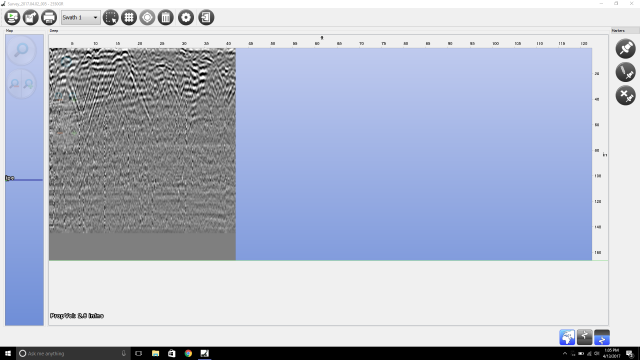

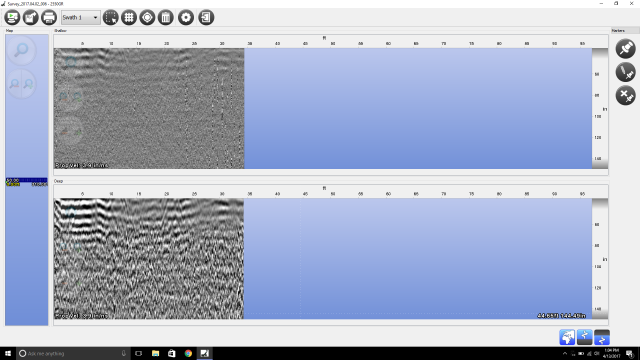

Hearing the Ground

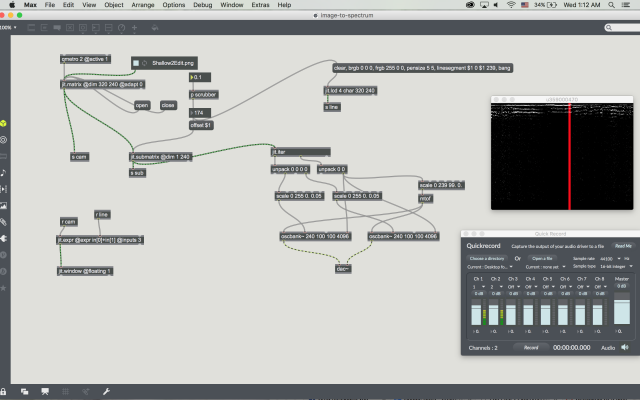

After scanning, I used SubSite’s 2550GR GPR Software to convert the scans from .scan files into a more standard image format. I went through them all and organized each swath of scans into folders based on what part of the scan it was, whether it was the shallow or deep radar scan, pitch range etc. I then brought the swaths into Photoshop and edited the curves and levels to filter out most of the noise and irrelevant data. I put these edited images into a Max/MSP patch. This patch takes an array of pixels and assigns a pitch to each pixel depending on its color/brightness. I did this for both the deep and shallow scans, which I used as bass and treble respectively. I then combined the audio clips from all the different swaths, deep and shallow separately, to make two pieces, which I joined together at the end in Audacity.
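As a rough illustration of the pixel-to-pitch idea, here is a minimal Python sketch. It is not the Max/MSP patch itself: the linear brightness mapping, the MIDI note ranges for bass and treble, the tone length, and all function names are assumptions chosen for the example.

```python
import math


def brightness_to_midi(brightness, low_note, high_note):
    """Map a 0-255 pixel brightness linearly onto a MIDI note range."""
    span = high_note - low_note
    return low_note + round(brightness / 255 * span)


def midi_to_hz(note):
    """Equal-tempered frequency of a MIDI note (note 69 = A4 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def sine_tone(freq_hz, seconds=0.1, sample_rate=44100):
    """One short sine tone as a list of floats in [-1, 1]."""
    n = round(seconds * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def sonify(pixels, low_note, high_note):
    """Turn a row of pixel brightnesses into one tone per pixel, end to end."""
    samples = []
    for b in pixels:
        note = brightness_to_midi(b, low_note, high_note)
        samples.extend(sine_tone(midi_to_hz(note)))
    return samples


# Hypothetical row of greyscale pixel values from an edited scan image.
row = [0, 64, 128, 255]
deep_notes = [brightness_to_midi(b, 36, 60) for b in row]     # assumed bass range
shallow_notes = [brightness_to_midi(b, 60, 84) for b in row]  # assumed treble range
print(deep_notes, shallow_notes)
```

Keeping the deep and shallow scans in separate note ranges mirrors the bass/treble split described above; the samples returned by `sonify` could be written to a WAV file with the standard `wave` module for mixing in an editor such as Audacity.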

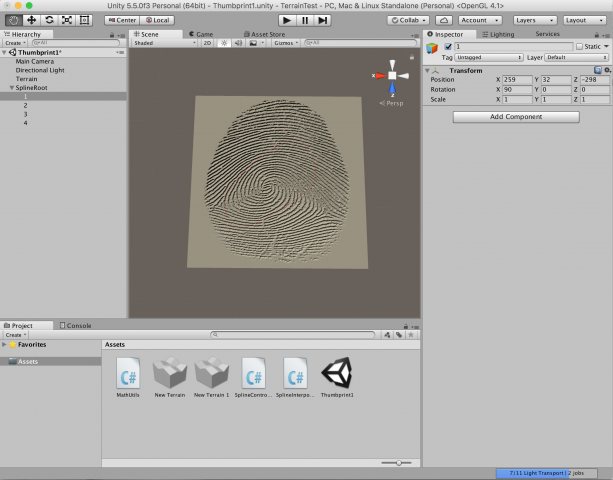

Spatialized Audio with Scans in VR

One of the later portions of the project was putting this audio into a spatialized VR project. I used splines in Unity to map our path in the Numbers Garden. I then attached the audio source to the spline so that the audio would travel through the Vive space in the same pattern as we did while scanning. I put a cube on the audio source so that it would be easier to find. I created two splines (one for the bass/deep and one for the treble/shallow) and placed them accordingly in the space, with the bass lower to the ground. I then used the Oculus Audio SDK so that the participant would be able to find the audio source merely by moving their head. I finished by writing a few scripts that displayed the scans as spinning, slightly pulsating skyboxes. Another script changed the displayed scan when the sound for that swath had ended.
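The path-following idea can be sketched outside Unity as well. The example below moves a point along a smooth curve through a list of waypoints using a uniform Catmull-Rom spline. This is an assumption: the spline type used in the Unity project is not specified, and the waypoint coordinates and function names here are hypothetical.

```python
def catmull_rom(p0, p1, p2, p3, t):
    """Point on the uniform Catmull-Rom segment between p1 and p2, t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (2 * b
               + (-a + c) * t
               + (2 * a - 5 * b + 4 * c - d) * t2
               + (-a + 3 * b - 3 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def position_on_path(points, u):
    """Position at progress u in [0, 1] along a path through all `points`.

    The first and last points are duplicated so the curve starts and
    ends exactly on them. Requires at least two points.
    """
    padded = [points[0]] + list(points) + [points[-1]]
    segments = len(points) - 1
    scaled = min(max(u, 0.0), 1.0) * segments
    i = min(int(scaled), segments - 1)  # index of the current segment
    t = scaled - i                      # local progress within that segment
    return catmull_rom(padded[i], padded[i + 1], padded[i + 2], padded[i + 3], t)


# Hypothetical waypoints (x, y, z) in metres, with y as height.
treble_path = [(0.0, 1.5, 0.0), (1.0, 1.5, 2.0), (3.0, 1.5, 2.5)]
# The bass path follows the same route, lower to the ground.
bass_path = [(x, 0.3, z) for x, _, z in treble_path]

print(position_on_path(treble_path, 0.25))
print(position_on_path(bass_path, 0.25))
```

Driving `u` with elapsed playback time divided by clip length would make the source reach the end of the path as the audio finishes.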

Continuation

I am really hoping to continue this project over this coming summer and next year. I hope to scan more places and create isosurface profiles from the data. I could then use these so that every position in the Vive area has its own sine wave, corresponding to the isosurface model in a similar fashion. By moving around the space, participants would be able to physically hear when their head is near an object.