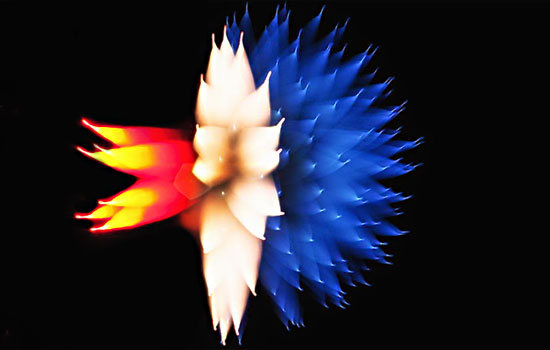

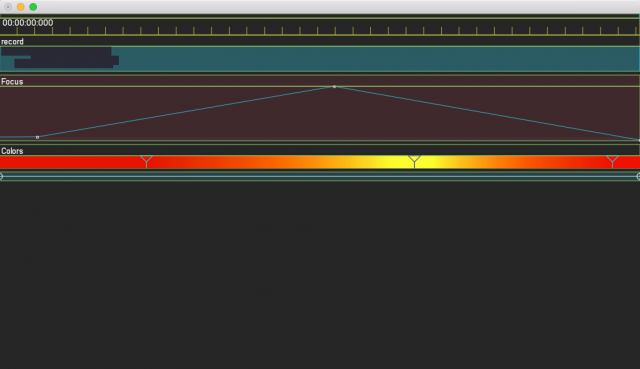

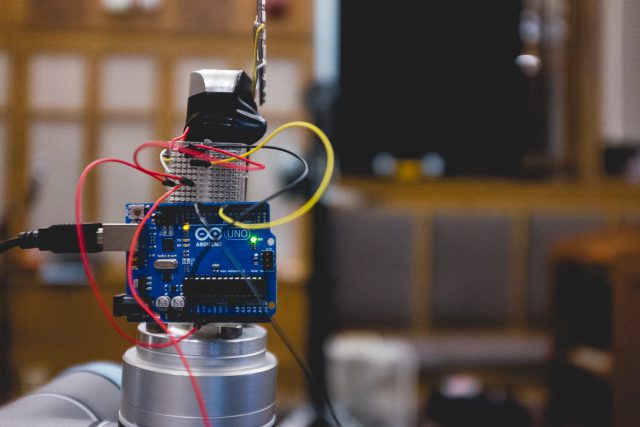

As a final experiment in working with robots and cameras, Quan and I decided to mount the Blackmagic camera on the robot this time. Many people have done robotic cinematography by simply using a robotic arm to maneuver the camera in specific ways. Our technical endeavor was to create interesting cinematic effects using the motion of the robot arm around objects while simultaneously controlling the focus and the zoom. I wrote an openFrameworks app using the ofxTimeline addon to computationally control the focus and zoom of the Blackmagic camera through an Arduino.
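
To give a rough idea of what timeline-driven focus and zoom means, here is a minimal sketch in Python rather than the actual openFrameworks code. It assumes each track is a list of `(time, value)` keyframes with values normalized to 0..1, sampled with linear interpolation and then scaled to a single byte for the microcontroller. The function names, the keyframe format, and the one-byte command are all hypothetical and are not taken from ofxTimeline or from our Arduino sketch.

```python
from bisect import bisect_right


def sample_track(keyframes, t):
    """Linearly interpolate a keyframe track at time t (seconds).

    keyframes: list of (time_s, value) pairs sorted by time, values in 0..1.
    Times before the first or after the last keyframe are clamped.
    """
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, t)          # index of first keyframe after t
    t0, v0 = keyframes[i - 1]
    t1, v1 = keyframes[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def to_command_byte(value):
    """Map a normalized 0..1 value to 0..255 (hypothetical command format)."""
    return max(0, min(255, round(value * 255)))


# Example tracks (made-up values): focus racks out and back, zoom ramps in.
focus_track = [(0.0, 0.0), (2.0, 1.0), (4.0, 0.5)]
zoom_track = [(0.0, 0.2), (4.0, 0.8)]

if __name__ == "__main__":
    for t in (0.0, 1.0, 2.0, 3.0, 4.0):
        f = to_command_byte(sample_track(focus_track, t))
        z = to_command_byte(sample_track(zoom_track, t))
        print(f"t={t:.1f}s focus={f} zoom={z}")
```

In a real setup the two bytes would be written to the serial port once per frame, with the robot path and the timeline started together so the focus pulls line up with the arm's motion.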

Our inspiration for creating cinematic effects entirely computationally with the robot arm came from watching videos of Hitchcock's dolly zoom effect. If all he had was a dolly and a manual zoom, we wanted to find out what we could do with a robotic arm and computationally controlled zoom and focus.
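
The geometry behind a dolly zoom is simple enough to sketch. Under a basic pinhole-camera assumption, a subject of width `w` at distance `d` fills the frame when the horizontal field of view is `2 * atan(w / (2 * d))`, so as the camera moves, the zoom has to change to keep that width constant. The Python below is only an illustration of that relationship; the function names and the sensor width in the example are placeholders, not measurements from our rig.

```python
import math


def dolly_zoom_fov(subject_width_m, distance_m):
    """Horizontal field of view (degrees) that keeps the subject filling the frame."""
    if subject_width_m <= 0 or distance_m <= 0:
        raise ValueError("width and distance must be positive")
    return math.degrees(2.0 * math.atan(subject_width_m / (2.0 * distance_m)))


def dolly_zoom_focal_length(sensor_width_mm, subject_width_m, distance_m):
    """Focal length (mm) giving the same framing, pinhole approximation."""
    if subject_width_m <= 0 or distance_m <= 0:
        raise ValueError("width and distance must be positive")
    return sensor_width_mm * distance_m / subject_width_m


if __name__ == "__main__":
    sensor_width_mm = 12.0   # placeholder value, not a real camera spec
    subject_width_m = 0.5
    for d in (0.5, 1.0, 1.5, 2.0):
        fov = dolly_zoom_fov(subject_width_m, d)
        f = dolly_zoom_focal_length(sensor_width_mm, subject_width_m, d)
        print(f"distance={d:.1f} m  fov={fov:.1f} deg  focal length={f:.1f} mm")
```

The focal length scales linearly with distance, which is why the background appears to stretch or compress while the subject stays the same size in frame.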

Our first attempt was to create stereo video using two Blackmagic cameras. After filming quite a few scenes using computationally controlled focus and two cameras, we realized that shooting stereo video is not as simple as putting two cameras eye-distance apart. After creating the first stereo video and viewing it on a Google Cardboard, we noticed very quickly that whenever the cameras moved away from an object, the focal point needed to be farther away and the cameras should have turned away from each other. Conversely, when an object got closer, the cameras needed to shorten the focal point and angle inward toward each other.
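
The convergence problem described above can be put into numbers. Assuming two cameras separated by a baseline `b` and both aimed at a point straight ahead at distance `d`, each camera has to rotate inward by `atan((b / 2) / d)`. This is a simplified toe-in model that we did not implement; the function name and the 6.4 cm baseline in the example are assumptions for illustration.

```python
import math


def toe_in_angle_deg(baseline_m, distance_m):
    """Inward rotation (degrees) of each camera so both aim at the same point."""
    if baseline_m <= 0 or distance_m <= 0:
        raise ValueError("baseline and distance must be positive")
    return math.degrees(math.atan((baseline_m / 2.0) / distance_m))


if __name__ == "__main__":
    baseline_m = 0.064  # assumed eye-distance spacing, not a measured value
    for d in (0.3, 0.5, 1.0, 2.0, 5.0):
        angle = toe_in_angle_deg(baseline_m, d)
        print(f"subject at {d:.1f} m: rotate each camera inward {angle:.2f} deg")
```

The angle shrinks quickly as the subject moves away, which matches what we saw: close objects need the cameras angled noticeably toward each other, while distant ones need them nearly parallel.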

After our in-class progress critique, the main feedback was that we already had a great capture technique (one camera, one robot arm, computationally controlled zoom and focus) but we needed to derive meaning from the objects we were shooting. Our project needed a story. We had considered doing portraits before, and the class reinforcing that portraits would be the most interesting way to use this tool made the decision for us. We moved to portraiture.

The Andy Warhol screen tests were our inspiration for this next part of the project:

We liked the idea of putting people in front of the robot arm while we explored their faces using this unusual method. We had our subjects stare at one spot and ran the same three-minute path around each of their faces. For three minutes they had to sit still while this giant arm with a camera explored their faces very intimately. The results were pretty mesmerizing.

We also wanted to do a similar exploration of people’s bodies. We created a path with the robot that would explore all of a person’s body for 4.5 minutes. It would focus in and out on certain parts of people’s bodies as it explored. During this examination we had our five subjects narrate the video, talking about how they felt about those parts of their bodies or how they felt about the robot getting so intimate with them. We got a lot of interesting narrations about people’s scars, insecurities, or just basic observations about their own bodies. It ended up being a very intimate and almost somber experience to have a robot look at them so closely.