As we all know, I’ve been playing with cameras and a robot all semester. My inspiration for using a robot to paint with light came from Chris Noel, who created this KUKA light painting robot for Ars Electronica.

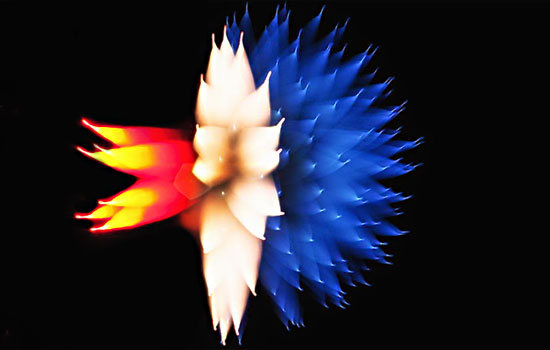

Since painting and animation have already been done, my partner Quan and I decided to still light paint with the robot, but using computational focus blur. Quan is the designer and I am the programmer, so we had very distinct roles in this project. This truly was an experiment, since neither of us knew what to expect. All we had seen were these pictures of fireworks being focus blurred by hand:

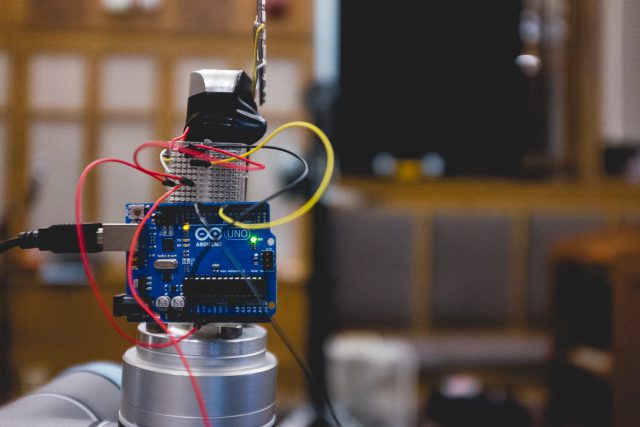

My original plan for computationally controlling focus was to use the Canon SDK, which I have used before to take pictures, but controlling the focus turned out to be much more complicated. We then tried a simpler solution: 3D printing one gear to fit around the focus ring of a DSLR and another for the end of a servo, so that the servo could turn the focus ring. This was a solid solution, but a cleaner one ended up being the Blackmagic Micro Cinema Camera (BMMCC). This is a very hackable camera that allowed me to computationally control the focus with a PWM signal.

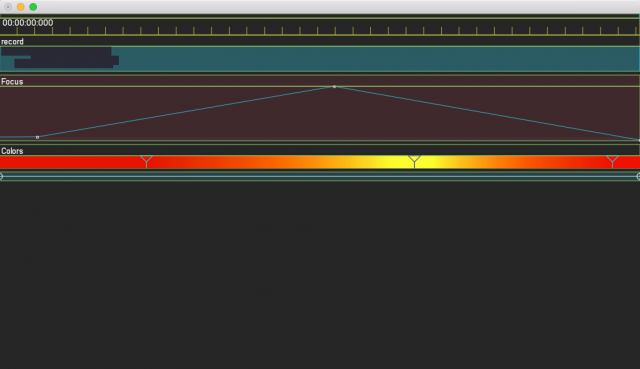
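To make the PWM idea concrete, here is a minimal sketch of how a normalized focus value could be turned into a servo-style pulse. The 1000 to 2000 microsecond range and the 50 Hz frame rate are the common hobby-servo convention and are assumptions here, not values taken from our app or from the camera's documentation. The function names are hypothetical as well.

```python
def focus_to_pulse_us(focus, min_us=1000.0, max_us=2000.0):
    """Map a normalized focus value (0.0 to 1.0) to a pulse width in microseconds.

    min_us and max_us are assumed hobby-servo defaults, not measured values.
    """
    clamped = max(0.0, min(1.0, focus))  # keep out-of-range input inside the travel
    return min_us + clamped * (max_us - min_us)


def pulse_to_duty_cycle(pulse_us, frequency_hz=50.0):
    """Convert a pulse width to a duty cycle (0.0 to 1.0) at the given PWM frequency."""
    period_us = 1_000_000.0 / frequency_hz  # 20000 us per frame at the assumed 50 Hz
    return pulse_us / period_us


if __name__ == "__main__":
    for focus in (0.0, 0.25, 0.5, 1.0):
        pulse = focus_to_pulse_us(focus)
        print(focus, pulse, pulse_to_duty_cycle(pulse))
```

Whatever generates the signal (a microcontroller, for instance) would need the real end points calibrated against the lens, since the usable focus travel differs between lenses.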

Then I created an app using ofxTimeline to control the focus of the BMMCC and the color of an LED attached to the end of the robot arm. The robot arm moved in predetermined shapes while we computationally controlled the focus. Focus blur is usually done manually and on events that cannot be controlled, like fireworks. Ours was an entirely controlled situation: we could play with every aspect of it because we controlled every variable. Quan then used the Echo effect in After Effects to average the frames and create these “long exposure” images.
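For anyone curious what "averaging the frames" means in practice, here is a rough sketch in plain Python. It is not how After Effects implements Echo; it only illustrates two simple ways of collapsing a stack of video frames into one "long exposure" image. Frames are assumed to be equally sized grayscale images stored as lists of rows, and the function names are made up for this example.

```python
def average_frames(frames):
    """Per-pixel mean of a list of equally sized grayscale frames."""
    if not frames:
        raise ValueError("need at least one frame")
    height, width = len(frames[0]), len(frames[0][0])
    count = len(frames)
    return [
        [sum(frame[y][x] for frame in frames) / count for x in range(width)]
        for y in range(height)
    ]


def lighten_frames(frames):
    """Per-pixel maximum: keeps the brightest value seen at each pixel."""
    if not frames:
        raise ValueError("need at least one frame")
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [max(frame[y][x] for frame in frames) for x in range(width)]
        for y in range(height)
    ]


if __name__ == "__main__":
    # A single bright dot moving left to right across a 1x3 image.
    frames = [
        [[255, 0, 0]],
        [[0, 255, 0]],
        [[0, 0, 255]],
    ]
    print(average_frames(frames))  # [[85.0, 85.0, 85.0]]
    print(lighten_frames(frames))  # [[255, 255, 255]]
```

The toy example shows the trade-off: a plain average dims a moving light in proportion to the number of frames, while a per-pixel maximum keeps the streak at full brightness.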

The first tests we did were with random focusing, and they looked interesting, but they also looked computer generated. In the second shoot, we aimed to integrate the streaks with real objects.

Test Shot:

App:

Final Setup:

Final Gifs:

Outline of a reflective object:

Reflected by a Mirror

Through a Glass Bowl

Through a Large Plastic Container

Anonymous feedback from group critique, 4/13/2017:

Computational Focus Blur – using robot arm/ofxTimeline/After Effects. Inspiration: how to capture fireworks (for example)? Picasso light painting experiments – on ice skaters. Or Taxi Driver (Michael Chapman). When it plays against reality – it stops being an apparatus and becomes more of a composition.

You should be clear that you made one timeline program that simultaneously controls (1) the robot arm, (2) the camera focus, and (3) the color and intensity of the light. I wish you had a screenshot of the timeline app that has all of those different staves (robot + focus + color).

Charlie asked “what did you like”. I think he’s saying, “this is promising, but none of these images yet reveal the obsession of someone who is fascinated with the results.” I think you need to get there.

You should use the terms “time lapse” and “light painting” in your discussion; I’m surprised you didn’t.

Love this set of four gifs

Wonderful choice to include objects which refract the light into novel shapes

What is the concept behind your project?

I think they explained their concept, they want to look at different ways of perceiving light, “splitting reality” → yes, but it can be pushed further or at least explained with more detail

Look at the work of German photographer Boris Eldagsen. He sets up so many tricks with light like you are doing. His methods are all super low-tech, certainly no robot arms, but can maybe show you some possibilities for getting surreal with different physical lighting manipulations.

Results look beautiful. Top left with weird reflections looks interesting

The best result is the one where it goes behind the glass jar. I think you could redevelop this project with more interplay between your optical system and the environment it’s moving through. It’s most interesting when the light is bounced off of something, or transmitted through something. Study Moholy-Nagy’s “Light-Space Modulator” for inspiration. https://www.google.com/search?q=Moholy-Nagy+“Light-Space+Modulator”&source=lnms&tbm=isch

We’re really looking at something we don’t see with our naked eye. Weird. Kind of magical.

Beautiful results. The idea of “purposeful imperfection” is very interesting. Concepts of “realness” and “humanity” in computational, completely controlled processes are also very compelling.

^ +

I want a process gif SOOO BADLY of you controlling the robot arm

It’s lovely to see light as a creature. It looks alive. + +

I love the colors as well. Was the LED green?

If you had another robot could you program sweeping camera movements to complement these compositions? They are lovely but very static with a non-moving camera

Mesmerizing

Nice work. Reminds me of Picasso’s light drawing. I’d love to see a specific application of your work (e.g. a tangible version related to AR spatial drawing). Prof. Jean Oh from Robotics and Prof. Minha Yang are working on a project that would enable robots to express how they perceive the world in a poetic way. This would be a good reference for them. Please reach out to Jean Oh. http://iam.jeanoh.org (let me know if you need an introduction – Austin)