Memory Rooms

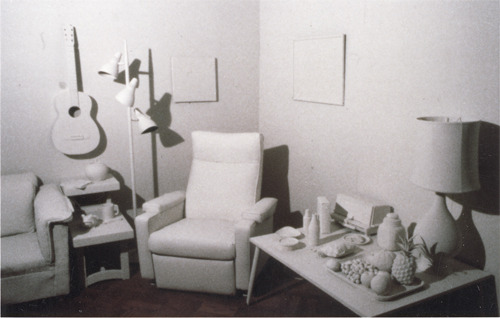

This is an interactive projection where the artist used photogrammetry, clay, ink, pigment, and paper to recreate her childhood bedroom.

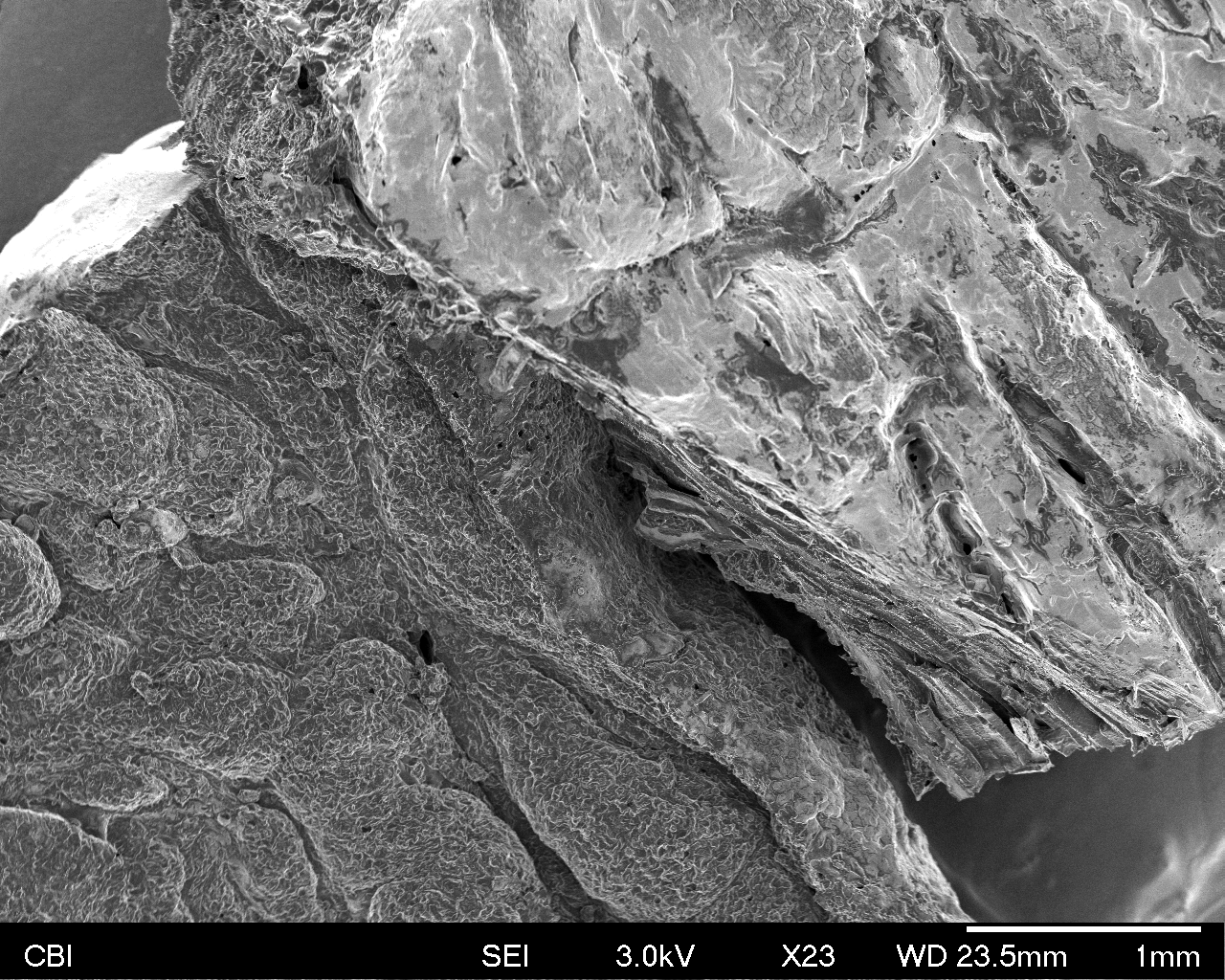

My project was an attempt to explore the parallels between memory and capture technology, interactive media, and virtual space. I wanted to capture, in the most honest way, my childhood room as it exists to me. Using no sensory references, I drew and modeled in clay everything I could remember from the space: the walls, the furniture, the floor, and so on. I then took all the pieces I had created and integrated them into one coherent representation of the room. In Unity, the ink drawings became planes that represented walls in the virtual space. I used photogrammetry to capture the clay models I made and put them into the virtual room as well.

Due to photogrammetry errors, the misalignment of angles and perspectives in my drawings, the imprecision of my memory, and the personal hand-drawn, hand-molded aesthetic of all the assets, the piece looks less realistic than most representations of real-world places. I used the same techniques and colors for all the drawings and models, so the whole room has a cohesive feel, unlike the real room with its eclectic collection of manufactured clothes, books, bed sheets, and furniture. I think these qualities are significant because this is the version of the room that is integrated into my identity; the things I remember and the way I remember them are all that matter in this representation.

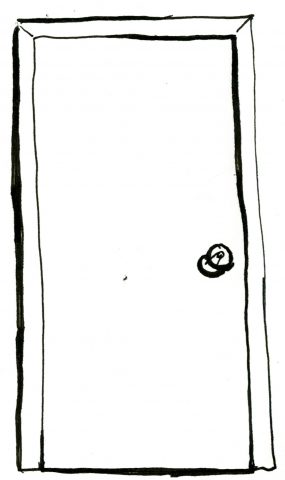

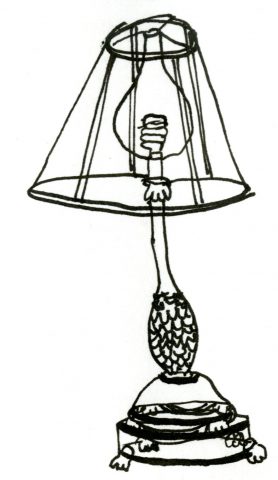

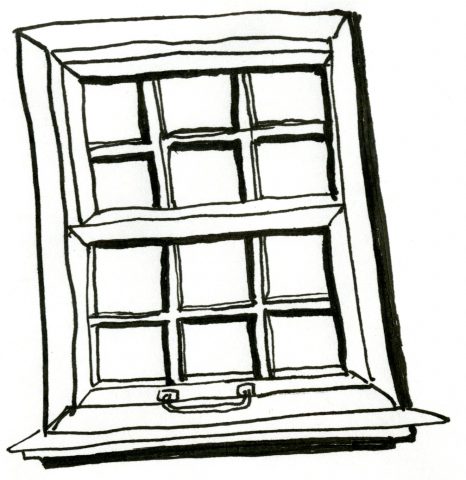

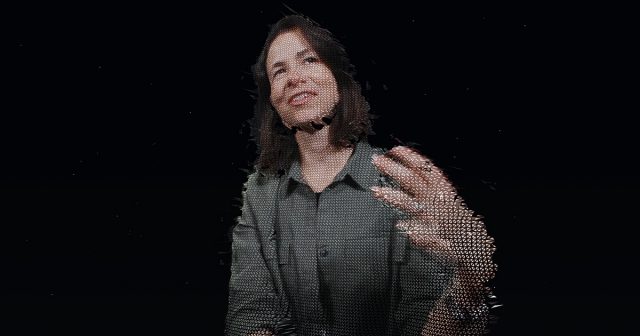

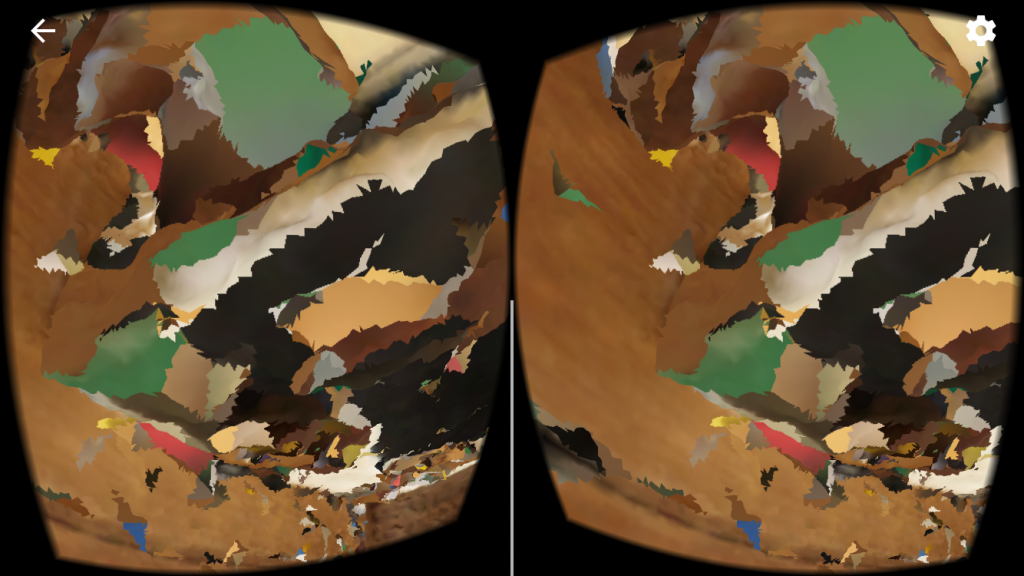

I exaggerated the immaterial, intangible qualities of the piece by placing the wall and furniture planes at incorrect scales and positions in Unity so that the room appears “normal” from only one perspective. If that perspective changes, elements of the room that were remembered and drawn separately (the furniture, doors, windows, etc.) jump out at different depths and must be viewed independently of the context of the whole room. In this way, the experience of the user is fragmented and incorrect, yet deceptively concrete, like the experience of remembering something or experiencing anything in a virtual space. I also projected onto a warped, semi-translucent surface, both to emphasize again the lack of objectivity and precision and to emphasize the domestic, personal subject matter: my own bedroom.

My project is interesting because I find that people’s identities are rooted deeply in their memories (of childhood, of their hometowns, houses, possessions, etc.), and this is especially true for me. The evolution of capture technologies (like photography, cinematography, audio recording, photogrammetry, videogrammetry, 360 video, 3D interactive experiences, etc.) has changed, and will undoubtedly continue to change, the way people remember and self-identify. For example, photographs affect the way we see ourselves and form perceptions of who we are. Before cameras, people could only re-experience their childhood via memory.

The expectations we have of capture technologies are in some ways similar to those that we have of memory, and in many ways people expect to use tech as a surrogate or reinforcement for memory. I think a misconception about this tech is that we’ll be able to record and re-experience the events objectively, that recordings are more trustworthy than memory and that our perceptions will be more impartial. But the pitfalls of memory and technology as a way to store our identities and re-experience them at will are similar. Just as we can never remember a moment completely accurately, we can’t (yet) capture the exact experience of a moment with technology. We will always be looking, remembering, and recording through the biased frame of human perception.

For this work, I was most inspired by Displacements by Michael Naimark. His work is a time-based projection piece where the past of a room is made visible in the present by projecting footage of a room onto itself. I love how his work is oriented physically in one space and how the passing of time is exaggerated and anatomized by the contrast: the past and present states are both extremely clear and easy to tell apart, but they coexist in the same space and blur together. Besides being visually appealing, the piece is suspenseful because the projection never reveals everything at once, but rather only one window at a time. Displacements provokes thought about the recording of past events, the re-experiencing of captured events, the strong spatial connection between past and present, and, most importantly, the inherent dependence of a present state on its history.

My piece drew on Displacements in a number of ways. Like Displacements, my work was meant to induce a sense of misalignment and to provoke thought about capturing and re-experiencing something, and it used projection to do so. However, my work was much more personal: although it is heavily dependent on location, the connection is only truly apparent to me, as the only one who has lived in the real room. I tried, as in Displacements, to reveal connections between what exists now and can be seen, and what existed in the past or can’t be seen. Unlike Displacements, however, my work also strove to encompass ideas about personal identity as it relates to remembered spaces and to recorded or reconstructed spaces.

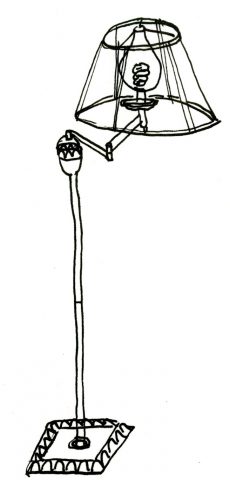

I was also inspired by Fritz Panzer’s wire sculptures of furniture. His work is described as “gestural contour drawings, creating the volume of an object through a gossamer-like outline that seems to gradually dissipate…an almost ethereal experience, requesting the viewer to rely partly on memory and recognition.” His work, for me, evokes the feeling of spatial vacancy. His sculptures point to something that is NOT present, and I wanted the same quality to exist in my work, especially with the furniture. In my renderings of furniture, I made the background invisible so that the ink line drawing in space imitated one of Panzer’s wire sculptures. Like his sculptures, my room is only coherent from a certain perspective.

For me, this project is not finished. I would love to integrate more rooms, more furniture, and more movement. I would love to exhibit the work in a setting where I can furnish the area around the projection to be reminiscent of the virtual content. I am also considering incorporating the original room into my work in some way (perhaps as a series of photos, a 360 photo, or a 3D scan in a companion project) so that the connection between the real room and this remembered, recreated version is stronger.

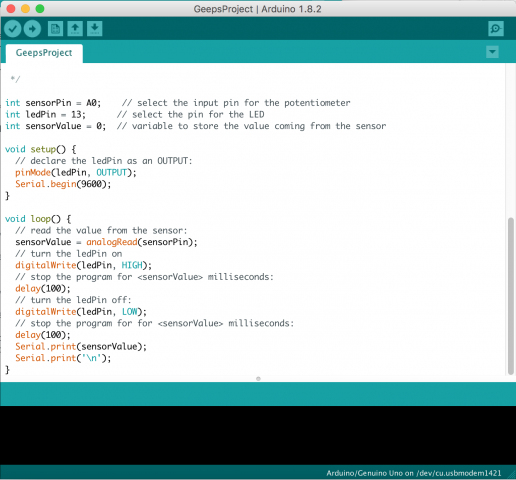

Making Of

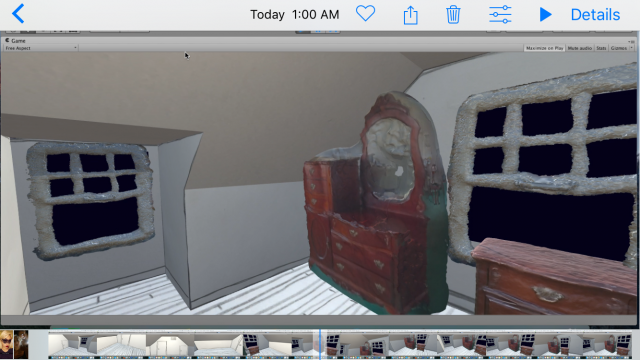

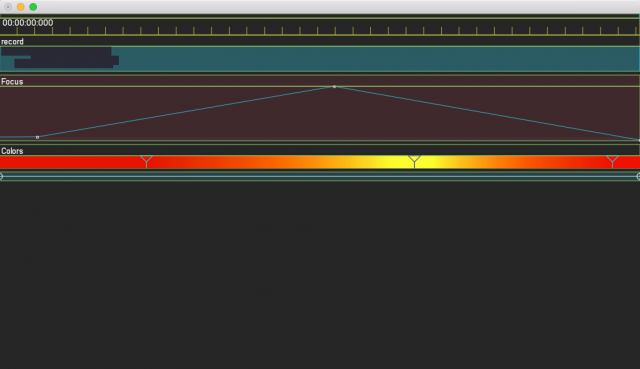

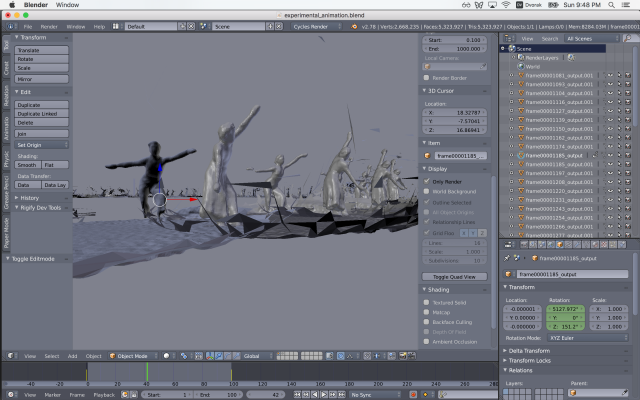

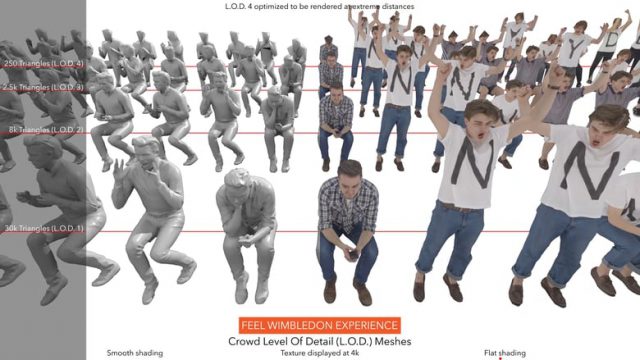

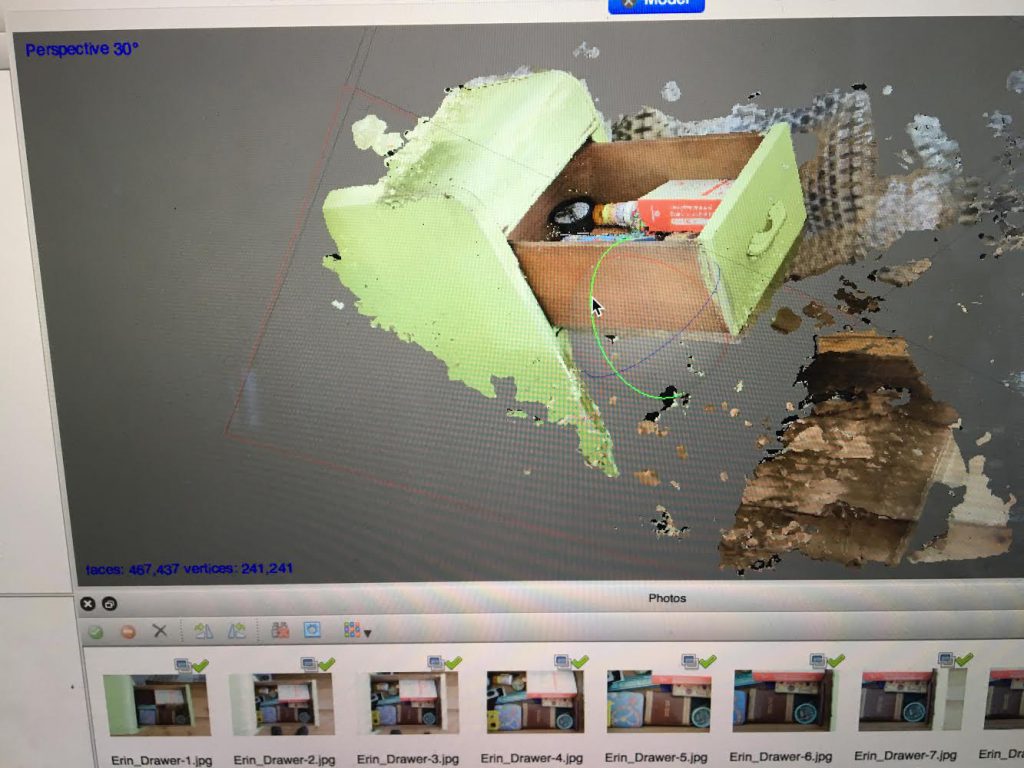

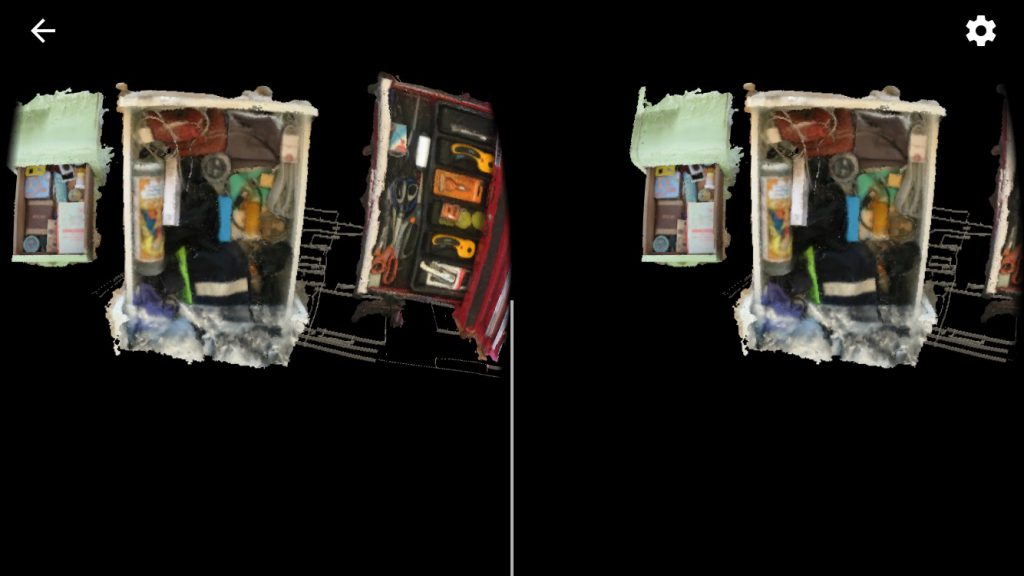

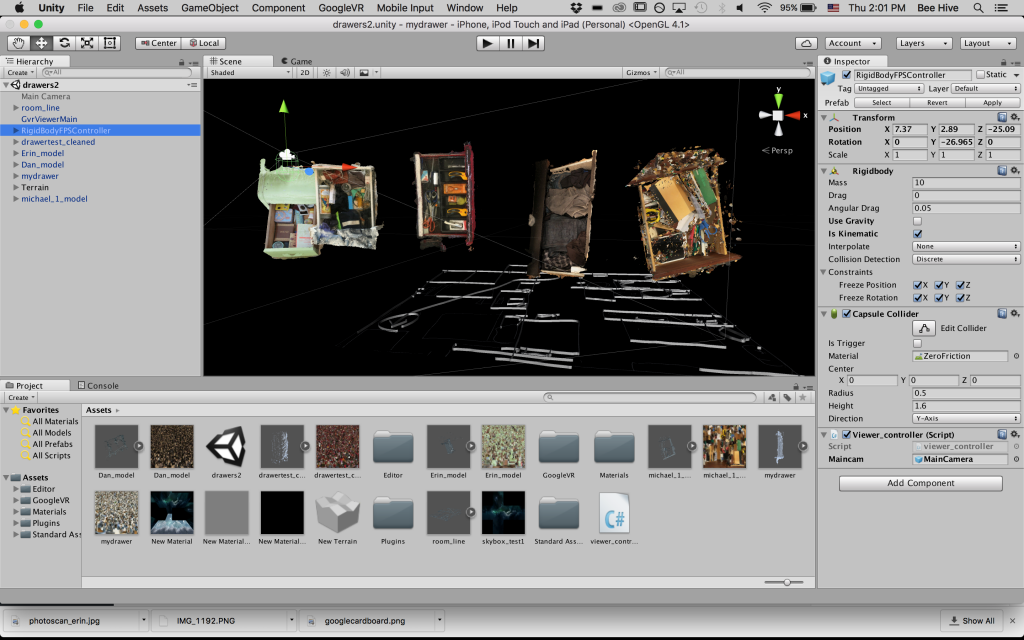

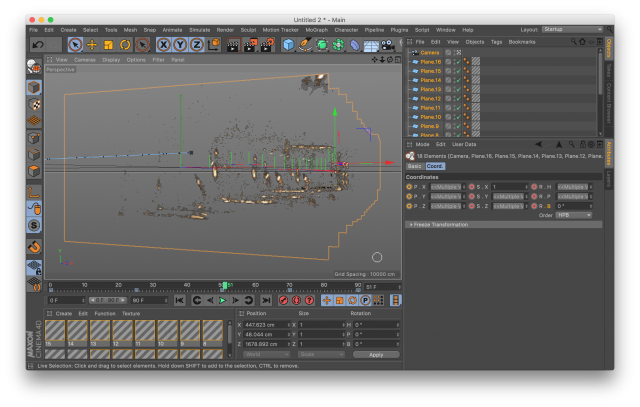

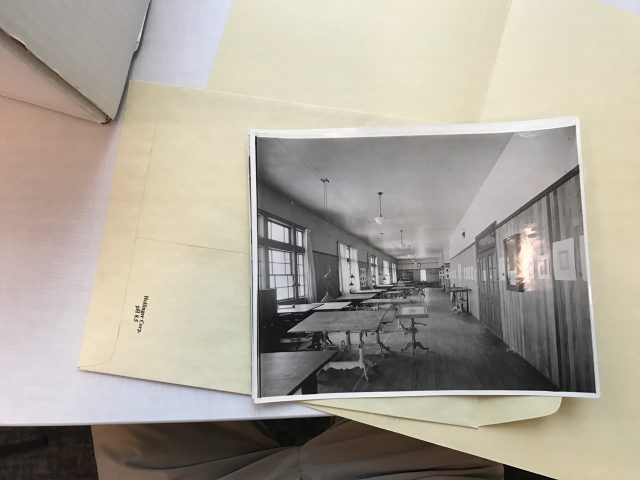

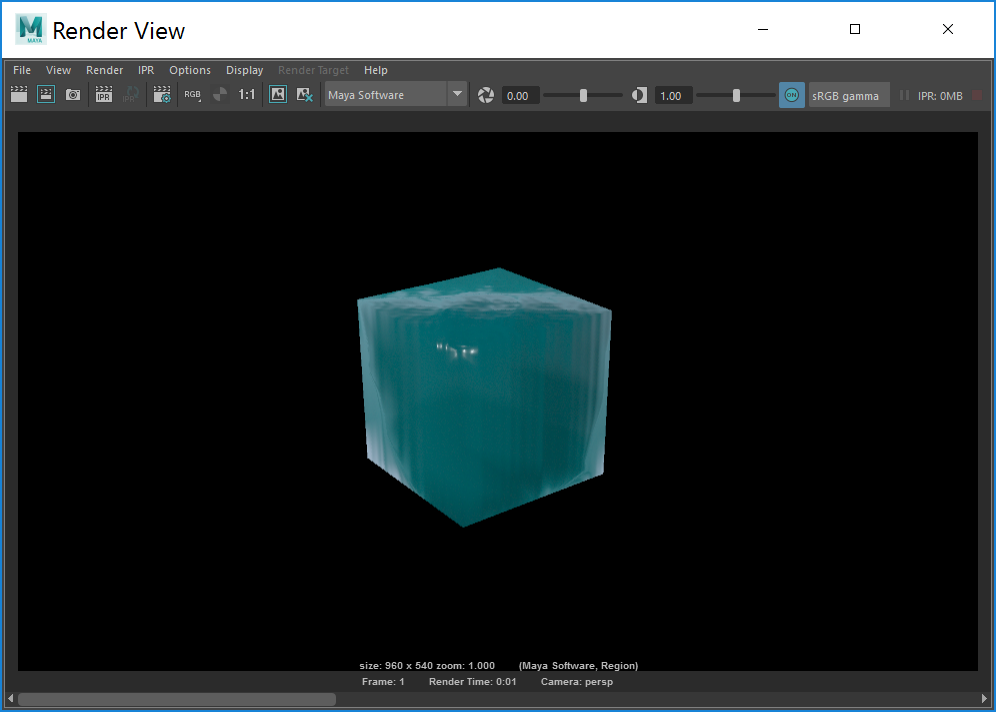

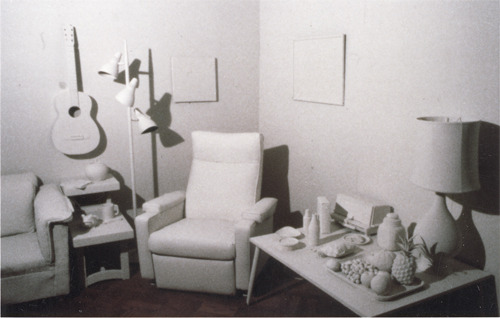

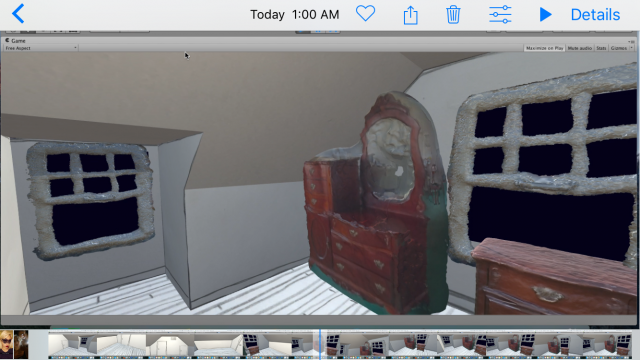

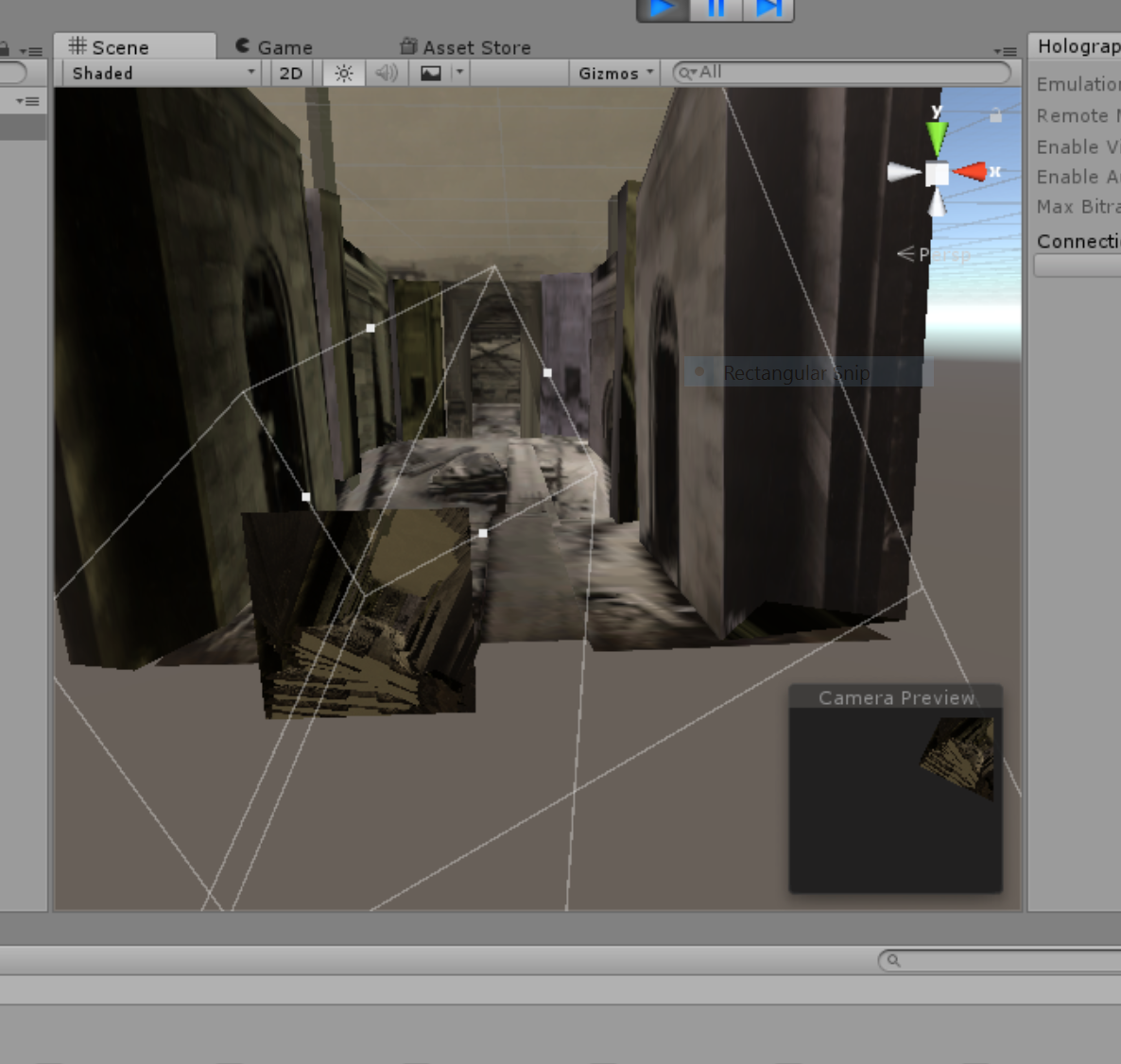

In the beginning, I planned to simply re-model my room in Unity as a single solid object with walls, a floor, and a ceiling, and to use photogrammetry to capture my actual furniture. This is what it looked like:

I changed my mind as I realized that these techniques did not suit my project, which was about the vagaries of memory, virtual space, and capture tech. Instead of the concrete, uniform room, I used separate drawings as planes, which looked more ethereal and pieced together, and I used clay models made from memory. These techniques were more in keeping with my concept: to create from memory and highlight the imprecision of memory and the falseness of virtual spaces and capture technology.

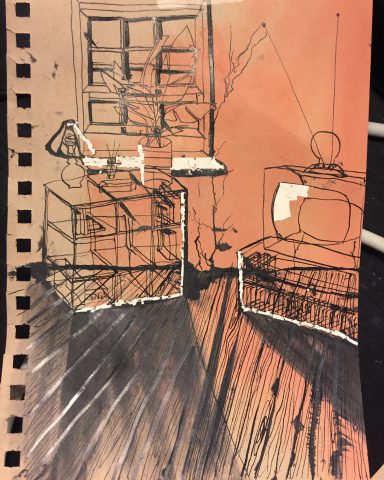

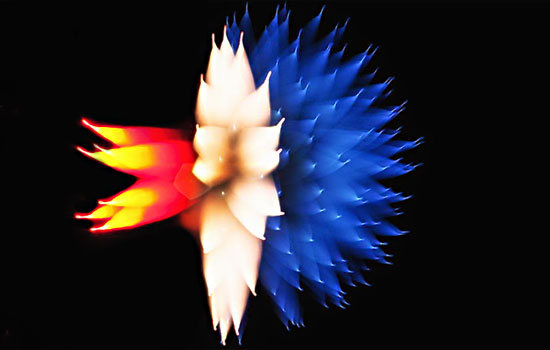

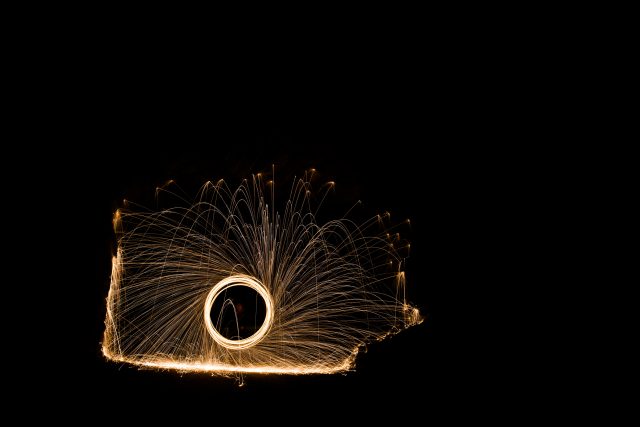

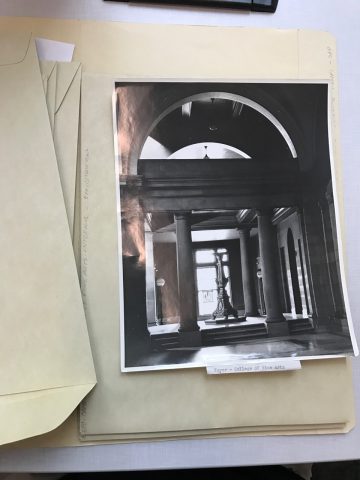

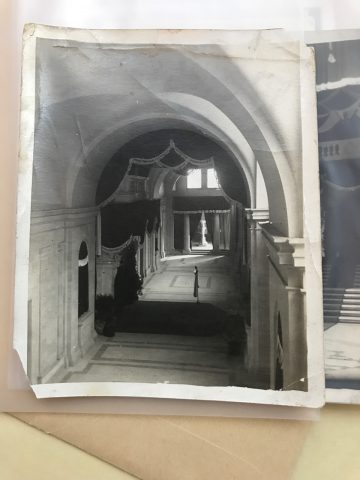

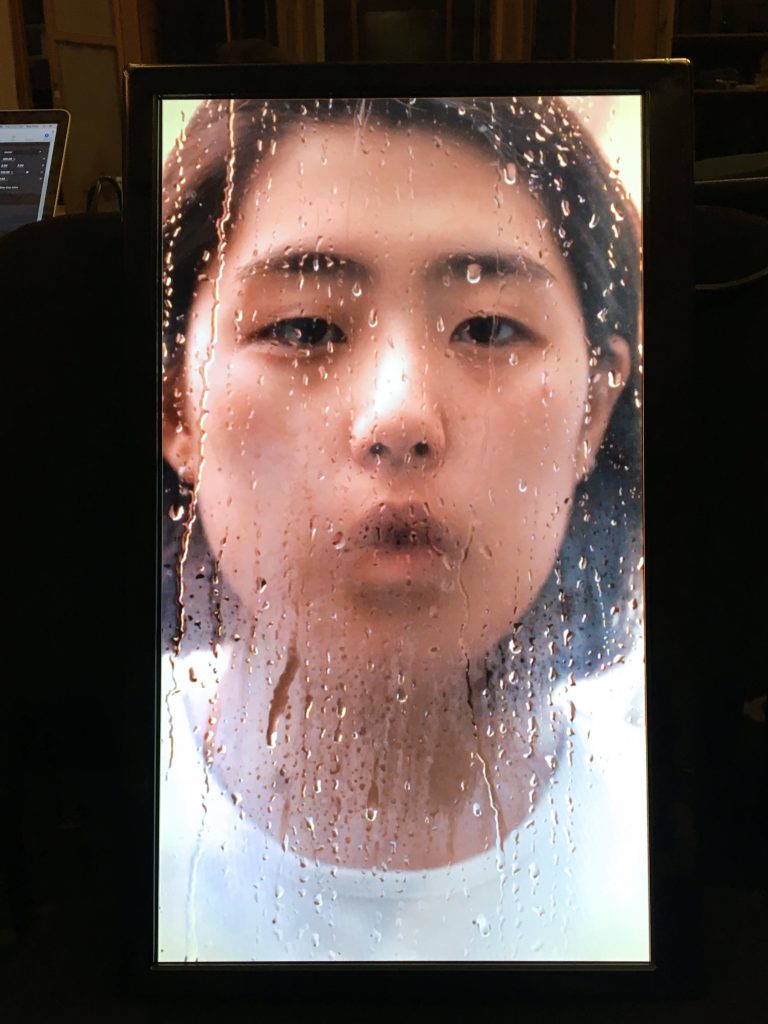

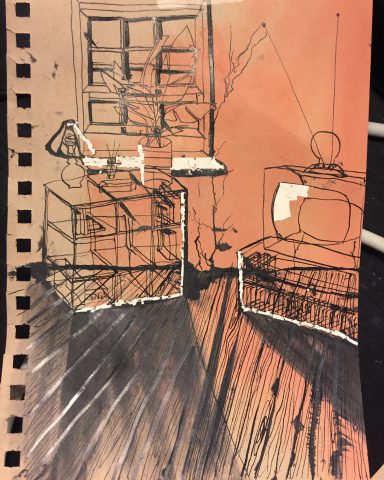

Around this time, only tangentially related to this project, I had been making a lot of sketches using ink, pigment, and whiteout depicting different fragments of memories I had. Here’s one of my sketches:

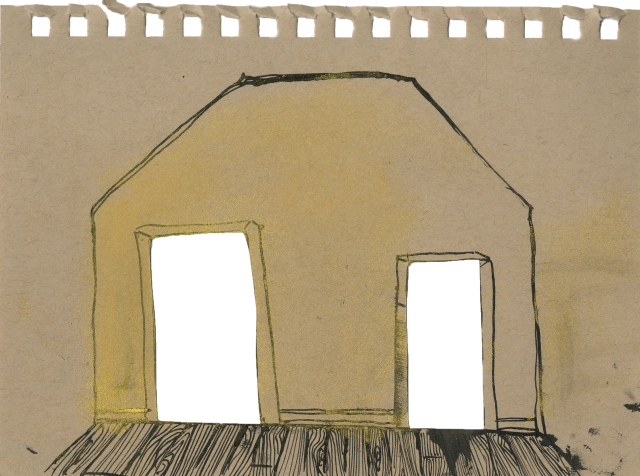

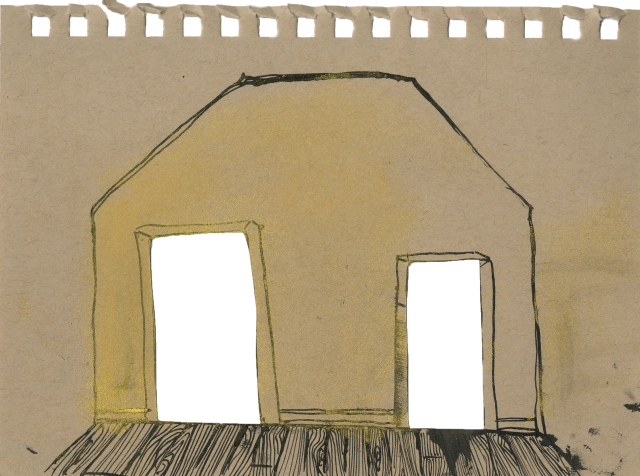

I used these sketches as inspiration for how the room would look. I made a set of drawings, which I scanned and used to texture planes in Unity:

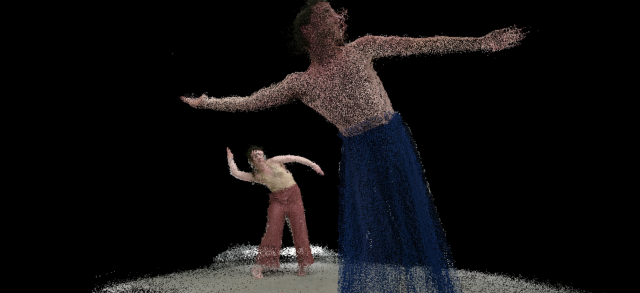

Finally, I placed the assets at incorrect depths and scales to achieve the effect shown in the first GIF below when the user moves to explore the room.

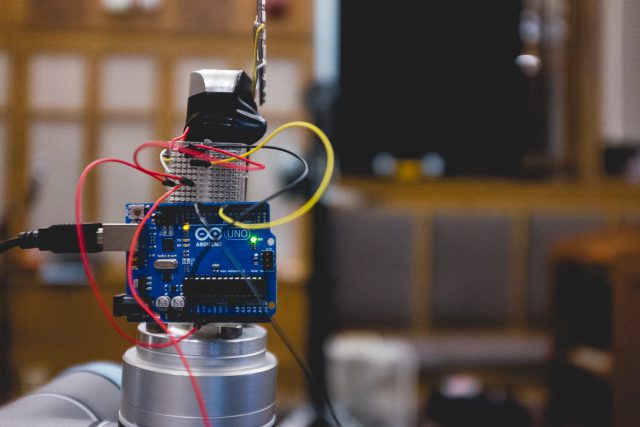
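The geometry behind this effect can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the code from the Unity scene: the function names `push_along_ray` and `project`, the simple pinhole camera looking down the +z axis, and the example coordinates are all hypothetical. The idea is that if a point is moved along the ray from the "correct" viewpoint and the drawing is scaled by the same factor, it lands on the same spot in the image from that viewpoint, but shifts as soon as the viewer moves.

```python
# Hypothetical sketch of forced perspective: names and numbers are
# illustrative assumptions, not taken from the original Unity project.

def push_along_ray(eye, point, s):
    """Move `point` along the ray from `eye`, scaling its distance by `s`.

    Applying this to every corner of a plane both pushes the plane to a
    new depth and scales it by `s` about the eye.
    """
    return tuple(e + s * (p - e) for e, p in zip(eye, point))


def project(eye, point, focal=1.0):
    """Project `point` onto the image plane of a pinhole camera at `eye`.

    The camera is assumed to look straight down the +z axis.
    """
    dx, dy, dz = (p - e for p, e in zip(point, eye))
    if dz <= 0:
        raise ValueError("point is behind the camera")
    return (focal * dx / dz, focal * dy / dz)


sweet_spot = (0.0, 0.0, 0.0)   # the one viewpoint where the room looks "normal"
corner = (1.0, 0.5, 4.0)       # a corner of a drawing at its "true" depth
moved = push_along_ray(sweet_spot, corner, 2.0)  # twice as far, twice as big

# From the sweet spot, both placements project to the same image point.
same_view = (project(sweet_spot, corner), project(sweet_spot, moved))

# From a viewpoint one unit to the right, they no longer line up.
other_eye = (1.0, 0.0, 0.0)
shifted_view = (project(other_eye, corner), project(other_eye, moved))
```

Choosing a different scale factor for each drawing is what would make separately drawn elements drift apart at different rates once the viewer leaves the sweet spot.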

I originally planned to use a headset to present my work, but I found that the headset was too cumbersome and confining. I didn’t want the user to be totally immersed in a virtual reality environment. Instead, I wanted my project to be grounded in a real space so that the user could more easily consider the relationships between present and past, virtual and real, seen and remembered. I opted for projection on a sheer, warped fabric with a Kinect to track head movement, so that the user would have an intuitive way to explore the scene and be naturally immersed without being taken out of the present moment completely.
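One way head tracking like this can drive a virtual camera is sketched below in Python. It is a hedged illustration only: it does not call the Kinect SDK or Unity, and the function names `head_to_camera` and `smooth`, the resting position, the gain values, and the smoothing factor are assumptions made for the example. The tracked head position is measured relative to a resting position, scaled into scene units, and smoothed so that sensor jitter does not make the projected room shake.

```python
# Hypothetical sketch: maps a tracked head position (in meters, sensor
# space) to a virtual camera offset. No real sensor API is used here.

def head_to_camera(head, origin, gain):
    """Offset of the head from its resting `origin`, scaled per axis."""
    return tuple(g * (h - o) for h, o, g in zip(head, origin, gain))


def smooth(previous, target, alpha):
    """Exponential smoothing: move a fraction `alpha` toward `target`."""
    return tuple(p + alpha * (t - p) for p, t in zip(previous, target))


origin = (0.0, 1.6, 2.0)   # assumed resting head position in front of the fabric
gain = (1.5, 1.5, 1.0)     # assumed exaggeration of sideways and vertical motion

camera = (0.0, 0.0, 0.0)   # virtual camera starts at the "normal" viewpoint
for head in [(0.2, 1.6, 2.0), (0.2, 1.6, 2.0), (0.2, 1.6, 2.0)]:
    target = head_to_camera(head, origin, gain)
    camera = smooth(camera, target, alpha=0.5)
```

With a smoothing factor of 0.5, the camera closes half of the remaining distance to the target on each frame, so it eases toward the viewer's new position rather than snapping to it.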
