Freestyle Drone Photogrammetry

http://gph.is/2mKWBsn

Download a build of the game HERE. Controls are WASD and IJKL.

Use WASD to control forward/backward movement and roll, and IJKL to control up/down thrust and yaw.

Freestyle drone flying and racing is a growing sport and hobby. It combines aspects of more traditional RC aircraft hobbies, videography, DIY electronics and even Star Wars Pod Racing (according to some), and its pilots use first-person view (FPV) controls to fly creative, acrobatic explorations of architecture. My brother, Johnny FPV, is finding growing success in this new sport.

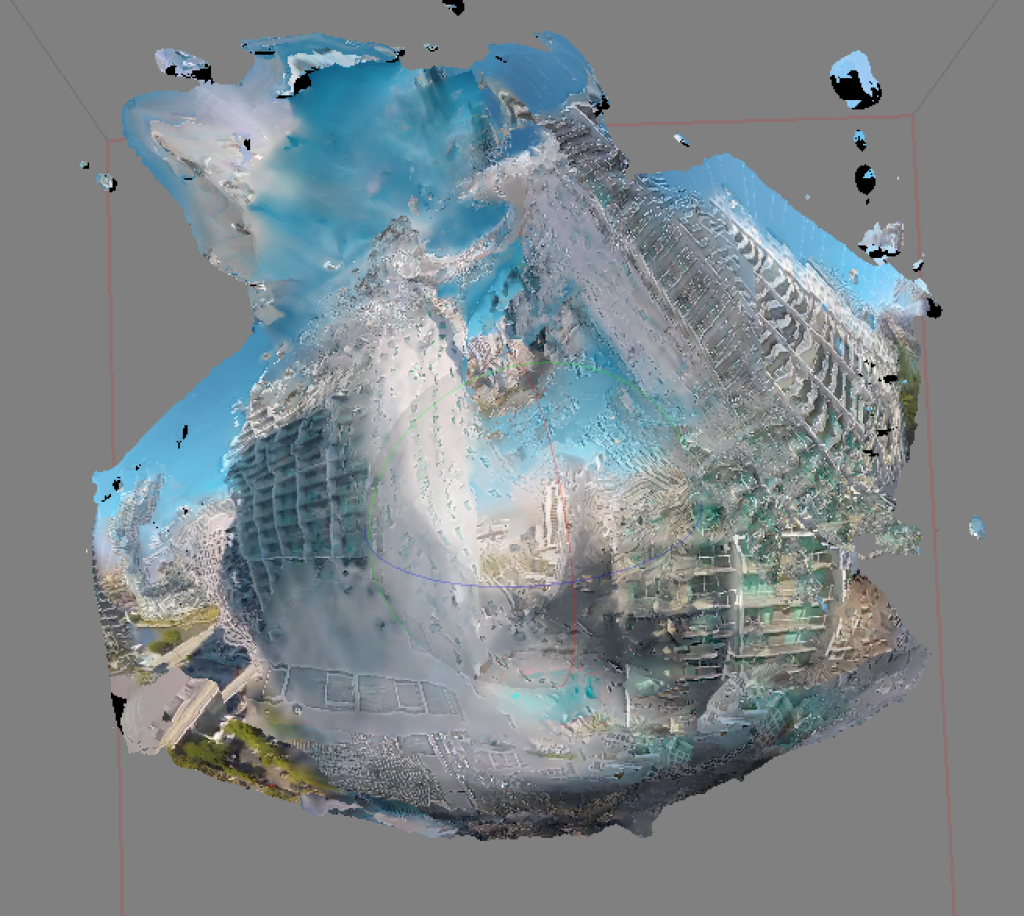

For my capture experiment, I used Johnny’s drone footage as a window into Miami’s architecture. By extracting video frames from his footage, I was able to photogrammetrically produce models of the very architecture he was flying around. While position-locked footage from videography drones such as the DJI Phantom has been shown to produce realistic 3D models, the frenetic nature of this footage produced a very different result.

http://gph.is/2mLD37d

As I produced models from the footage, I began to embrace their abstract quality. This led me to my goal of presenting them in an explorable 3D environment. Using Unity, I built a space entirely out of models generated from the drone footage. Even the outer “cave” walls are, in fact, a representation of a piece of Miami architecture. The environment lets a player pilot a drone inside this “drone-generated” world.

Technical Details & Challenges

My workflow for this project was roughly as follows. First, I downloaded the footage and clipped it into ~10-30 second scenes in which the drone flies through an interesting architectural space. In Adobe Media Encoder, I converted each clip to a whole-number framerate, then exported it as an image sequence with FFmpeg. More about my process with FFmpeg is discussed in my Instructable here. Next, I imported the images into Agisoft PhotoScan Pro and followed a normal photogrammetry workflow, making sure to correct for fisheye lens distortion. After processing the point cloud into a mesh and generating a texture, the models were ready to import into Unity.
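The FFmpeg frame-export step can be sketched as follows. This is a minimal Python sketch, not the exact command from the Instructable: it assumes the clip has already been converted to a whole-number framerate, and the helper names, input file name, start time, scene length, sampling rate (`sample_fps`) and output pattern are all hypothetical. It only builds the argument list; passing that list to `subprocess.run` would execute it.

```python
import shlex


def frame_extract_cmd(src, start_s, duration_s, sample_fps,
                      out_pattern="frame_%04d.jpg"):
    """Build an ffmpeg argument list that cuts one scene and saves it as stills.

    Hypothetical helper for illustration only.
    -ss placed before -i seeks in the input; -t limits the scene length;
    the fps filter resamples the video to `sample_fps` frames per second.
    """
    return [
        "ffmpeg",
        "-ss", str(start_s),
        "-i", src,
        "-t", str(duration_s),
        "-vf", f"fps={sample_fps}",
        "-q:v", "2",  # JPEG quality scale: lower numbers mean higher quality
        out_pattern,
    ]


def expected_frames(duration_s, sample_fps):
    """Rough count of stills a scene yields (ffmpeg may differ by a frame)."""
    return int(duration_s * sample_fps)


if __name__ == "__main__":
    # A 20-second scene starting 12.5 s into a hypothetical clip, 2 stills/s.
    cmd = frame_extract_cmd("scene_01.mp4", 12.5, 20, 2)
    print(shlex.join(cmd))
    print(expected_frames(20, 2), "frames")
    # To run it for real: subprocess.run(cmd, check=True)
```

A lower `sample_fps` gives PhotoScan fewer, more widely spaced images; a higher one gives more overlap between neighbouring frames at the cost of longer processing.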

In the end, the 3D models I was able to extract from the footage have very little structural resemblance to the oceanfront buildings they represent. If not for the textures on the models, they wouldn’t be identifiable at all (even with textures, the connection is hard to see). I found that, more often than not, the drone footage produced shapes resembling splashes and waves or otherwise heavily distorted forms, which reflected the way the drone was piloted far more than they represented the architecture.

In Unity, I imported the models and applied emissive shaders to almost all of them, choosing shaders that render both sides of each face. By default, most Unity shaders cull back faces and render only the front faces of a mesh; because my models were shells or surfaces with no thickness, I needed to render both sides. I found that making the shaders emissive made the textures, and therefore the models, much more legible.
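The geometry behind the both-sides problem can be sketched in plain Python. Under back-face culling, a triangle is drawn only when its normal, which follows the order its vertices are listed in (the winding), points back toward the camera, so a single-sided shell simply vanishes when viewed from behind. In a Unity ShaderLab shader, culling is typically switched off with `Cull Off`; the exact shaders used in this project are not documented here. The sketch also shows a mesh-side alternative: appending a reversed-winding copy of every triangle. It assumes a counter-clockwise-is-front convention (Unity's own convention is the opposite), which changes which copy is visible but not the fact that one copy faces the camera from either side. The helper names are hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Tri = Tuple[int, int, int]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def is_front_facing(verts: List[Vec3], tri: Tri, view_dir: Vec3) -> bool:
    """Back-face culling test, assuming counter-clockwise = front.

    The face normal comes from the winding order (right-hand rule); the
    face survives culling when that normal points against `view_dir`,
    the direction the camera is looking.
    """
    a, b, c = (verts[i] for i in tri)
    normal = _cross(_sub(b, a), _sub(c, a))
    return _dot(normal, view_dir) < 0


def make_double_sided(tris: List[Tri]) -> List[Tri]:
    """Append a reversed-winding copy of each triangle (hypothetical helper)."""
    return tris + [(a, c, b) for a, b, c in tris]


if __name__ == "__main__":
    verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    tris = [(0, 1, 2)]
    from_behind = (0.0, 0.0, 1.0)  # camera below the shell, looking up +z
    print(any(is_front_facing(verts, t, from_behind) for t in tris))  # False
    print(any(is_front_facing(verts, t, from_behind)
              for t in make_double_sided(tris)))  # True
```

The trade-off is that duplicating faces doubles the triangle count, which matters in a scene that already contains millions of faces, whereas a `Cull Off` shader renders both sides without extra geometry.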

I am still very much a beginner in Unity, and I realize that developing this scene into a full game would require a lot of changes. The scene currently has no colliders, so you can fly right through all of the structures. Adding mesh colliders to the structures made the game far too laggy, since the scene contains millions of mesh faces. As for the player character, making this into a true drone simulator would require mapping all the controls to an RC controller like the ones real pilots use; I don’t yet know enough about developing player controllers to make this a reality. I also need to research Unity’s reflection probes, as I would like to tweak some things about the mirror/water surface.
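One common workaround, not something tried in this project, is to give each structure a much coarser collision mesh than its render mesh. Below is a minimal Python sketch of vertex-clustering simplification; the function name and the `cell` size are hypothetical. In Unity, a simplified mesh like this could be assigned to a `MeshCollider` through its `sharedMesh` property while the full-detail mesh stays on the renderer. Larger cells remove more faces but follow the surface less closely.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Tri = Tuple[int, int, int]


def cluster_decimate(verts: List[Vec3], tris: List[Tri], cell: float
                     ) -> Tuple[List[Vec3], List[Tri]]:
    """Simplify a triangle mesh by vertex clustering (hypothetical helper).

    Vertices are binned into cubic grid cells of side `cell`; each cell's
    vertices collapse to their average position. Triangles whose corners
    end up in fewer than three distinct cells are dropped, as are repeats.
    """
    cell_index: Dict[Tuple[int, int, int], int] = {}
    sums: List[List[float]] = []
    counts: List[int] = []
    remap: List[int] = []  # old vertex index -> new vertex index
    for x, y, z in verts:
        key = (math.floor(x / cell), math.floor(y / cell), math.floor(z / cell))
        if key not in cell_index:
            cell_index[key] = len(sums)
            sums.append([0.0, 0.0, 0.0])
            counts.append(0)
        i = cell_index[key]
        sums[i][0] += x
        sums[i][1] += y
        sums[i][2] += z
        counts[i] += 1
        remap.append(i)

    new_verts = [(s[0] / n, s[1] / n, s[2] / n) for s, n in zip(sums, counts)]

    new_tris: List[Tri] = []
    seen = set()
    for a, b, c in tris:
        t = (remap[a], remap[b], remap[c])
        if len(set(t)) < 3:
            continue  # collapsed to an edge or a point
        key = tuple(sorted(t))
        if key in seen:
            continue  # another triangle already spans these three cells
        seen.add(key)
        new_tris.append(t)
    return new_verts, new_tris


if __name__ == "__main__":
    # A flat 10 x 10 grid of quads (200 triangles) standing in for a scan.
    n = 10
    verts = [(i / n, j / n, 0.0) for j in range(n + 1) for i in range(n + 1)]
    tris = []
    for j in range(n):
        for i in range(n):
            v = j * (n + 1) + i
            tris += [(v, v + 1, v + n + 2), (v, v + n + 2, v + n + 1)]
    small_verts, small_tris = cluster_decimate(verts, tris, 0.25)
    print(len(tris), "->", len(small_tris), "triangles")
```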

With help from Dan Moore, I also explored this game in VR on the HTC Vive. I quickly realized how nauseating the game was in this format, so for now I have set VR aside, though I may revisit it in the future.

What’s next?

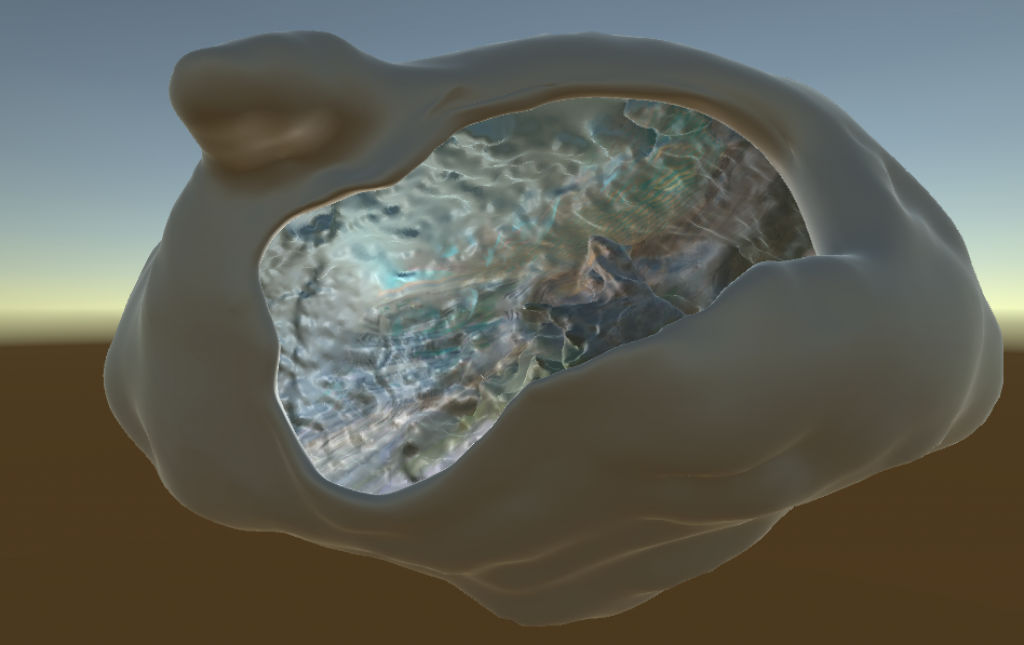

Moving forward, I might explore tangible representations of the 3D models. The image above is a rendering of a possible 3D print. I discovered that some of the models became vessel-like because the input footage captured a 360-degree world. I would like to create a print that viewers can hold in their hand, peering inside this little world to view the architecture.
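Because the models are open shells with no thickness, a print would most likely need them closed and given wall thickness first. A quick first check, sketched here in Python under the assumption that a mesh is a list of vertex-index triangles (the helper names are hypothetical), is to count how many faces share each edge: in a closed surface every edge borders exactly two faces, while edges used only once outline holes. This is a necessary condition for a watertight model, not a complete printability test.

```python
from collections import Counter


def edge_use_counts(tris):
    """Count how many triangles share each undirected edge."""
    counts = Counter()
    for a, b, c in tris:
        for u, v in ((a, b), (b, c), (c, a)):
            counts[(min(u, v), max(u, v))] += 1
    return counts


def boundary_edges(tris):
    """Edges used by exactly one triangle: the rims of holes or open shells."""
    return sorted(e for e, n in edge_use_counts(tris).items() if n == 1)


def is_closed(tris):
    """True if every edge is shared by exactly two triangles."""
    return all(n == 2 for n in edge_use_counts(tris).values())


if __name__ == "__main__":
    tetra = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (0, 2, 3)]
    print(is_closed(tetra))           # True: a closed tetrahedron
    print(boundary_edges(tetra[:3]))  # [(0, 2), (0, 3), (2, 3)]
```

Removing one face from the tetrahedron leaves a three-edge rim, which is the same kind of opening an open photogrammetry shell has around its border.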

I would also like to begin creating my own footage, using more stable drones that are better suited to videography. One possibility is to build a stereo rig from a pair of knockoff GoPros and film architecture in stereo 3D.

Comments from the Group Review

General feedback from faculty reviewers: The project is caught between using the drone as a 3D scanner (for verisimilitude) vs. making aesthetically pleasing 3D models.

Worth looking into the concept of “psychogeography”.

Why reflect symmetrically?

Actually I love the “artifacting”

Is there a relationship between where you placed the models and where the structures are in the real world/in the video?

Used her brother’s freestyle drone videography to generate 3D models with photogrammetry.

“This clip translated into this model” — but it’s hard to see that image as a 3D model — would be better if it were an animated GIF showing some motion parallax.

I’m torn between the idea of capturing a specific building legibly, versus using those materials as the basis for synthesizing something ‘psychedelic’. It’s “pretty”, but there’s a sort of arbitrariness in the VR/game environment (with all of the post-hoc symmetry, etc.)

What if you put a camera on the drone in the game and did the process over again? +1 ++++++++++ (that’s a pretty amazing idea) << lmfao

Did you think about filming with a drone yourself?

Could have been stronger if you could control the drones yourself, e.g. for 3D scanning.

Those models are really beautiful.

I like the fact that the models get their shape from the motion of the drone.

Interesting concept + sweet renderings → your vids really reflect the Miami atmosphere: pastel colors, fluffy-looking landscape.

The environment you captured correlates pretty perfectly with the photogrammetry quality. (I disagree with what Golan said about it being too ‘artistic’.) I think it looks very kitschy, but it fits with “Miami” pretty well (might not work as well for a less tacky place) → I think the tension between fiction and documentation is a good thing.

Would love to see some rotation/angle changes in the game. It feels more static than the original drone videos. +1

What happens if u pilot the virtual drone in the same way the real drone was piloted? Does the virtual imagery then match up to the video (e.g. along the inferred camera path that Agisoft imagines happens)? ^^ +1

It looks sort of post-apocalyptic, and simultaneously cave-like, especially when you can see the buildings that have been bent / distorted / chopped.

Embracing the distortion/arbitrariness/weirdness of it could be presented/documented in a way that highlights the weirdness/arbitrariness of drone piloting.

I’m slightly conflicted, because I love the 3D models you rendered through the drone videos, and I love how it looks beautiful yet apocalyptic. However, the gameplay and the drone model almost distract me from the landscape you’ve created, especially with the drone looking so “realistic”. What if it’s not a drone that flies in the model?

Why did you choose to depict where your brother lives/you live?
See work done by Irene Alvarado in the Fall 2015 ExCap class; she also used drones to do photogrammetry: https://github.com/golanlevin/ExperimentalCapture/blob/master/students/irene/final-project/final-project.md